Why Your Organisation Needs a Robust AI Policy


The European Union’s AI Act entered into force in August 2024 and will be largely applicable from 2 August 2026. This means that, in a short time, organisations operating in or with the EU must comply with new rules governing the development and use of artificial intelligence. The Act takes a risk-based approach: it bans certain harmful AI practices outright, heavily regulates “high-risk” AI systems, and imposes transparency obligations on others. Non-compliance can lead to hefty fines of up to 7% of worldwide annual turnover for the most serious violations. With the bulk of obligations applying from August 2026, now is the time for companies to act. A crucial step is establishing a robust AI policy within your organisation. This article explains why an internal AI policy is essential in light of the EU AI Act and outlines the key topics such a policy should cover to limit risks and realise AI’s benefits responsibly.

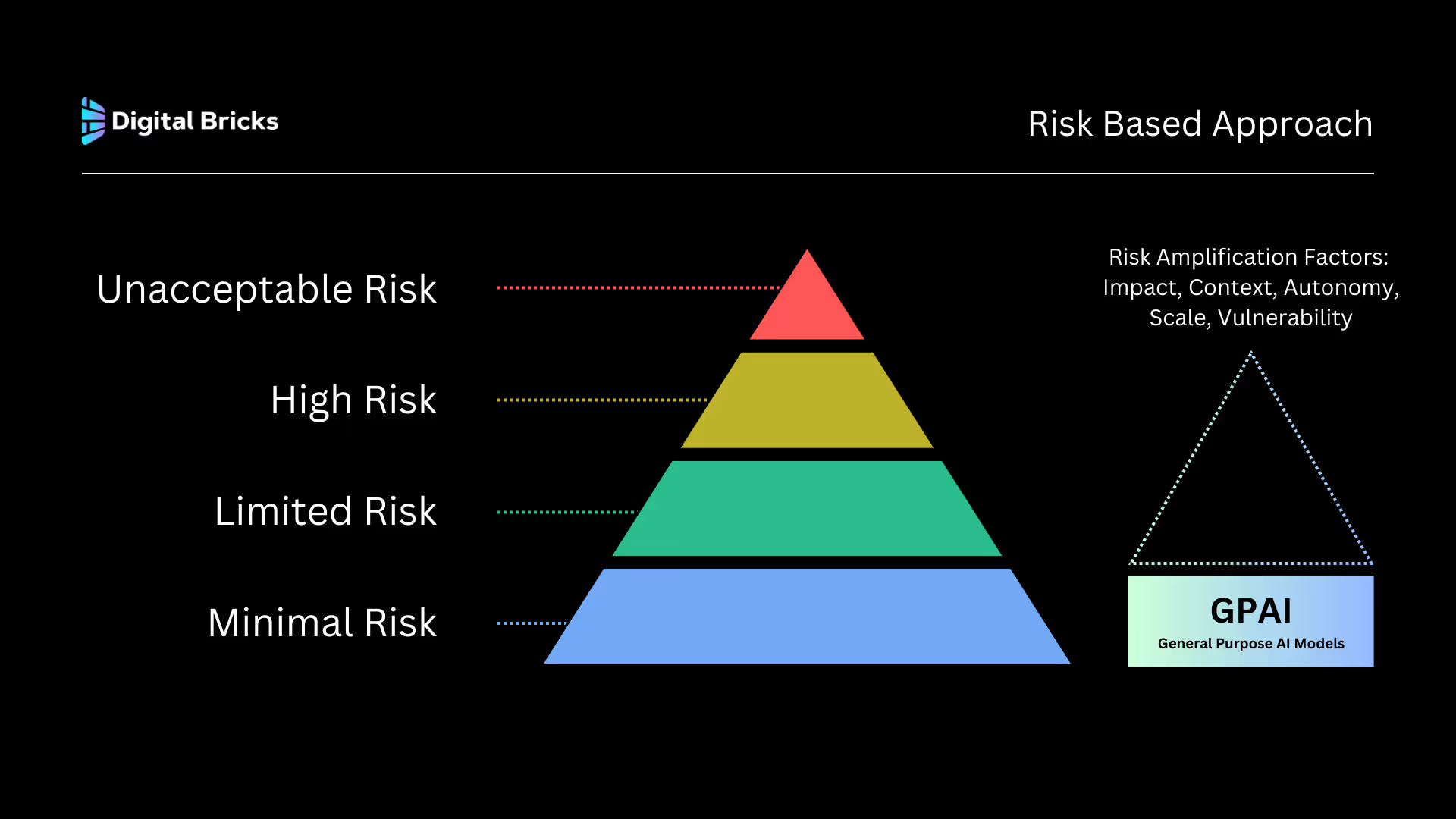

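The EU AI Act (Regulation (EU) 2024/1689) is the first comprehensive AI law, aiming to ensure AI is used safely and ethically across the EU. It categorizes AI systems by risk level: practices posing an unacceptable risk are banned outright, high-risk systems face strict obligations, limited-risk systems carry transparency duties, and minimal-risk systems are largely left unregulated.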

Time is short. Key provisions roll out in stages: the bans on prohibited practices and the AI literacy obligations are already in effect (since February 2025), the general-purpose AI rules start in August 2025, and the main obligations for high-risk systems apply from 2026. Regulators expect organisations to be ready on time. Every company that develops or deploys AI (which covers everything from a tech firm building AI software to a sales team using an AI tool) should assess its AI systems now to determine which risk category each one falls into. This is where a company-wide AI policy becomes invaluable.


Putting a robust AI policy in place is not just a legal checkbox – it’s a business imperative. Here’s why it matters, especially in light of the EU AI Act’s looming enforcement.

An internal AI policy translates the complex legal requirements of the AI Act into practical guidelines for your staff. By clearly spelling out what is and isn’t allowed, your organization can avoid violations that lead to fines or sanctions. For instance, a policy can ban outright the use of AI for any prohibited practices (like social scoring or manipulative techniques) in your projects, mirroring the law. It can also mandate that any AI considered “high-risk” undergo proper vetting and comply with the Act’s safeguards (risk assessments, documentation, human oversight, etc.). Remember that under the Act, regulators can impose fines of up to 7% of global annual turnover for the gravest breaches (such as using banned AI) and up to 3% for other compliance failures. A strong policy helps steer your organization well clear of these penalties.
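To put those percentages in perspective, here is a minimal Python sketch of how the fine ceilings work. It assumes the two main tiers in Article 99 of the Act as we read them: for prohibited practices, up to €35 million or 7% of worldwide annual turnover, whichever is higher; for most other obligations, up to €15 million or 3%, whichever is higher. For SMEs and start-ups, the lower of the two figures applies instead. The function name and tier labels are our own, and the result is a statutory ceiling only, not a prediction of any actual fine.

```python
# Hypothetical helper illustrating AI Act fine ceilings (Article 99).
# Statutory maximums only; actual fines are set case by case.

# tier -> (fixed amount in EUR, percentage of worldwide annual turnover)
FINE_CEILINGS = {
    "prohibited_practice": (35_000_000, 7),
    "other_obligation": (15_000_000, 3),
}


def max_fine_eur(tier: str, worldwide_turnover_eur: int, is_sme: bool = False) -> float:
    """Return the upper limit of the fine for a given violation tier."""
    fixed_amount, pct = FINE_CEILINGS[tier]
    turnover_based = worldwide_turnover_eur * pct / 100
    # Larger undertakings: whichever is higher. SMEs and start-ups: whichever is lower.
    if is_sme:
        return min(fixed_amount, turnover_based)
    return max(fixed_amount, turnover_based)


if __name__ == "__main__":
    ceiling = max_fine_eur("prohibited_practice", 2_000_000_000)
    print(f"Maximum fine: EUR {ceiling:,.0f}")
```

For a company with €2 billion in turnover, 7% comes to €140 million, which exceeds the fixed €35 million, so the turnover-based figure sets the ceiling for a prohibited-practice breach.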

Beyond avoiding legal risk, a well-crafted AI policy demonstrates your organization’s commitment to ethical and responsible AI. This can greatly enhance trust among customers, partners, and employees. The EU AI Act’s ethos is about protecting fundamental rights and safety – aligning your internal practices with that ethos shows stakeholders that you use AI to help, not harm. In fields like tech and consulting, clients increasingly demand assurances that AI-driven solutions are fair and transparent. In sales and marketing, consumers expect AI (say, in personalized ads or pricing) not to cross ethical lines. A public-facing stance on responsible AI, backed by an internal policy, can thus be a competitive advantage.


Implementing an AI policy forces a company to take stock of its AI uses and put governance in place. This readiness has immediate benefits. By 2026, for example, if you deploy any high-risk AI system (perhaps an HR department using AI for CV screening, or a finance team using AI for credit decisions), you’ll need processes for human oversight, record-keeping, and informing affected people. It’s far easier to build those processes now than to scramble later. A policy can assign clear roles (e.g. who in the org must approve an AI use case, who monitors outcomes) so that when new AI projects arise, teams follow a defined protocol. This reduces confusion and inconsistency, ensuring AI is integrated smoothly and safely into operations.

The EU AI Act introduces an “AI literacy” obligation: companies that provide or use AI systems must ensure their staff have sufficient understanding of AI. In practice, this means training employees on AI’s capabilities, its limitations, and the legal duties that come with it. A robust AI policy underpins this culture change: it can serve as the foundation for training programs and everyday decision-making. In fact, legal and compliance commentators widely recommend linking AI literacy training with a company AI policy. The policy sets the “rules of the road” for AI use in your organization, and training builds the skills and mindset to follow those rules. Together, they foster an informed workforce that can leverage AI responsibly and spot potential issues early.


Almost every sector is touched by AI today. Whether you’re a tech firm, a consultancy, or a sales-driven enterprise, you likely use AI tools – from machine learning models in products, to analytics services, to customer-facing chatbots. The AI Act’s requirements are sweeping: they apply not just to AI developers but also to companies that deploy third-party AI systems in their business processes. Therefore, an AI policy is not just for Silicon Valley R&D teams. It must speak to various departments (IT, HR, marketing, etc.) and business lines, guiding them on compliant and beneficial AI use. For example, a consulting company’s policy might govern how consultants utilize AI in client projects or ensure any advice on AI solutions aligns with EU norms. A retail or sales company’s policy might set rules for using AI in customer profiling or pricing so it doesn’t become discriminatory or opaque. In all cases, a one-size-fits-all approach won’t work – but a coherent policy framework can be adapted across the organization to address each context.

In short, a robust AI policy acts as an internal compass for navigating AI opportunities and risks. It operationalizes the principles of the EU AI Act, ensuring compliance, encouraging ethical innovation, and preparing your people for the changes ahead.

What should your organization’s AI policy actually include? Below are key topics and guidelines that a comprehensive AI policy should cover to align with the EU AI Act and general best practices for responsible AI.

Affirm that all AI activities will comply with applicable laws and regulations, explicitly referencing the EU AI Act. The policy should prohibit any AI practices banned by the Act, such as social scoring or prohibited forms of biometric identification and surveillance. It should also require that “high-risk” AI systems are identified and handled according to the Act’s rules, meaning they must have passed the required conformity assessment before use and must be monitored continuously once in operation. This section of the policy essentially translates regulatory requirements (such as the Article 5 prohibitions and the Annex III high-risk categories) into the company context, so everyone knows the non-negotiables.

Define who in the organization is responsible for AI oversight. For example, appoint an AI Compliance Officer or Committee that will review and approve high-risk AI deployments, maintain the AI inventory, and update the policy as needed. Clarify that project managers or product owners deploying AI have the duty to ensure those systems adhere to the policy (with support from legal/compliance teams). Having clear ownership and escalation paths is crucial for accountability – and regulators will expect it as part of the “organizational measures” for compliance.


Establish a process to classify AI systems by risk level (for example, following the Act’s categories: unacceptable, high, limited, and minimal risk) whenever a new AI tool or project is proposed. The policy should require a pre-implementation risk assessment for any AI system that could be high-risk. This includes evaluating the potential impact on individuals’ rights or safety, checking for biases, and so on. If a system is deemed high-risk (such as an AI used in recruitment or loan approvals), the policy might mandate additional steps before deployment: conducting a conformity assessment or audit (or, for a system bought from a provider, verifying its conformity documentation), preparing the required documentation, and obtaining management sign-off that all of the Act’s obligations are met (data quality, transparency, human oversight, etc.). Lower-risk AI applications might follow a simpler approval path but should still be logged and reviewed for any ethical red flags.
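The triage step can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a legal classification: the practice and area labels below are hypothetical placeholders standing in for the full lists in Article 5 and Annex III, the per-tier steps are an example policy of our own, and any result should be confirmed by legal or compliance before sign-off.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels; a real policy would map these to Article 5 and Annex III in full.
PROHIBITED_PRACTICES = {"social_scoring", "workplace_emotion_recognition"}
HIGH_RISK_AREAS = {"recruitment", "credit_scoring", "education_admission"}

# Hypothetical internal steps the policy attaches to each tier.
REQUIRED_STEPS = {
    RiskTier.UNACCEPTABLE: ["reject proposal"],
    RiskTier.HIGH: [
        "pre-implementation risk assessment",
        "verify conformity documentation",
        "define human oversight",
        "management sign-off",
    ],
    RiskTier.LIMITED: ["prepare transparency notice", "register in AI inventory"],
    RiskTier.MINIMAL: ["register in AI inventory"],
}


@dataclass
class UseCase:
    name: str
    practices: set[str] = field(default_factory=set)  # what the system does
    area: str = ""  # business domain it is used in
    interacts_with_people: bool = False  # e.g. a customer-facing chatbot
    generates_content: bool = False  # e.g. images or text for publication


def triage(use_case: UseCase) -> RiskTier:
    """First-pass risk tier; legal/compliance must confirm the result."""
    # Check bans first, so a prohibited use is rejected even in a high-risk area.
    if use_case.practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if use_case.area in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if use_case.interacts_with_people or use_case.generates_content:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    cv_screening = UseCase("CV screening tool", area="recruitment")
    tier = triage(cv_screening)
    print(tier.value, REQUIRED_STEPS[tier])
```

The order of the checks is the key design choice: the most restrictive category is tested first, so a use case never slips into a lighter approval path because it also matches a less serious one.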

AI systems are only as good as the data they are trained on. The policy should include guidelines to ensure high-quality, representative data and avoid discriminatory outcomes, echoing the Act’s requirements for high-risk AI. It should also cover privacy and security: compliance with GDPR when personal data is involved, data minimization, and measures to secure data and models against unauthorized access.


For instance, if your sales department uses an AI tool on customer data, the policy would remind staff to respect privacy laws and might require a Data Protection Impact Assessment (DPIA) where the processing is likely to pose a high risk to individuals, for example when sensitive data is involved. The Act ties into this directly: deployers of high-risk AI must use the information supplied by the provider when carrying out their DPIAs.


Reinforce that AI does not have the final say in matters that significantly affect people. The policy should mandate appropriate human oversight for AI-driven decisions, especially high-stakes ones. Concretely, this could mean specifying that a human must review or can override AI outputs in hiring decisions, credit evaluations, medical diagnoses, etc. The EU AI Act explicitly requires human oversight mechanisms for high-risk AI and that users (deployers) assign trained staff to supervise AI systems. Your policy can detail how this oversight is implemented in practice – for example, “Any AI-based recommendation in our HR recruitment system must be reviewed by HR staff before a final decision is made” or “If our customer service chatbot flags a possible emergency situation, a human agent should take over immediately.” By codifying these rules, you not only comply with the Act but also reduce the chance of AI errors going unchecked.

Include guidelines for transparency both internally and externally. Internally, employees using AI should know the AI’s purpose, its limitations, and when they are interacting with an AI. Externally, the policy should commit to informing individuals when AI is used in decisions or services that affect them, as required by the Act for high-risk systems. For instance, if an AI system is used to assess job applicants or to determine insurance eligibility, those individuals must be notified that an AI is involved. Likewise, if the organization publishes or disseminates AI-generated content (like marketing material, reports, or media), the policy should require labeling it as AI-generated where appropriate. The EU AI Act enforces this for certain content: deepfake images, audio, or video must be disclosed as artificially generated or manipulated, and AI-generated text published to inform the public on matters of public interest must be disclosed as such, unless it has undergone human review or editorial control and someone holds editorial responsibility for it. Being honest about AI involvement maintains trust and keeps you compliant with these transparency rules.


As mentioned, raising AI literacy is now a legal obligation, and it is also key to effective organizational change. The policy should require regular training for employees on AI ethics, the contents of the AI Act, and the company’s own AI guidelines. It might stipulate that all staff complete an “AI use training module” annually, or that specialized teams get advanced training on managing AI risks. The policy can also serve as a reference document in these trainings. Legal commentators widely recommend making a general AI policy the centerpiece of an AI literacy program, helping staff understand permitted AI uses and linking to further resources. In practice, this means your AI policy shouldn’t sit in a drawer: it should be actively communicated (via workshops, the intranet, etc.) so that using AI responsibly becomes part of the company culture.

Outline how the organization will monitor AI systems in operation. This includes keeping the logs that high-risk AI systems generate automatically (the Act requires deployers to retain them, to the extent the logs are under their control, for at least six months unless other applicable law provides otherwise) and tracking performance metrics to catch issues like bias or rising error rates over time. The policy might establish periodic audits or reviews of key AI systems to ensure they are functioning as intended and staying compliant. Additionally, include an incident response plan: if an AI system malfunctions or causes a potential legal or ethical issue (for example, a serious incident reportable under the Act, or simply a customer-facing error), how should employees escalate it, and how will the company address it? Consider designating a team to investigate AI incidents and a protocol for informing stakeholders or regulators if necessary. Having this in the policy ensures the company can react swiftly and transparently to any AI-related problems.
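Log retention is one of the few monitoring rules with a concrete number, so it lends itself to a small sketch. The Python below assumes a hypothetical purge policy in which a log entry may only be deleted once it has been kept for six full calendar months; the helper names are our own, and your actual period may be longer or shaped by other law (for example, data protection rules).

```python
import calendar
from datetime import date

# Assumed policy floor: at least six months for logs of high-risk systems.
MIN_RETENTION_MONTHS = 6


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole calendar months, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def earliest_deletion_date(log_created: date, retention_months: int = MIN_RETENTION_MONTHS) -> date:
    """First day on which a log entry may be purged under this hypothetical policy."""
    return add_months(log_created, retention_months)


def may_delete(log_created: date, today: date, retention_months: int = MIN_RETENTION_MONTHS) -> bool:
    """True only once the entry has been retained for the full period."""
    return today >= earliest_deletion_date(log_created, retention_months)


if __name__ == "__main__":
    created = date(2026, 8, 31)
    print(earliest_deletion_date(created))
```

Counting in calendar months rather than a fixed number of days (say, 180) keeps the rule aligned with how the retention period is phrased, since six months can be longer than 180 days.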

Many organizations obtain AI tools or services from vendors. The policy should cover due diligence on third-party AI solutions – for instance, requiring vendors to demonstrate compliance with the EU AI Act (if they’re providing a high-risk system, have they CE-marked it or provided conformity documentation?). It could also require contract clauses that oblige vendors to inform you of any changes or incidents with their AI. Essentially, make sure that outsourced AI doesn’t become a compliance blind spot. If your sales team wants to adopt a new AI-driven CRM analytics tool, the policy’s procurement rules would kick in to vet that tool’s adherence to necessary standards before use.

Finally, state that the AI policy itself will be periodically reviewed and updated. AI technology and regulations are evolving rapidly – the policy should be a living document. Commit to reviewing it at least annually or whenever there are significant legal changes (for example, if EU regulators issue new guidelines or the Act gets amended). Also, as your organization’s use of AI matures, the policy might need to expand with more specific standards (e.g. addressing new use cases like generative AI for design, or new best practices). Keeping it updated ensures continuous alignment with both law and innovation.

By covering these elements, your AI policy will serve as a comprehensive guide for responsible AI use. It provides clarity to employees on how to innovate with AI safely and lawfully, and it creates a documented system of control that regulators will expect to see if they ever assess your compliance measures.

Crafting a policy is only half the battle – implementing it effectively is where organizational change comes in. Adopting a robust AI policy should be viewed as part of a broader shift towards a “compliance and ethics by design” culture in the age of AI. Here are some considerations to ensure the policy truly makes an impact:

Leadership should not only approve the AI policy but champion it. C-suite executives and division heads in tech, consulting, sales, etc., need to openly communicate why the policy matters – linking it to the company’s values, risk management, and long-term strategy. When top management emphasizes responsible AI use, it sends a message that compliance and ethics are a priority, not a bureaucratic hurdle.

Implementing AI governance isn’t just an IT or legal issue – it involves multiple functions. Set up a cross-functional AI governance team (if you haven’t already) with representatives from Legal/Compliance, IT/Data Science, Security, HR, and business units. This team can oversee the rollout of the policy: updating procedures, selecting training content, and advising project teams. For example, if a marketing team wants to use a new AI-driven personalization tool, they should consult this team or follow a workflow established by it to ensure the tool’s use aligns with the policy (checking for any transparency or bias concerns, etc.).

Introduce the AI policy to the entire organization through clear communications – e.g., internal webinars, FAQs, and practical examples of “dos and don’ts” drawn from the policy. Make training sessions engaging, with real case studies (both successes and cautionary tales) of AI in business. The goal is to make employees personally invested in following the guidelines, understanding that it protects both the company and themselves. Encourage employees to ask questions and even provide feedback on the policy – this helps identify areas where more clarity might be needed. We strongly recommend developing a clear set of employee-facing guidelines based on your AI policy. Think of these as a simplified and practical interpretation of the policy, designed to help teams across the organisation understand what the rules mean in their day-to-day work. By translating the formal document into accessible language and real-world examples, you’ll ensure the policy is not only read, but followed.


A change-oriented approach will empower staff at all levels to take responsibility. Encourage a mindset where employees feel comfortable flagging concerns about an AI tool or suggesting improvements. Perhaps implement an internal reporting channel for any AI ethics or compliance issues encountered. By surfacing problems early (say, a salesperson notices an AI lead-scoring system seems to be excluding a demographic unreasonably), the organization can address them before they escalate. Tie this into the broader ethics culture – just as employees might report a safety or harassment issue, they should treat AI impacts with similar seriousness.

Emphasize that complying with the EU AI Act via a strong policy is not just about avoiding fines; it’s about unlocking AI’s benefits responsibly. When everyone knows the guardrails, they can innovate with confidence. For instance, developers can experiment with AI features knowing the criteria they must meet, rather than fearing later shutdowns due to non-compliance. Sales teams can enthusiastically adopt AI-driven insights, knowing there’s a process to ensure those insights are fair and accurate. Essentially, a well-implemented policy enables “responsible agility” – you can move fast on AI, because you’ve built safety into the process.

As time passes, track your organization’s readiness milestones. This could include metrics like “% of high-risk AI systems inventoried and assessed” or “number of staff trained in AI policy”. Regularly report on progress to senior leadership. Celebrate achievements – for example, highlight a project team that successfully deployed an AI solution fully compliant with the new policy, or an instance where an AI risk was caught and mitigated thanks to the policy’s processes. Recognizing these wins reinforces positive behavior and shows that the policy is making a difference.
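Both readiness metrics are simple ratios. The Python sketch below assumes a hypothetical AI inventory in which each system carries a high-risk flag and an “assessed” flag; the class and function names are illustrative. Note that it can only measure systems already recorded in the inventory, so the inventory itself must be complete for the percentage to mean anything.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AISystem:
    name: str
    high_risk: bool  # classified as high-risk during triage
    assessed: bool  # risk assessment completed and signed off


def pct_high_risk_assessed(inventory: list[AISystem]) -> Optional[float]:
    """Percentage of inventoried high-risk systems that are assessed; None if there are none."""
    high_risk = [s for s in inventory if s.high_risk]
    if not high_risk:
        return None  # metric not applicable, rather than a misleading 0% or 100%
    return 100 * sum(s.assessed for s in high_risk) / len(high_risk)


def pct_staff_trained(trained: int, headcount: int) -> float:
    """Percentage of staff who completed the AI policy training."""
    if headcount <= 0:
        raise ValueError("headcount must be positive")
    return 100 * trained / headcount


if __name__ == "__main__":
    inventory = [
        AISystem("CV screening tool", high_risk=True, assessed=True),
        AISystem("Credit scoring model", high_risk=True, assessed=False),
        AISystem("Email spam filter", high_risk=False, assessed=False),
    ]
    print(f"High-risk systems assessed: {pct_high_risk_assessed(inventory):.0f}%")
    print(f"Staff trained: {pct_staff_trained(45, 60):.0f}%")
```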

Ultimately, the legislation should be seen as a catalyst for positive change. By putting a robust AI policy in place and infusing its principles throughout your organization, you aren’t just avoiding legal trouble – you are building a culture of trust, innovation, and accountability. This positions your organization to harness AI’s tremendous potential in a sustainable way.

Now is the time to act. We know developing a comprehensive AI policy can feel like a big task, and it is! At Digital Bricks, we’ve been through it ourselves and have supported organisations across sectors in navigating these changes. We conduct a thorough AI readiness check, identify risk areas, and work closely with you to design a robust, actionable policy aligned with your operations and the EU AI Act. With the right preparation, you’ll be leading the way in responsible, future-proof AI adoption.