The 10 Best AI Video Generators for Content Creation

Video content in the last few years has taken the internet by storm. Short clips on TikTok, Instagram Reels, and YouTube Shorts are racking up billions of views, while longer videos on YouTube continue to captivate global audiences. In fact, by 2025 video is projected to account for over 80% of all internet traffic. And for today’s top creators, this video boom is paying off – literally. TikTok superstars like Charli D’Amelio can reportedly earn north of $100,000 for a single post, illustrating how lucrative short-form video has become. Video isn’t just another piece of content; it’s the essence of modern content creation and online entertainment.

Now, a new twist is redefining how videos get made: AI-generated video. Imagine describing an idea in text and having an AI turn it into a short movie – no camera crew or editing suite required. This technology offers an incredible opportunity for creatives to bring their wildest ideas to life with just a prompt. In other words, the power of video production is moving into the hands of anyone with an adventurous imagination and a knack for crafting prompts. This emerging field of AI video generation is ushering in a new era of creation, one where solo content creators, tech enthusiasts and marketing teams alike can instantly generate compelling videos by simply telling an AI what they envision.

For marketers, this is especially exciting. Marketing teams now have the ability to whip up engaging promotional videos on demand, scaling up their “AI marketing” campaigns without the usual high costs and lead times. Need a snappy product demo or a personalised ad? An AI video generator can produce it overnight, allowing brands to respond faster to trends and customer interests. It’s a game-changer that promises faster content cycles and endless creative variations – all driven by smart algorithms and prompt engineering magic behind the scenes.

In this article, we’ll explore the top ten AI video generation models leading the charge in 2025. These are ten of the best AI video generators available today, each pushing the boundaries of creativity and putting a virtual film studio at your fingertips. But first, let’s briefly cover what AI video generators are and how they work.

Simply put, AI video generators are systems that create moving images (videos) from a given input – whether that input is text, images, or even other videos. They build on recent breakthroughs in generative AI that we’ve seen with tools for images (like DALL·E and Stable Diffusion), but add an extra dimension: time. Instead of producing a single picture, these models produce a sequence of frames that form a coherent video clip.

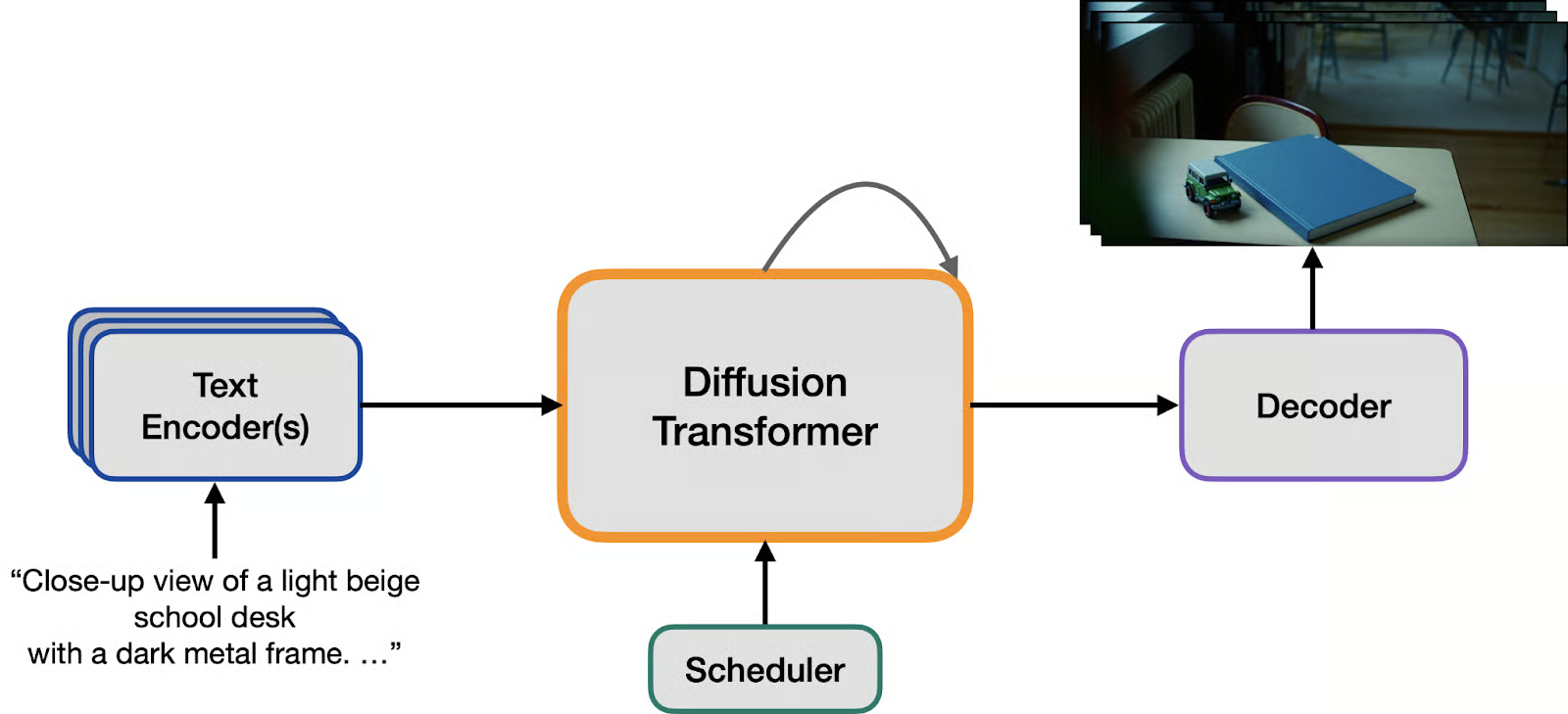

Under the hood, most of these AI video generators follow a similar workflow. The system takes a text prompt (or an image prompt) and feeds it through a text encoder to grasp the nuances of the request. Then it starts with random noise and gradually “dens” (or refines) that noise into meaningful frames using a denoising network guided by a scheduler. In essence, the AI is imagining how each frame should look, one step at a time, while keeping the sequence consistent from start to finish. Additional encoders and decoders convert data between raw pixels and a compressed latent space to make the process efficient. Unlike a static image generator, a video generator has to ensure temporal coherence – the scene at the end of the clip should still match what it was at the beginning. That means characters shouldn’t magically change outfits mid-video (unless prompted!), and the lighting and camera angles should flow naturally. Some advanced models even generate audio in sync with the visuals, so you get music, sound effects, or dialogue that matches the action.

The result? You type a description, and the AI produces a short video clip that tries to match it. It feels a bit like science fiction, but this is the reality of AI video generation in 2025. Below, we’ll dive into ten leading models — a mix of cutting-edge systems from tech giants and open-source projects — that are defining this nascent field. Each model has its own strengths, whether it’s ultra-realistic visuals, stylistic animation, speed of generation, or ease of use. Let’s explore what each brings to the table.

Veo 3 is Google’s state-of-the-art video generation model, and it’s setting a high bar for quality. Veo 3 can produce high-fidelity clips up to about 8 seconds long at 720p or 1080p resolution (widescreen 16:9) with smooth 24fps motion and native audio included. In other words, the videos don’t come out silent – this model generates sound to match the footage, whether that’s dialogue, background ambience, or sound effects. It’s available through Google’s Gemini AI API and represents the cutting edge of what Google’s research has achieved in generative video.

What makes Veo 3 shine is its cinematic flair and consistency. It excels at turning text prompts into scenes that look like they’ve come from a professional film shoot. Think coherent lighting and depth-of-field, rich filmic colours (no glitchy surreal tinge unless intended), and stable visuals from frame to frame. If you prompt it with a dramatic movie scene, you’ll get something with appropriate lighting and camera angles – you might even get characters speaking the quoted lines from your prompt with lips synced to the audio. Veo 3 is particularly praised for handling dialogue-driven scenes and creative animation well. It keeps characters on-model throughout the clip and maintains the overall look without strange flickers or continuity errors. Essentially, Veo 3 strives to give you a miniature Hollywood clip: consistent, polished, and with both video and sound in sync for maximum realism.

Sora 2 is another top-tier AI video generator – in fact, this is OpenAI’s flagship text-to-video system. The second iteration of Sora builds upon the original by adding something creators had been craving: synchronised audio alongside the visuals. With Sora 2, when you prompt a scene, you get the whole package in one go – moving images plus matching audio (dialogue, ambient sounds, and sound effects). This all-in-one approach allows for far more cohesive storytelling because the sights and sounds are generated together, ensuring they line up perfectly.

Aside from audio, Sora 2 focuses on realism and continuity. It has improved understanding of physics and the physical plausibility of actions, meaning if you prompt a person juggling or a glass of water tipping over, the model tries to respect real-world behavior (objects have weight, things don’t just pass through each other, etc.). It’s also better at maintaining consistency across multiple shots. For example, if your video prompt involves a character moving from one room to another in two different shots, Sora 2 will keep that character’s appearance consistent, keep the lighting appropriate in each room, and preserve the “world state” so it feels like one continuous story. Stylistically, it’s versatile – you can request anything from photorealistic footage to Pixar-like animation or stylised anime, and Sora 2 will adapt its output accordingly. A quirky new feature in Sora 2 is its ability to simulate failures: you can actually prompt scenarios like a stunt performer missing a jump or someone slipping on a banana peel, and the AI will produce the fumble. This might sound niche, but it’s very useful for filmmakers planning out scenes (previsualization) or exploring safety scenarios. All told, Sora 2 is a powerful, well-rounded AI video generator, bringing us closer to one-click short films with sound and narrative cohesion.

PixVerse V5 is the latest release from the PixVerse platform, and it marks a big upgrade over their previous 4.5 version. This model stands out for its speed and visual sharpness. It offers both text-to-video and image-to-video generation, meaning you can either start from scratch with a line of text or feed it an image to animate, and PixVerse V5 will handle it quickly while delivering high-quality, cinematic visuals. Creators have noted that the motion in PixVerse V5’s outputs is impressively smooth and expressive – gone is the stiff, jerky movement that earlier generation tools sometimes produced. If you ask for a dancing scene, you’ll actually get fluid dance motions with a sense of weight and timing.

PixVerse V5’s development focused on three pillars of realism: motion, consistency, and detail. The model produces more natural movement; for instance, camera pans and character animations have a nice flow, and characters move as if they have weight (no more floaty, ghost-like actions). It also nails temporal consistency – styles, colours, and subjects remain coherent across all frames. That means if you’re generating a video of a red car driving at sunset, the car stays the same shade of red throughout, and the sunset lighting doesn’t suddenly flicker or change incorrectly frame-to-frame. Details are another strong suit: PixVerse clips often come out crisp and film-worthy in terms of image clarity. And crucially, the model is good at following the prompt faithfully. If you specify a certain atmosphere or object in the prompt, PixVerse V5 usually includes it with very little deviation – your direction translates cleanly to what you see on screen. For content creators, this reliability is golden: you spend less time re-rolling the AI output because it got the colour tone or subject wrong, and more time refining the prompt to get exactly the scene you want.

The name Kling 2.5 Turbo hints that this model is all about power and speed. It’s the newest upgrade in the Kling AI video generation suite and is built for next-level creative freedom with less wait time. In practical terms, Kling 2.5 Turbo generates videos faster than many competitors and lets you iterate quickly, which is great when you’re tweaking a scene or trying multiple ideas. It handles both text-to-video and image-to-video generation, now with stronger prompt adherence and advanced camera control features. That means if you feed it a detailed script or storyboard, it’s more likely to nail the specifics than earlier versions – fewer surprises, more of what you envisioned.

Visually, Kling 2.5 Turbo puts an emphasis on film-grade aesthetics. The frames it produces tend to be sharp, with balanced lighting and rich colours that give scenes a polished, professional look. It’s as if the AI has a built-in cinematographer ensuring each shot is well-composed. One highlight is how well it handles camera movements: you can prompt complex actions like smooth pans, zoom-ins, or drone-like aerial shots, and the model will execute them in a way that feels like a human camera operator did it. This level of camera control in an AI generator is a boon for creators wanting dynamic shots.

Kling 2.5 Turbo also steps up the realism by being physics-aware in its motion. It knows about gravity and impacts, meaning if something falls or collides in your scene, the motion will reflect a believable physical response. Liquids will slosh, objects will bounce or break in a natural manner, and characters’ movements will show momentum. Even facial expressions and character performances got an upgrade – emotions and reactions appear more lifelike, which makes the resulting video more convincing. All these improvements come together to make Kling 2.5 Turbo a favorite for creators who want both speed and high fidelity. You can dream up a scene with detailed action and camera directions, and watch it unfold on screen within minutes, rendered with a cinematic touch.

Hailuo 02 (from MiniMax) is a next-generation model that’s been turning heads, particularly for those interested in high-resolution output and physical realism. Unlike some models that max out at lower resolutions, Hailuo 02 is designed for native 1080p video generation from the ground up. So the clarity and detail in its clips are top-notch, suitable for full HD displays without upscaling. It’s also a champion at understanding and following detailed instructions (what AI researchers call instruction following). If you give Hailuo 02 a complex prompt with multiple elements or a very specific scenario, it shows an exceptional proficiency in interpreting and executing those instructions faithfully.

One of the technical breakthroughs behind Hailuo 02 is a new architecture called Noise-Aware Compute Redistribution (NCR). Without diving too deep into the math, NCR basically helps the model use its computing power more efficiently during the generation process. The result is about 2.5× better efficiency at similar model sizes. This efficiency was reinvested into making Hailuo 02 much larger and more trained than its predecessor (3× the model size, trained on 4× more data) without increasing the cost for creators using it. In plain terms: it’s a beefier, smarter model that doesn’t cost more to run, thanks to those optimisations.

What does this mean for the videos? Hailuo 02 can produce scenes that are both intricate and physically authentic. For example, it shines with scenarios like sports or stunts where body movement and timing are critical. The model can handle something like a gymnastics routine or a martial arts sequence, getting the balance and gravity right so it feels real. Frames come out clearer and more stable, even in fast motion, and the temporal consistency is rock-solid (no weird blurs or morphing mid-action). Hailuo 02 is the kind of AI you’d turn to for generating an action scene or any video where you need that high-definition crispness combined with trustworthy physics. It’s making AI-generated video a viable option for more demanding uses where previously you’d worry an AI might trip up on the details.

From the company behind TikTok (ByteDance) comes Seedance 1.0, a high-quality video generator built with storytelling in mind. Seedance 1.0 is crafted to produce smooth, stable motion and can handle multi-shot videos natively. This means it isn’t limited to just one continuous scene – you can have it generate a short sequence that involves different shots or camera cuts, and it will maintain consistency across them. For marketers or creators who want to string together a mini narrative (say, different angles of a product or a short skit), that’s a huge plus.

Technically, Seedance 1.0 supports both text-to-video (T2V) and image-to-video (I2V) workflows. You can feed it a pure text prompt, or give it a starting image to base the video on. It has a wide dynamic range in terms of motion: it’s as adept at capturing subtle facial expressions or gentle movements as it is at large-scale, fast action scenes. The key is that it maintains stability and physical realism throughout. If multiple actors or objects are interacting, Seedance handles those multi-agent scenarios gracefully, and if the camera is doing something complex (like swooping around), the output remains smooth rather than jittery.

A standout feature of Seedance 1.0 is its ability to keep a coherent style and subject continuity through transitions in space or time. This is essential for storytelling – you don’t want your main character’s appearance to change when you cut to a new scene, or the overall colour tone to suddenly shift unless it’s intentional. Seedance ensures that if you generate a sequence of shots, the main subject, visual style, and atmosphere remain consistent from one shot to the next. It basically understands that all those shots are part of one story. Additionally, it outputs in HD (1080p) with strong visual detail, giving the final video a polished, film-like feel. Whether you’re crafting a short ad with multiple scenes or an animated story for fun, Seedance 1.0 brings a balance of creative flexibility and reliable quality that makes the process easier.

Not all top AI video tools are closed-source corporate projects – Wan 2.2 is proof of the innovation happening in the open-source community. Wan 2.2 is an advanced large-scale video generator that is completely open-source, meaning its code and model weights are freely available for anyone to use or modify. It’s the successor to a model called Wan 2.1, and it introduces a clever architecture upgrade: a Mixture-of-Experts (MoE) diffusion design. In an MoE model, different “experts” (sub-networks) specialise in different parts of the task and the system smartly routes the work among them. Wan 2.2 uses this to handle the denoising process more efficiently – essentially, it can pack in more capability without needing dramatically more computing power.

The practicality of Wan 2.2 is a big selling point. Because it’s open, the developers have even released a version of the model that can run on a high-end consumer GPU (like an NVIDIA 4090). In fact, they have a 5 billion-parameter hybrid model that can generate 720p video at 24fps on a single 4090 card. That’s impressive, considering how heavy video generation usually is. They also provide larger 14-billion-parameter models for those with more computing resources, targeting outputs at 480p and 720p for different needs. In short, whether you’re a researcher, an indie developer, or just a hobbyist with a powerful PC, Wan 2.2 gives you a capable AI video generator you can actually run yourself.

In terms of output, Wan 2.2 doesn’t slouch either. It’s known for creating videos with a lot of cinematic control and believable motion. Thanks to the Mixture-of-Experts approach, one part of the model can focus on the broad strokes (like the overall scene layout in early noisy frames) while another hones in on fine details in later stages. The end product tends to have clean compositions, crisp textures, and stable coherence over time. Plus, the team behind Wan 2.2 included a set of curated aesthetic style labels you can use in prompts – these give you precise control over things like lighting style, colour grading, or camera framing. Want your video to look like noir cinema with moody lighting? Or perhaps a bright, over-saturated 90s music video vibe? Wan 2.2 lets you dial in such stylistic choices in a controlled way. Being open-source, it’s also a boon for learning and experimentation: you can inspect how it works, tweak it, or even fine-tune it further on your own data to suit your brand’s needs.

Mochi 1 is another heavyweight from the open-source world, developed by the team at Genmo. It’s an Apache-licensed model that has garnered attention for high-fidelity motion and strong prompt adherence, closing the gap between what open models can do versus the fancy closed ones. In early evaluations, Mochi 1 performed so well that it started to challenge some of the proprietary systems in terms of quality. For those of us cheering on open AI research, that’s a big deal – it means you don’t necessarily need access to a secret lab model to get top-tier results.

What does Mochi 1 deliver? In essence, it’s very good at translating natural language prompts into coherent, cinematic video sequences. If you describe a scene in detail, Mochi 1 will work hard to faithfully capture your description in the resulting footage. The motion in its videos is polished and deliberate, meaning things move with purpose and according to your prompt, rather than wobbling around aimlessly or introducing random elements. For example, if you prompt “a yellow robot gently watering a rose in a sunlit garden, wind blowing,” Mochi 1 will aim to get the robot, the action of watering, the rose, the gentle motion of wind on plants, and the warm sunlight vibe all into the clip in a harmonious way. The fact that it’s open-source and Apache-licensed is crucial: it allows researchers, creators, and developers to experiment freely. You can integrate Mochi 1 into your own applications without worrying about restrictive licenses, and you can fine-tune it on your own custom video data if you want to personalise its style or improve it further.

Mochi 1 is part of a broader movement to democratise generative video tech. By bridging the quality gap between open and closed systems, it empowers anyone interested in AI video creation to get hands-on. Whether you’re a filmmaker prototyping scenes, a game developer generating cutscenes, or just an enthusiast playing with AI art, Mochi 1 offers a top-notch tool that you can bend to your will. It represents the ethos of open innovation: the cutting edge of AI, but for everyone.

Moving into the realm of real-time generation, LTX-Video is a model from the company Lightricks that’s known for delivering high-quality videos at remarkable speed. It’s built on a diffusion model backbone (specifically a DiT, or Diffusion Transformer) and can generate video faster than you can watch it – achieving 30 frames per second at a resolution of 1216×704. In other words, LTX-Video can pump out frames quicker than standard playback, which opens up possibilities for interactive applications or just drastically shorter wait times when you’re creating content.

LTX-Video is somewhat unique in that it focuses on transforming images into videos, often with optional additional conditioning. This means you can give it a single image (or a short video clip) plus a prompt, and it will generate a new video that smoothly animates that input. For instance, you could provide a still photo of a product and ask LTX-Video to create a 5-second rotating showcase of it, and it will do so with photorealistic detail. Or you might supply a drawing of a character and prompt a short action scene; LTX can use the image as a visual reference and bring it to life.

To accommodate different needs, LTX-Video comes in a few model size variants. There’s a big 13-billion-parameter model when you want the highest fidelity (crisp detail, complex scenes), and there are also distilled and even 8-bit (FP8) versions that run faster and lighter for those with less compute power. There’s even a slim 2-billion-parameter model for lightweight deployments – useful if you wanted to run it on smaller hardware or integrate it into a mobile app or something. The key is that even the smaller versions maintain a balance of speed and realism, so creators can choose the trade-off that works for them.

In terms of output, LTX-Video’s results are smooth and coherent. If you give it a still image, the way it generates motion looks quite intentional and lifelike, as if a videographer had filmed a sequence starting from that image. It keeps textures crisp and subjects stable; you won’t see things melting or warping oddly as the video plays out. Camera dynamics (like zooms or pans that it introduces) feel natural, and overall it avoids that “AI-ish” look – many clips feel as if they could have been real videos at first glance. Because of the extensive and diverse video data it was trained on, LTX-Video has a good grasp of varied content, and you can guide it with detailed English prompts to nail specific styles or actions. For creatives who value speed (say, social media managers needing quick turnaround, or app developers doing on-the-fly video generation for users), LTX-Video is an incredibly practical tool that doesn’t sacrifice quality for the sake of speed.

Last but not least, Marey is the signature AI video generator from a company called Moonvalley. Marey is built with a clear vision: meet the standards of world-class cinematography and integrate seamlessly into professional filmmaking workflows. In many ways, Marey is targeted at filmmakers, studios, and high-end content creators who demand maximum control and fidelity. It’s not just about spitting out a quick video for social media; it’s about generating production-ready footage that could feasibly be used in a film or high-quality commercial.

Marey emphasises precision in every frame and strong temporal consistency. This means if you generate a sequence of shots for a scene, Marey will ensure that the lighting, tone, and visual details remain consistently aligned with the creative vision across all those shots. If the director’s style guide says the colour palette is teal-and-orange with moody lighting, Marey will keep that look steady from the opening frame to the closing frame. It focuses on frame-level control, giving creators the ability to fine-tune exactly how each frame should appear, which is crucial for avoiding any weird blips or artifacts when the footage is scrutinised on a big screen.

Another aspect where Marey shines is maintaining stable subjects and smooth motion no matter how complex the sequence. For example, if you’re generating a multi-shot car chase scene (a typically challenging scenario for AI), Marey would strive to keep the car looking identical in every shot, ensure the motion between frames is perfectly fluid, and maintain the overall pacing and tension that you’d expect if a human edited the sequence. It’s the kind of model that a creator might use to pre-visualise a movie scene: you describe the storyboard, and Marey gives you an approximate video that’s coherent enough to present to producers or team members. And because it aims for such high quality, the outputs are designed to withstand close examination – meaning less of that tell-tale AI fuzz or distortion when you pause the video and look closely.

In sum, Marey is like having a top-notch cinematographer and VFX team inside your computer, focused on realising your exact vision. It’s particularly exciting for those in the film and advertising industries, as it points to a future where AI might generate drafts of scenes or even final footage for creative projects. Moonvalley’s approach with Marey underscores the theme of this whole list: AI video generators are not just tech demos anymore; they’re becoming serious tools across all levels of content creation.

From Hollywood-esque short films to snappy social media ads, AI video generation models are rapidly advancing to make video creation faster and more accessible than ever. Creators in advertising, e-commerce, marketing, cinema, YouTube, and even casual TikTokers are already experimenting with these tools. The benefits are clear: you can iterate ideas quickly, achieve cinematic quality without a physical studio, and explore creative possibilities that might have been out of reach with traditional methods. A marketing team, for instance, can generate a dozen variant video ads tailored to different audiences in the time it used to take to film one – that’s the power of AI video in action.

However, with great power comes great responsibility. The realism these models achieve also brings ethical challenges. It’s now possible to create hyper-realistic scenes that never happened, raising concerns about deepfakes, misleading visuals in ads, or even completely AI-generated influencers. As we embrace these AI video generators, it’s essential to use them responsibly – be transparent when content is AI-made, verify information and context, and follow legal guidelines to avoid misuse. The technology is a double-edged sword: it can empower creativity and efficiency, but it can also deceive if wielded unethically. Society and platforms will likely develop new norms and detection tools to handle this, just as we’ve done for AI-generated images and text.

One thing is certain: to fully harness these AI video tools, creators will need to master the art of the prompt. Crafting the right prompt (often called prompt engineering) is becoming a key skill – it’s the new “lighting and camera angle” in the AI studio, so to speak. Recognising this, many organisations are now investing in scaling up their teams’ prompting skills. (At Digital Bricks, for example, we help organisations and individuals build expertise in prompt engineering so they can get the most out of AI tools like those described above.) By learning how to speak the AI’s language, a creator can better steer the model to produce exactly the desired outcome, whether that’s a heartfelt charity campaign video or a hilarious meme clip.

In conclusion, AI video generators are opening up a new era of content creation. They put a virtual movie studio at anyone’s disposal, limited only by imagination and careful guidance. The ten models we’ve covered are at the forefront of this revolution – each bringing something unique, from Google’s audio-savvy Veo 3 to the open-source prowess of Mochi 1 and the film-industry focus of Marey. As these systems continue to improve, we’re likely to see an explosion of AI-generated videos across the internet. Whether you’re a solo YouTuber, a creative marketer, or a filmmaker, it might be time to add an AI video generator to your toolkit. With a good idea and a well-engineered prompt, who knows what cinematic marvels you might create next?