Generative Watermarking

Generative AI watermarking is an emerging technology that embeds invisible markers into AI-generated content to verify its authenticity and trace its origin. This technique can be applied to text, images, audio, and video content. As AI-generated media becomes harder to distinguish from human-created work, generative watermarking has gained attention as a way to combat misinformation, protect intellectual property, discourage academic cheating, and promote trust in digital content. In this article, we explore how generative watermarking works, its recent developments, the challenges it faces, and why it could become a cornerstone of digital trust in the coming years.

Watermarking techniques aim to alter AI-generated outputs in subtle ways that do not noticeably impact their quality for a human viewer or reader, but are detectable by machines.

Some systems insert a hidden “signature” into AI-written text by steering word choices toward a predetermined set. For example, Google DeepMind’s SynthID for text takes advantage of the many synonyms and word choices available in a language. As the model generates text, it subtly favors specific alternatives that fit naturally but create an AI-specific statistical pattern. The result is a textual fingerprint that is invisible in normal reading but can be identified by a detection tool. A human writer’s word choices do not follow this hidden pattern, so its presence signals AI origin.
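To make the idea of a "predetermined set" concrete, here is a toy sketch in Python. It is not SynthID's actual algorithm: it is a simplified "green list" scheme in which a secret key decides which words count as marked, the writer prefers marked synonyms, and the detector measures how many words are marked. The key, the synonym table, and all function names are assumptions made for illustration.

```python
import hashlib

SECRET_KEY = "demo-key"  # hypothetical secret shared by generator and detector

# Hypothetical synonym groups; each key also appears in its own list.
SYNONYMS = {
    "big": ["big", "large", "huge"],
    "fast": ["fast", "quick", "rapid"],
    "smart": ["smart", "clever", "bright"],
    "choice": ["choice", "option", "pick"],
}


def is_green(word, key=SECRET_KEY):
    """Return True if the keyed hash places this word in the 'green' set."""
    digest = hashlib.sha256(f"{key}:{word.lower()}".encode("utf-8")).digest()
    return digest[0] % 2 == 0  # roughly half of all words end up green


def watermark(words):
    """Swap each word for a green synonym when one is available."""
    marked = []
    for word in words:
        candidates = SYNONYMS.get(word, [word])
        # Keep the original word if no candidate is green.
        marked.append(next((c for c in candidates if is_green(c)), word))
    return marked


def green_fraction(words):
    """Share of words that fall in the green set (0.0 for empty input)."""
    if not words:
        return 0.0
    return sum(is_green(w) for w in words) / len(words)


original = "the big dog made a fast and smart choice".split()
marked = watermark(original)
print(" ".join(marked))
print(green_fraction(original), green_fraction(marked))
```

Unwatermarked text should land near a green fraction of one half, while watermarked text drifts higher. A real system would bias the model's sampling over its full vocabulary rather than swap words from a small table.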

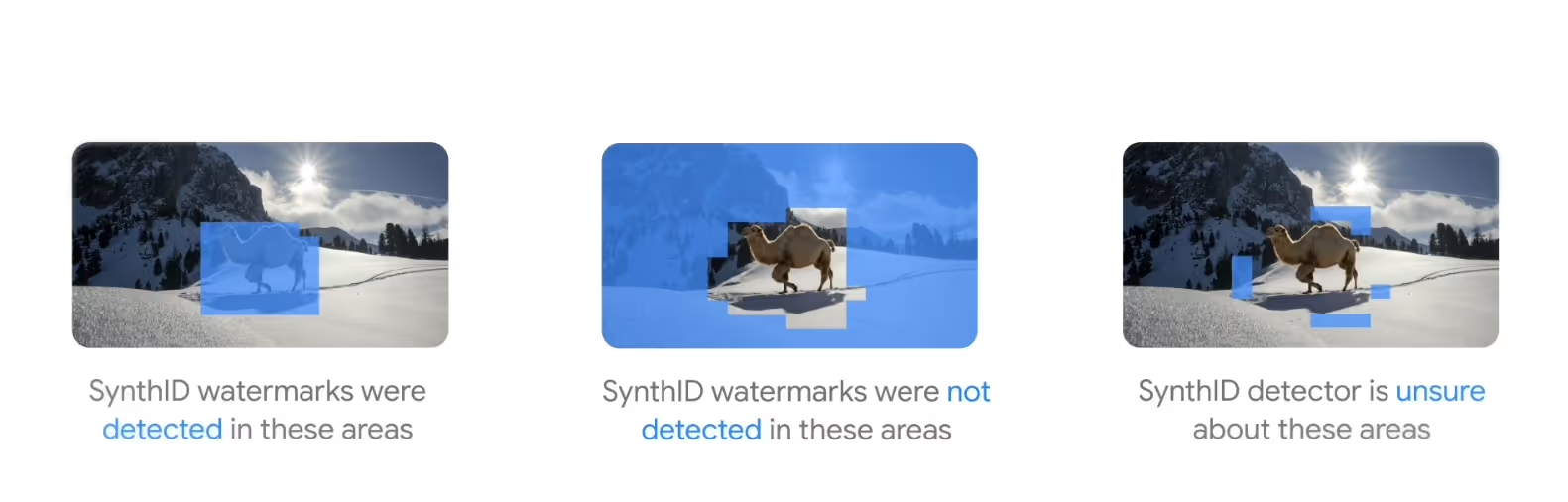

Visual watermarking involves making imperceptible changes at the pixel level of AI-generated images or frames of video. These tiny alterations are designed to survive common edits like resizing or compression. For instance, a watermarking algorithm might subtly adjust the color values of individual pixels. The changes are too slight for the human eye to notice, but an algorithm can detect the unique pattern. Another technique is embedding hidden patterns or bits of data in the image or audio that only a machine can extract. These watermarks act like invisible signatures, allowing content to be traced back to the AI model or source that produced it.
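As a minimal illustration of hiding bits in pixel values, the sketch below uses least-significant-bit (LSB) embedding on a plain list of 8-bit intensities. This is the simplest possible variant and an assumption chosen for clarity: unlike the robust schemes described above, LSB marks generally do not survive compression or resizing. The function names are hypothetical.

```python
def embed_bits(pixels, bits):
    """Write each payload bit into the lowest bit of one pixel value (0-255)."""
    if len(bits) > len(pixels):
        raise ValueError("payload is longer than the number of pixels")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the payload bit.
        marked[i] = (marked[i] & 0xFE) | (bit & 1)
    return marked


def extract_bits(pixels, count):
    """Read back the lowest bit of the first `count` pixels."""
    return [p & 1 for p in pixels[:count]]


pixels = [200, 13, 77, 254, 0, 255, 128, 64]
payload = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_bits(pixels, payload)
print(marked)
print(extract_bits(marked, len(payload)) == payload)
```

Each pixel changes by at most one intensity level, which is why the mark is invisible to the eye. Production systems spread the signal across many pixels or frequency components so that it survives edits.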

By embedding these hidden markers, generative watermarking provides a way to verify if content was created by a human or generated by an AI. The markers can be checked later with the right detection software, helping users and platforms confirm content authenticity.

Generative watermarking has rapidly moved from concept to practice in just a few years. In 2022, as AI models like OpenAI's ChatGPT exploded in popularity, the need for content authentication became evident. Watermarking AI-generated content soon gained traction as a possible solution to growing concerns about deepfakes and misinformation. Under increasing regulatory and public pressure, leading AI companies committed to implementing watermarking in their tools. In 2023, OpenAI, Google, Meta, and others announced plans to incorporate invisible watermarks into the output of their generative AI systems. This marked an industry-wide acknowledgment that some form of content provenance is necessary for responsible AI deployment.

In 2024, technical breakthroughs and wider rollouts occurred. Google DeepMind made headlines by open-sourcing SynthID, its AI watermarking technology, making it more widely available to developers and organizations. Around the same time, Meta introduced VideoSeal, a system for watermarking AI-generated videos on its platforms. These developments signaled that watermarking tools were maturing and becoming accessible for broader use.

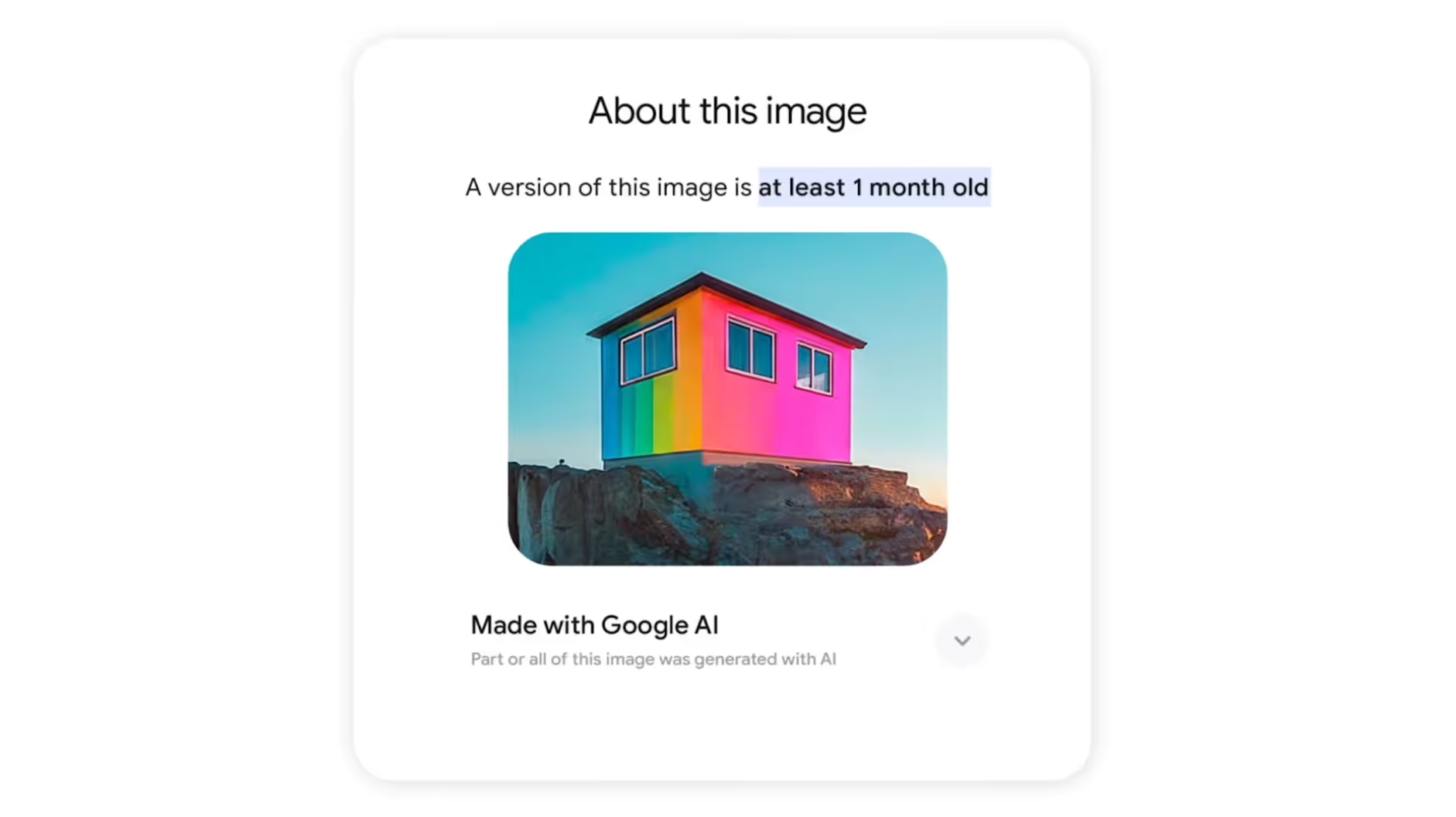

Today, many AI-driven platforms are beginning to integrate watermarking features. Google is embedding SynthID into images, text, and videos generated by its services so that AI-created content can later be identified. Meta has started adding invisible watermarks and metadata tags to AI-generated content on Facebook, Instagram, and Threads. Additionally, AI companies are collaborating through groups like the Partnership on AI to promote what they call "synthetic media transparency," establishing best practices so that content created by AI can be transparently marked and identified.

A determined user can attempt to remove or obscure watermarks. For example, cropping or slightly editing an image might cut out a watermark if it’s only in a corner, and adjusting wording in AI-generated text (or using an AI paraphraser) might break a textual watermark pattern. There are even emerging tools specifically designed to detect and strip out AI watermarks. These simple modifications can sometimes fool current detection systems.

If only some companies or platforms watermark their AI content and others do not, the effectiveness is weakened. Lack of universal standards means one system might not recognize another’s watermark. Uneven adoption across the industry could create gaps that make it easy for watermarked content to be lost or ignored. A patchwork approach may undermine the overall reliability of watermarking in practice.

No technique is foolproof (yet!). There is a risk of falsely labeling real, human-made content as AI-generated (a false positive). Someone might also maliciously claim that a human creator used AI, pointing to a mistaken watermark detection. Such errors could lead to wrongful accusations, for instance a student or journalist being accused of using AI when they did not. These scenarios are especially concerning in academic and professional settings, where they could harm reputations. Ethical guidelines and oversight will be needed to handle disputes and mistakes in detection.
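The false positive risk can be put in rough numbers. The sketch below assumes a hypothetical detector that counts how many words in a text fall into a secret "marked" set, where unwatermarked human text is expected to hit that set about half the time. Under that assumption, and using a normal approximation, a z-score tells us how surprising the count is and how often human text would be flagged by chance. The function names and the 0.5 baseline are illustrative, not taken from any real detector.

```python
import math


def z_score(marked_hits, total_words, p_marked=0.5):
    """Standard deviations by which the hit count exceeds the chance level."""
    expected = total_words * p_marked
    std_dev = math.sqrt(total_words * p_marked * (1 - p_marked))
    return (marked_hits - expected) / std_dev


def false_positive_rate(z):
    """One-sided chance that unwatermarked text scores at least this z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


# 60 marked words out of 100 is only mildly suspicious...
print(z_score(60, 100), false_positive_rate(z_score(60, 100)))
# ...while 75 out of 100 is very unlikely to be chance.
print(z_score(75, 100), false_positive_rate(z_score(75, 100)))
```

Under these assumptions, a threshold of z = 2 would wrongly flag roughly 2 in 100 human-written texts, which is far too many for high-stakes uses such as academic misconduct cases. Raising the threshold cuts false accusations but lets more lightly edited AI text slip through.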

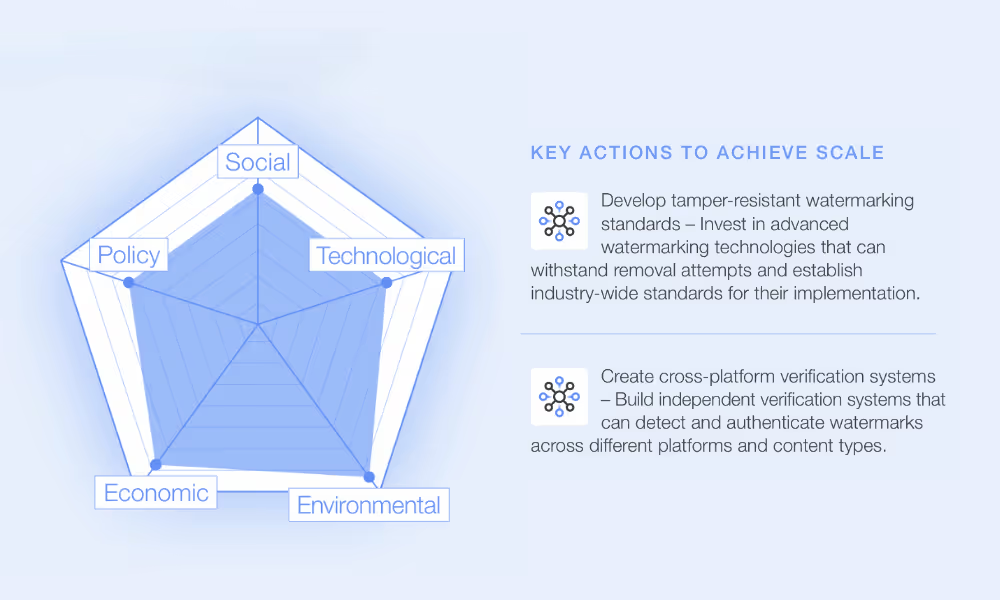

As watermarking methods improve, so do attempts to defeat them. This is an ongoing cat-and-mouse game. Watermarks must become more robust (for example, tamper-resistant and present across various content formats) to stay ahead of removal techniques. Achieving a watermark that is practically impossible to remove without ruining the content is a difficult technical challenge. Some have raised concerns about how watermarking might be misused — for example, oppressive regimes falsely labeling authentic user-generated content as "AI propaganda," or invasive tracking of content origin. Clear policies will be required to prevent misuse of watermarking for censorship or surveillance beyond its intended purpose of transparency.

Addressing these challenges will require not just better algorithms, but also industry-wide cooperation and governance. In fact, for watermarking to be successful, it must be accompanied by sophisticated guidelines and standards for its use. Without common agreements on how and when to watermark content, the system could become confusing or be manipulated.

Recognizing both the promise and pitfalls of generative AI, governments and industry groups have started to develop rules and standards around watermarking:

Some governments are moving faster than others. China has enacted regulations requiring that AI-generated content be clearly marked (watermarked) to inform users of its origin. In the West, the European Union Artificial Intelligence Act includes transparency obligations under which AI-generated content must be identifiable as such. California has also looked into legislation that would mandate labels for AI content in political ads and beyond. These converging regulatory efforts, some of which threaten steep penalties (in some proposals, fines up to tens of millions of dollars or a significant percentage of a company’s revenue), show a growing global interest in content provenance.

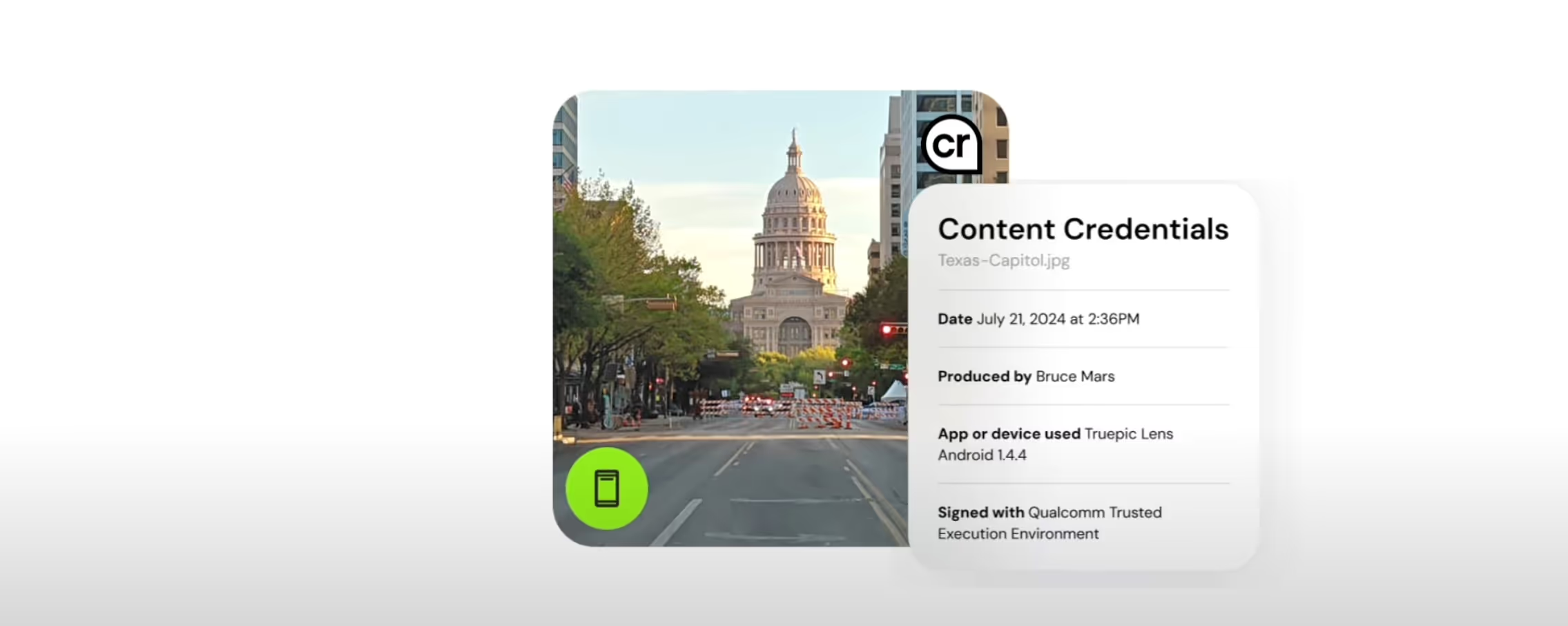

Beyond laws, industry coalitions are forming to set technical standards. The Coalition for Content Provenance and Authenticity, which includes major tech and media companies, is developing specifications for certifying the source and history of media content. Such standards can help create a common language for watermarking and digital content credentials. These collaborative efforts may move faster and be more technically nuanced than government regulations, setting a baseline that everyone can adopt to ensure authenticity.
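To give a feel for what "certifying the source and history" of content can mean in practice, here is a minimal sketch of a tamper-evident provenance record. It is not the coalition's specification, which relies on certificate-based signatures and a much richer manifest format. As a stand-in, this sketch signs a small claim with an HMAC and a shared secret; the field names, the key, and the functions are all hypothetical.

```python
import hashlib
import hmac
import json


def make_record(content, generator, key):
    """Build a signed claim that binds a generator name to the content hash."""
    claim = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_record(content, record, key):
    """Check that the claim is untampered and still matches the content."""
    payload = json.dumps(record["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, record["signature"])
    hash_ok = record["claim"]["sha256"] == hashlib.sha256(content).hexdigest()
    return signature_ok and hash_ok


key = b"hypothetical-signing-key"
image_bytes = b"...raw image bytes..."
record = make_record(image_bytes, "example-image-model", key)

print(verify_record(image_bytes, record, key))            # original content
print(verify_record(image_bytes + b"edit", record, key))  # edited content
```

Unlike an embedded watermark, a record like this travels alongside the content as metadata, so it is lost if the metadata is stripped. That is one reason the two approaches are often used together.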

These moves indicate that generative watermarking is more than just an optional feature; it is increasingly seen as essential infrastructure for the digital world. A flourishing ecosystem of start-ups has also appeared, each offering different technological approaches to watermarking AI content. This innovation push further underscores that watermarking is viewed as a cornerstone of responsible AI deployment, balancing the rapid innovation of AI with the need for accountability and authenticity.

Looking ahead, generative watermarking could evolve from a handy safeguard into a fundamental layer of digital trust on the internet. As AI-generated text, images, and videos become increasingly common, having a reliable way to verify content origin will be crucial. Watermarks embedded in content might form the foundation of a global verification ecosystem that helps anyone quickly distinguish between human-made and machine-made material.

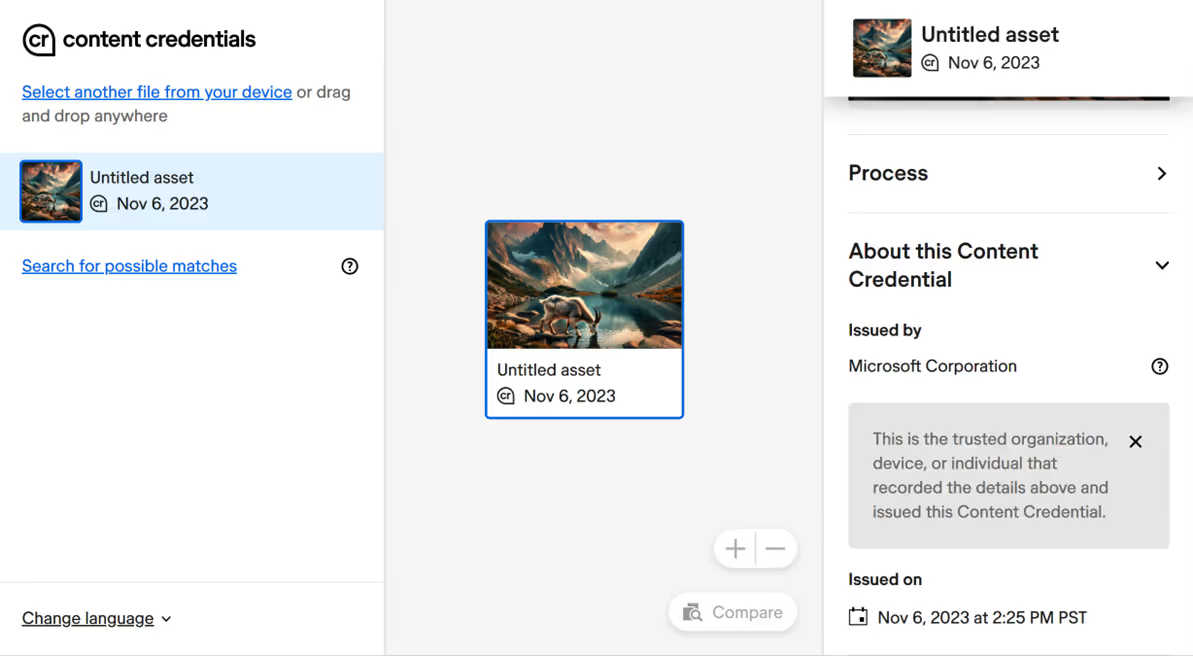

In the media and creative industries, we may see significant transformation. If regulations in multiple major markets (like the US, China, and the EU) require AI content disclosure, compliance will become mandatory for big publishers and platforms. Major tech players are already positioning themselves for this future. For instance, Adobe’s Content Credentials feature, TikTok’s experiments with AI-generated content labels, and Google’s SynthID watermarking are early attempts to set the norms and capture market leadership in content authenticity. It’s the beginning of a new way to govern digital content. Companies that adopt watermarking early and transparently could gain a competitive edge as trusted platforms, while those that lag behind risk reputational damage or even exclusion if their content comes to be seen as suspect.

Nations and organizations leading the development of watermarking standards will effectively set the rules of the emerging synthetic media economy. In other words, they will influence how AI-generated content is treated, verified, and monetized worldwide. Much like early internet protocols defined the Web, early watermarking protocols and practices could define how we handle AI content for decades. This leadership could confer advantages, whereas non-compliant creators and platforms might find themselves marginalized in a future where trust is a key currency.

Beyond the creative fields, the implications of widespread watermarking extend into legal and financial domains. Courts might eventually accept watermarked content as evidence of authenticity or origin in intellectual property disputes and defamation cases. For example, if an artist’s work is watermarked, it could help prove whether a certain image was an original or an AI-generated copy in an IP lawsuit. Similarly, insurance companies might develop new policies or coverage tiers based on content authenticity. Imagine insurers offering lower premiums or specific coverage if a company can demonstrate that all its digital content is verified and watermarked, reducing the risk of deepfake-related fraud or reputation damage. For individual creators and professionals, having verifiable proof of one’s own work could become an asset. In a market flooded with synthetic media, a photographer, writer, or designer who can prove their work is human-made (or properly flagged as AI-assisted) may command premium prices. Authenticated content could become a selling point, much like organic food labeling in supermarkets: it’s all about assured origin and quality.

Technology alone will not solve the problem of authenticating digital content. Building a robust generative watermarking ecosystem also depends on people’s awareness and oversight:

Educating content creators and the public about AI-generated media is crucial. Users should know that tools exist to verify content and should learn to look for authenticity cues. For instance, a journalist or a teacher might use a watermark detection tool to check if an essay was written by AI. Improving general media literacy helps everyone understand what generative AI can do and why verification is important. When people are informed, they are more likely to demand and value authenticity signals like watermarks, creating a virtuous cycle that encourages adoption.

Even with sophisticated watermarking, human oversight remains important. Moderators, editors, or platform curators will need to enforce policies around AI content. For example, if a platform requires AI-generated images to be watermarked, there must be human review and consequences when someone tries to pass off AI content as human-made without disclosure. Humans are also needed to interpret borderline cases and handle disputes—technology can flag content, but people must decide what to do with that information in context. A combination of automated detection and human judgment will yield the best results in maintaining trust.

Major social media platforms have a huge influence on how content spreads, and they have sometimes been slow to adopt new standards. However, with the right societal and regulatory pressure, these platforms will likely embrace generative watermarking over time. We are already seeing early steps: platforms like Twitter (X), Facebook, and TikTok have been urged to label AI-generated posts or deepfake videos. As public awareness grows and policies solidify, it’s reasonable to expect that social networks will yield to that pressure and integrate robust watermarking and labeling systems. In the long run, no large platform will want to be known as a haven for untraceable AI-driven misinformation. By adopting watermarking and transparency measures, social media companies can show they are contributing to a healthier information ecosystem, meeting user expectations, and complying with the law.

Generative AI watermarking represents more than just a technical trick for tagging content; it is a reimagining of how we establish trust in our increasingly synthetic digital landscape. By embedding a layer of authenticity into the content we consume, watermarking can help ensure that the rapid advancements in AI do not come at the cost of truth and transparency. The coming years will be critical for this technology: its success will depend on collaboration between tech companies, policymakers, creators, and communities. Together, they must balance innovation with safeguards, ensuring that as AI-generated content becomes ubiquitous, our ability to verify and trust what we see and hear keeps pace.

In the end, generative watermarking could become as commonplace and essential as nutrition labels on food or SSL locks in web browsers, a familiar assurance that what we’re consuming is safe and as advertised. By combining advanced watermarking techniques, supportive policies, and an informed public, we can build a digital content ecosystem where creativity flourishes hand-in-hand with credibility and accountability.