What Is Model Collapse?

Discover what “model collapse” means in generative AI, why it’s a growing concern, and how organizations (with help from platforms like Microsoft’s Copilot Studio and Azure AI Foundry) can prevent AI models from degrading over time.

Model collapse is a phenomenon where an AI model gradually loses its ability to generate diverse, accurate outputs because it is trained on data produced by other AI models (or by earlier versions of itself) rather than on original human-generated data. Over time, the model starts to “forget” the true complexity of real-world data and instead produces homogenized results. In the research community, this self-degrading feedback loop is sometimes formally called model autophagy disorder (MAD), and more colloquially, “AI cannibalism.” These terms paint a vivid picture: the AI is essentially feeding on its own synthetic outputs, and, like a photocopy of a photocopy, each generation becomes a blurrier version of the last.

To visualize it, imagine copying a document, then copying the copy, and so on. Eventually, the text becomes illegible. Similarly, a collapsed model’s outputs become bland and error-prone, having lost the richness and nuance of the original data it once learned from. In generative AI (like large language models or image generators), model collapse might mean a chatbot starts giving very repetitive, generic answers or an image model produces look-alike images regardless of the prompt. The “snake eating its own tail” analogy (an ouroboros) is often used to describe this problem – the AI ends up consuming its own prior work, to its detriment.

In short, model collapse is AI’s version of an echo chamber: if a model trains mostly on content that other models created, it gets stuck in a loop of reinforcing its own mistakes and biases. This leads to a noticeable decline in output quality over time. Now, let’s explore how and why this happens.

Model collapse usually unfolds gradually through recursive training loops and data contamination in the AI’s learning process. It typically happens in these scenarios:

AI Trains on AI-Generated Data: In the beginning, AI models are trained on large datasets of human-created text, images, code, etc. The trouble starts as more AI-generated content (from models like ChatGPT, DALL-E, etc.) floods the internet and other data sources. Future models might unintentionally ingest this AI-produced data as if it were “real” data. When an AI model is trained on the outputs of another AI (or even its own outputs), it’s like making a copy of a copy. The subtle errors or biases in the first generation get amplified in the next. For example, if Model A writes an article full of slightly skewed facts and Model B later trains on a dataset that includes that article, Model B will learn those skewed “facts” as if they were true – and might even exaggerate them further. Each iteration can drift further from reality.
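The “copy of a copy” loop can be simulated in a few lines. This is a minimal toy sketch under a strong simplifying assumption: the “model” is just a Gaussian whose mean and standard deviation are refitted by maximum likelihood, and every generation is trained only on samples drawn from the previous generation. The function name and sample sizes are illustrative, not taken from any particular study.

```python
import random
import statistics


def recursive_training(generations: int, samples_per_gen: int, seed: int = 0) -> list[float]:
    """Toy 'copy of a copy' loop: each generation's model is a Gaussian fitted
    only to samples drawn from the previous generation's Gaussian."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 matches the "real" data distribution N(0, 1)
    history = [sigma]
    for _ in range(generations):
        # The next model sees only synthetic data produced by the current model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(samples_per_gen)]
        # "Training" here is a maximum-likelihood fit of the mean and spread.
        mu = statistics.fmean(synthetic)
        sigma = statistics.pstdev(synthetic)
        history.append(sigma)
    return history


stds = recursive_training(generations=200, samples_per_gen=10)
for gen in (0, 10, 50, 100, 200):
    print(f"generation {gen:3d}: std = {stds[gen]:.2e}")
```

With only ten samples per generation, the fitted standard deviation typically shrinks by orders of magnitude over a couple of hundred generations, so the model ends up producing nearly identical values; the exact numbers depend on the random seed.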

Loss of Data Diversity: AI-generated data tends to be more average, or biased toward what the model already knows. When models start learning from such synthetic data, they over-emphasize common patterns and forget outliers or rare events. Essentially, the training data’s variety and nuance get lost. Imagine a language model that, in an early generation, sees both formal news articles and casual social media slang. If in a later training round it mostly sees AI-written text that uses a middle-of-the-road style, it may start dropping both the high-formality register and the extreme slang. The model’s “knowledge distribution” narrows. Over time, this leads to very homogenized outputs: everything it writes or creates starts looking the same. The model might also become biased, because it has lost the balance that came from real-world diversity. This is the photocopy effect again: each copy loses a bit of information, and the uncommon details are the first to go.
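The same mechanism erases rare cases. In this hypothetical sketch, a “model” is just a table of category frequencies (think writing styles), re-estimated each generation from a finite sample of the previous generation’s output. Any category that happens not to be sampled gets probability zero and can never come back, so the long tail only ever shrinks.

```python
import random
from collections import Counter


def surviving_categories(weights: list[float], sample_size: int,
                         generations: int, seed: int = 0) -> list[int]:
    """Each generation re-estimates category frequencies from a finite sample of
    the previous generation's output. Returns, per generation, how many
    categories still have non-zero probability."""
    rng = random.Random(seed)
    categories = list(range(len(weights)))
    probs = list(weights)
    alive = [sum(p > 0 for p in probs)]
    for _ in range(generations):
        sample = rng.choices(categories, weights=probs, k=sample_size)
        counts = Counter(sample)
        # The new model only knows what it saw: unseen categories drop to zero
        # probability and can never be generated again.
        probs = [counts[c] / sample_size for c in categories]
        alive.append(sum(p > 0 for p in probs))
    return alive


# 50 hypothetical "styles" with a long-tailed, Zipf-like distribution.
zipf_weights = [1 / rank for rank in range(1, 51)]
history = surviving_categories(zipf_weights, sample_size=200, generations=50)
print("styles alive at generations 0, 10, 50:", history[0], history[10], history[-1])
```

The count of surviving styles can only stay flat or fall, and the rare styles at the end of the tail are usually the first to disappear.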

Error Accumulation: Along with losing diversity, any small errors introduced by AI in one round can snowball. A good way to think about this is a game of telephone: if one AI generates slightly incorrect information and another AI trains on it, the second AI might amplify that error or combine it with another. After many generations, you can end up with significant misinformation or gibberish that no human ever intended. This feedback loop means the AI starts reinforcing its own mistakes. It might also reinforce its own style to the exclusion of others, which is why a collapsing model’s output can feel oddly samey and detached from reality.
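Under a deliberately simple assumption (each generation independently garbles any still-correct fact with probability p, and nothing ever repairs it), the share of correct facts after k generations is (1 - p)^k. With p = 0.05, only about 60% of facts survive ten generations. The sketch below checks that closed form against a small simulation; both function names are hypothetical.

```python
import random


def intact_fraction_closed_form(p: float, k: int) -> float:
    """Share of facts still correct after k generations, assuming each generation
    independently garbles any correct fact with probability p and never repairs one."""
    return (1 - p) ** k


def intact_fraction_simulated(p: float, k: int, n_facts: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo version of the same 'game of telephone'."""
    rng = random.Random(seed)
    intact = [True] * n_facts
    for _ in range(k):
        # A fact that is already wrong stays wrong; a correct one may be garbled now.
        intact = [ok and rng.random() >= p for ok in intact]
    return sum(intact) / n_facts


for k in (1, 5, 10, 20):
    print(k, round(intact_fraction_closed_form(0.05, k), 3),
          round(intact_fraction_simulated(0.05, k), 3))
```

Real errors are neither independent nor permanent in general, so treat this as an illustration of how small per-generation error rates compound, not as a prediction.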

Lack of Fresh Training Data: Models don’t collapse instantly; it often requires repeated rounds of training without grounding in fresh, real data. If an organization continually fine-tunes a model on its previous outputs (without adding new human-generated examples or resets), the model moves further away from the ground truth. In technical terms, the model’s approximation of the true data distribution deteriorates. This is why maintaining a healthy influx of original content is vital for long-term AI learning. When that practice is neglected, model drift and collapse set in.
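A toy simulation shows why fresh data matters. Under a strong simplifying assumption, the “model” here is a Gaussian refitted by maximum likelihood each generation, trained on a mix of newly drawn real samples and synthetic samples from the previous fit. The `final_std` function, the `real_fraction` parameter, and the sample sizes are hypothetical choices for illustration.

```python
import random
import statistics


def final_std(generations: int, samples_per_gen: int, real_fraction: float,
              seed: int = 0) -> float:
    """Refit a Gaussian each generation on a mix of fresh real data from N(0, 1)
    and synthetic data sampled from the previous generation's fit."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    n_real = round(samples_per_gen * real_fraction)
    for _ in range(generations):
        real = [rng.gauss(0.0, 1.0) for _ in range(n_real)]  # fresh ground truth
        synthetic = [rng.gauss(mu, sigma) for _ in range(samples_per_gen - n_real)]
        data = real + synthetic
        mu, sigma = statistics.fmean(data), statistics.pstdev(data)
    return sigma


print("synthetic only:  std =", final_std(1000, 40, real_fraction=0.0))
print("50% fresh data:  std =", final_std(1000, 40, real_fraction=0.5))
```

In runs like this, the synthetic-only loop typically collapses toward zero spread, while mixing in fresh data every generation keeps the fitted standard deviation close to the true value of 1.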

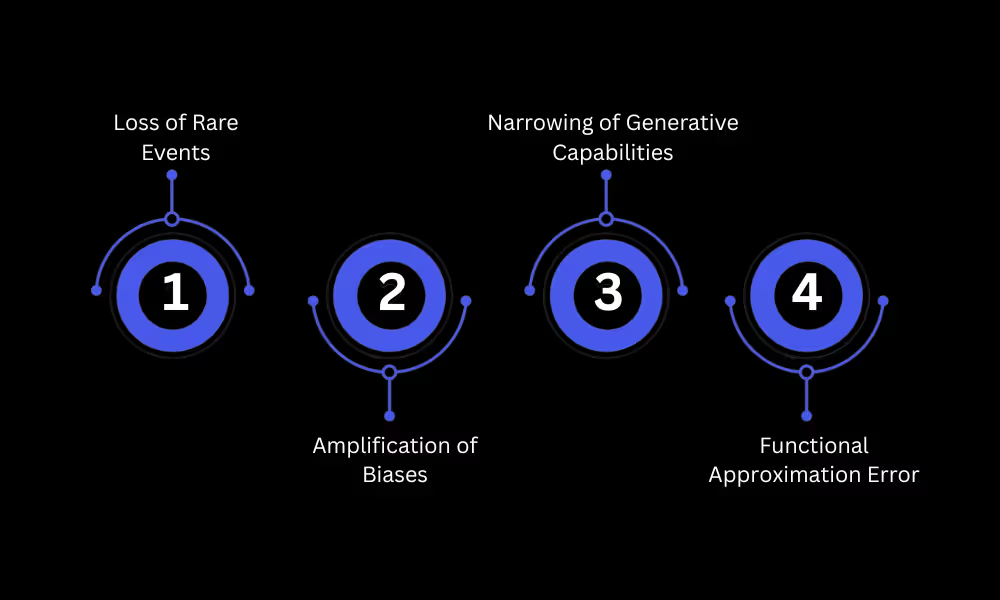

Early signs of model collapse include the AI starting to forget rare or nuanced facts, or an image model missing unique details it once could generate. You might notice an uptick in generic responses, reduced creativity, and outputs that feel “averaged out.” In later stages, the model’s answers or creations might be full of repetitions, catchphrases, or bland defaults. The outputs also often have low variance – meaning very little change from one response to another, even for different prompts. If left unchecked, the AI could converge on delivering the same dull answer or the same trivial image every time. Essentially, the AI’s imagination has starved.
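Low variance and repetition can be tracked with a simple lexical-diversity score. The sketch below computes distinct-n (the share of unique word n-grams across a batch of responses), a common diversity metric. The example responses are made up, and a real deployment would compare scores over time on a fixed set of prompts rather than judge a single batch.

```python
def distinct_n(responses: list[str], n: int = 2) -> float:
    """Share of unique word n-grams across a batch of responses (1.0 = no repeats).
    A value that keeps falling from one evaluation to the next is a warning sign."""
    ngrams = []
    for text in responses:
        tokens = text.lower().split()
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


healthy = ["the invoice is overdue by ten days",
           "your refund was issued this morning",
           "please reset the router and try again"]
collapsed = ["thank you for reaching out",
             "thank you for reaching out",
             "thank you for reaching out today"]
print(distinct_n(healthy))    # 1.0
print(distinct_n(collapsed))  # 5 unique of 13 bigrams, about 0.385
```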

Model collapse isn’t just a theoretical quirk – it has serious implications for both AI practitioners and organizations using AI, as well as for the broader society and knowledge ecosystem. Here’s why it’s a big deal:

Degraded Performance and Utility: For businesses and users, an AI model that collapses becomes far less useful. If you deployed an AI assistant or a generative model in your product, you expect it to continue providing value (accurate information, creative outputs, etc.). If over time it starts producing nonsense or the same cookie-cutter answer, that’s a failure. In critical applications – say an AI model that helps with medical diagnoses or financial forecasting – this degradation could lead to costly errors or even dangerous outcomes. IT leaders need to be confident that their AI investments won’t deteriorate into liabilities. A collapsed model could, for instance, overlook a rare but important pattern (maybe a warning sign of a cyberattack or a unique customer requirement) because it “forgot” how to recognize anything outside the ordinary. The loss of reliability directly undermines the ROI of using AI.

Bias and Fairness Issues: As models collapse and focus only on the most common patterns, they tend to ignore minority cases and edge scenarios. In a social context, this could exacerbate bias. For example, if an AI writing assistant gradually “forgets” less common dialects or viewpoints because it hasn’t seen them in the AI-generated content it’s trained on, it might produce outputs that marginalize those voices. Over time, the AI could reinforce majority perspectives and stereotypes and fail to represent diversity. This is a concern for AI ethics and fairness. In other words, model collapse can make an AI less fair and inclusive, because it effectively tunes out the long tail of data that contains many minority and nuanced perspectives.

Knowledge Homogenization: On a larger scale, consider what happens if many AI models out there start collapsing. Since generative AIs are now writing articles, creating images, and generally contributing content, a feedback loop emerges: models train on content produced by previous models, and if each generation is a bit more distorted than the last, the entire pool of available information could degrade over time. This threatens the global knowledge ecosystem. Instead of AI being a tool to enhance human knowledge, it could start to pollute the data pool with errors and blandness. Some researchers warn of a future where the internet (a primary source of training data) becomes so saturated with AI-generated text and images that it loses authenticity. In such a scenario, it becomes harder for AI, or even humans, to find the original signal in the noise. In effect, humanity’s recorded knowledge could spiral into an “AI-generated fog” where truth and creativity are harder to come by. This prospect is sometimes described as a closed loop of misinformation and monotony, which is definitely something we want to avoid!

Loss of User Trust: If people notice that an AI service is getting worse (less accurate or just repeating itself), trust in that service erodes. For companies offering AI-powered products, trust is paramount. An AI chatbot that once impressed users with witty or insightful answers, but later only gives robotic, unhelpful responses, will quickly drive users away. For IT leaders championing AI projects internally, model collapse can be a nightmare scenario: you roll out a successful AI system, and months later stakeholders ask why it’s behaving so poorly. It reflects badly on the decision to use that AI in the first place. Therefore, being aware of model collapse is also about maintaining credibility and satisfaction with AI solutions over their lifecycle.

Long-Term Innovation Stagnation: From a broader innovation standpoint, if AI researchers and engineers had to constantly battle collapsing models, progress in AI capabilities could stall. Model collapse, if widespread, means each new generation of AI might actually perform worse than the one before. Typically we expect AI to improve with more data and training, not worsen. A collapse scenario flips that and could slow the overall advancement in the field. It’s a bit of a “black swan” risk for the AI industry: everyone is racing to build bigger and better models, but if we collectively taint our data sources, we might hit a quality wall. Fortunately, now that this risk is known, many smart minds are working on preventing it (more on that soon).

In summary, model collapse matters because it endangers the quality, fairness, and reliability of AI systems. For those of us in AI consultancy and development, it’s become a key topic to monitor. After all, AI is now woven into business and daily life, from customer service bots to decision support systems – a widespread collapse would undermine a lot of that progress. Next, let’s look specifically at how one major AI player, Microsoft, is addressing this concern in their tools and platforms.

The challenge of model collapse has not gone unnoticed by industry leaders. In fact, Microsoft – a company deeply invested in generative AI across products like Azure OpenAI, GitHub Copilot, and Microsoft 365 Copilot – has been building features and guidelines to mitigate this very problem. Two initiatives in particular provide a “Microsoft lens” on model collapse: Azure AI Foundry and Copilot Studio.

Azure AI Foundry is Microsoft’s unified platform for designing, customizing, and managing AI models and agents at enterprise scale. Essentially, it’s a suite that lets businesses develop their own generative AI solutions (from chatbots to image generators) using both Microsoft’s models and their own data. Why is this relevant to model collapse? Because Azure AI Foundry emphasizes using high-quality data and a diverse set of models. As of 2025, Azure AI Foundry offers access to over 1,900 AI models from Microsoft and partners. This huge model catalog means organizations can choose the right model for the right task – and even switch or ensemble models – rather than leaning on a single model’s outputs over and over. In other words, it helps avoid putting all your eggs in one AI basket. If one model starts showing limitations, you can augment it with another. By design, this reduces the chance of a narrow feedback loop forming.

Moreover, Azure AI Foundry supports multi-agent AI systems, where multiple AI agents can work together or in sequence. This is enabled through technologies like the Azure AI Foundry Agent Service and open protocols for agent collaboration. The benefit here is that when you have several specialized agents (which could be powered by different models) handling a complex task, the system can cross-check and incorporate information from various sources. It’s far less likely to just regurgitate one model’s quirks. Think of it as having a panel of AI “experts” rather than a single know-it-all: you get a more balanced outcome. This approach can keep generative AI outputs on track and coherent, avoiding the collapse scenario where everything converges into one style or one error.
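The cross-checking idea can be sketched, in a heavily simplified form, as a quorum vote across several agents. The agents here are plain Python callables standing in for real agents; this is not the Azure AI Foundry Agent Service API, and `panel_answer` and the `quorum` threshold are hypothetical.

```python
from collections import Counter
from typing import Callable, Optional

# Hypothetical stand-in for an agent: any function from a question to an answer.
Agent = Callable[[str], str]


def panel_answer(question: str, agents: list[Agent], quorum: float = 0.5) -> Optional[str]:
    """Ask several independently built agents and accept an answer only if more
    than `quorum` of them agree; otherwise return None so a human can review it."""
    if not agents:
        raise ValueError("need at least one agent")
    answers = [agent(question).strip().lower() for agent in agents]
    best, votes = Counter(answers).most_common(1)[0]
    return best if votes / len(answers) > quorum else None


agreeing = [lambda q: "Paris", lambda q: "paris ", lambda q: "Lyon"]
split = [lambda q: "A", lambda q: "B", lambda q: "C"]
print(panel_answer("What is the capital of France?", agreeing))  # paris
print(panel_answer("Which option?", split))                      # None
```

Returning `None` on disagreement, rather than picking an answer anyway, is the design choice that matters: disagreement among independently built agents is a useful signal to route the question to a person.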

Copilot Studio, on the other hand, is Microsoft’s toolkit for building custom AI copilots and virtual agents. A “copilot” in Microsoft’s terminology is an AI assistant that helps with specific tasks (like coding, content generation, scheduling, etc.) within an application or workflow. Copilot Studio allows developers and organizations to create these assistants with custom knowledge bases and task definitions. From a model collapse perspective, what’s important is that Copilot Studio encourages grounding the AI with reliable data and human-in-the-loop design. For example, if you build a customer service chatbot via Copilot Studio, you’ll likely connect it to your company’s knowledge base, FAQs, or documentation. This means the AI isn’t freewheeling purely on what it learned from some generic internet training – it’s constantly referring to vetted information. That reduces the risk of it drifting off into nonsense, because it has a tether to truth.

Microsoft also bakes in guardrails and monitoring tools with Copilot Studio. According to recent announcements, Copilot Studio provides features like content moderation, usage monitoring, and feedback loops where human developers can review what the AI is doing. These guardrails act like an early warning system for model collapse. For instance, if an agent starts giving answers that are too repetitive or irrelevant, developers can catch it through monitoring and intervene, for example by refreshing its knowledge sources, fine-tuning on fresh data, or adjusting its instructions. In essence, Microsoft’s platform is saying, “Don’t just set your AI loose and hope for the best: watch it, guide it, and keep it fed with the right data.” This philosophy aligns perfectly with avoiding collapse.

Another angle: Microsoft Research is actively studying ways to use AI without causing collapse. They’ve explored generating synthetic data with multiple AI “agents” to keep quality high. The idea is if you must use AI-generated data to train a model, do it in a smart way: have agents critique and improve each other’s outputs, use tools (like web search or calculators) to inject real facts, and filter the generated data heavily. Microsoft’s Orca research (and the AgentInstruct approach) is a good example – it’s about producing synthetic training data that’s as diverse and rich as human-generated content, so that using it won’t collapse the model. All of these efforts show that Microsoft is well aware of the model collapse issue and is engineering enterprise AI solutions to be resilient against it.
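The “filter heavily” step can be sketched as two gates: a critic score and near-duplicate removal. This is a generic illustration, not Microsoft’s AgentInstruct pipeline; `toy_critic` is a hypothetical stand-in for a critic model, and the thresholds and token-set Jaccard similarity are assumptions chosen for simplicity.

```python
from typing import Callable


def filter_synthetic(candidates: list[str], score: Callable[[str], float],
                     min_score: float = 0.7, max_overlap: float = 0.8) -> list[str]:
    """Keep synthetic examples that pass a critic's quality score and are not
    near-duplicates (token-set Jaccard similarity) of an example already kept."""
    kept: list[str] = []
    kept_tokens: list[set[str]] = []
    for text in candidates:
        tokens = set(text.lower().split())
        if not tokens or score(text) < min_score:
            continue  # empty or rejected by the critic
        if any(len(tokens & other) / len(tokens | other) > max_overlap
               for other in kept_tokens):
            continue  # too similar to something we already have
        kept.append(text)
        kept_tokens.append(tokens)
    return kept


def toy_critic(text: str) -> float:
    """Hypothetical critic: rejects boilerplate refusals and very short items."""
    if "as an ai" in text.lower() or len(text.split()) < 4:
        return 0.0
    return 1.0


candidates = [
    "Explain how compound interest grows a savings balance",
    "explain HOW compound interest grows a savings balance",
    "As an AI I cannot help with that",
    "Ok",
    "Describe three causes of soil erosion on farmland",
]
print(filter_synthetic(candidates, toy_critic))
```

Only the first and last candidates survive: the second is a near-copy of the first, and the other two are rejected by the critic.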

For IT leaders evaluating platforms like Azure AI, this is encouraging. It means you can adopt Microsoft’s AI services and still adhere to best practices that an AI consultancy or data science team would recommend to avoid collapse. By leveraging features of Azure AI Foundry and Copilot Studio, organizations can continue their AI learning and development journeys with more confidence that their models will stay healthy over time. Next, let’s broaden back out and discuss general strategies anyone working with AI should use to prevent model collapse.

Preventing model collapse requires a combination of good data discipline, technical safeguards, and human oversight.

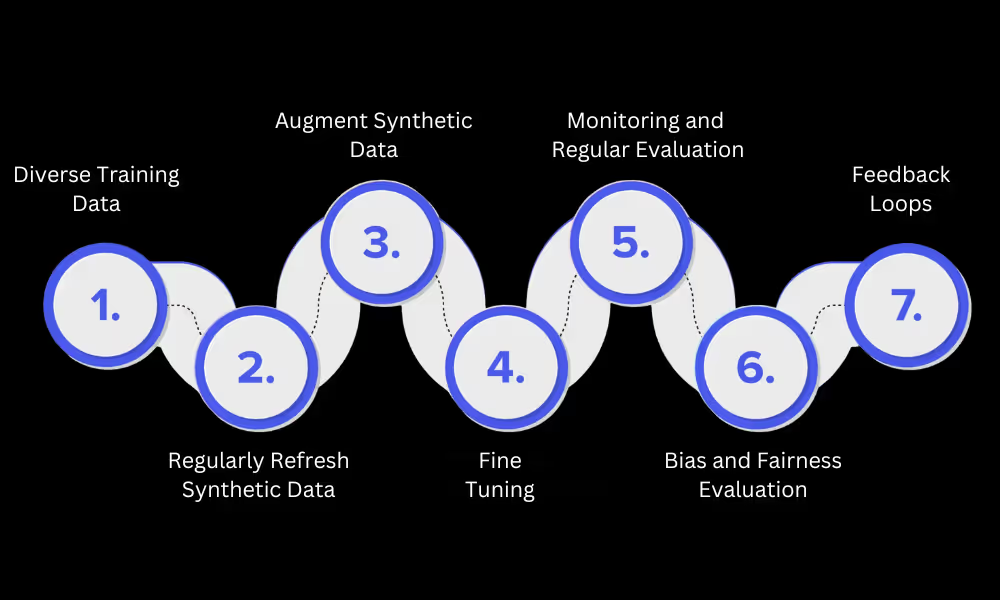

Train on High-Quality, Diverse Data: The simplest way to avoid a “photocopy effect” is to always include plenty of original human-generated data in your training mix. Don’t rely solely on synthetic or AI-generated content. If you’re fine-tuning a model, use real user data, curated datasets, or data from reliable domain sources. Diversity matters too: make sure the training data spans different styles, viewpoints, and edge cases. This helps the model maintain a broad understanding and not narrow in on one pattern. Some organizations even implement data filters to exclude AI-generated text from their training corpus (or at least label it) so that the team can tell which training content is human-written and which is AI-generated.
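A provenance-aware data mix can be sketched as follows, assuming every record carries a source label. `Record`, `build_training_mix`, and the 20% default cap are illustrative assumptions, not a recommended ratio.

```python
import math
import random
from dataclasses import dataclass
from fractions import Fraction


@dataclass
class Record:
    text: str
    source: str  # assumed provenance label: "human", "synthetic", or anything else = unknown


def build_training_mix(records: list[Record], max_synthetic_share: float = 0.2,
                       seed: int = 0) -> list[Record]:
    """Keep every human-written record, add synthetic records only up to
    `max_synthetic_share` of the final mix, and drop records of unknown origin."""
    if not 0 <= max_synthetic_share < 1:
        raise ValueError("max_synthetic_share must be in [0, 1)")
    human = [r for r in records if r.source == "human"]
    synthetic = [r for r in records if r.source == "synthetic"]
    # s / (h + s) <= share  is equivalent to  s <= share * h / (1 - share)
    share = Fraction(str(max_synthetic_share))  # exact arithmetic avoids rounding errors
    cap = math.floor(share * len(human) / (1 - share))
    rng = random.Random(seed)
    mix = human + rng.sample(synthetic, min(cap, len(synthetic)))
    rng.shuffle(mix)
    return mix


records = ([Record(f"human {i}", "human") for i in range(80)]
           + [Record(f"synthetic {i}", "synthetic") for i in range(100)]
           + [Record("scraped page, origin unknown", "unknown")])
mix = build_training_mix(records, max_synthetic_share=0.2)
synthetic_share = sum(r.source == "synthetic" for r in mix) / len(mix)
print(len(mix), synthetic_share)  # 100 0.2
```

Records with unknown provenance are dropped rather than guessed at, which keeps the synthetic share honest.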

Refresh and Retrain with New Data: Staleness can lead to collapse. Plan to update your models periodically with fresh data from the real world. This could mean scheduling regular retraining with data from the last few months, or fine-tuning on new examples of human feedback. By injecting new information, especially as the world changes, you prevent the model from endlessly recycling the same learned content. For example, a company might retrain its internal GPT-based model every quarter with a batch of recent genuine customer queries and answers provided by human staff. That way the model stays current and doesn’t drift into an echo chamber of its own making.
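A scheduled refresh needs a rule for which data goes into each run. This minimal sketch picks human-answered records from a recent window; the record fields (`answered_by`, `created`), the function name, and the 90-day window are assumptions for illustration.

```python
from datetime import date, timedelta


def recent_human_examples(records: list[dict], today: date, window_days: int = 90) -> list[dict]:
    """Select human-answered records from the last `window_days` days for the
    next scheduled fine-tuning run. The field names are assumptions."""
    cutoff = today - timedelta(days=window_days)
    return [r for r in records
            if r["answered_by"] == "human" and r["created"] >= cutoff]


support_log = [
    {"query": "reset password", "answered_by": "human", "created": date(2024, 11, 15)},
    {"query": "change plan", "answered_by": "model", "created": date(2025, 3, 2)},
    {"query": "refund status", "answered_by": "human", "created": date(2025, 3, 20)},
]
batch = recent_human_examples(support_log, today=date(2025, 3, 31))
print([r["query"] for r in batch])  # ['refund status']
```

The model-answered record is excluded even though it is recent, so the refresh does not quietly feed the model its own outputs.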

Human-in-the-Loop Monitoring: AI shouldn’t be a fire-and-forget solution. Establish processes where experts regularly evaluate the model’s outputs. This could be as formal as quarterly model performance audits, or as simple as having staff who use the AI flag when it gives a poor result. By catching early signs of model collapse (like the AI starting to get one-track in responses or making odd errors), you can intervene early. Human oversight is a powerful tool: it can provide corrective feedback signals to the model (through reinforcement learning from human feedback, for instance) or prompt a retraining with more varied data. Many AI consulting firms recommend setting up alert systems for drift – e.g., if certain output metrics (like diversity or error rate) degrade beyond a threshold, the system notifies engineers to take action.
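A threshold-based drift alert can be as small as the sketch below. It assumes metrics where higher is better (track accuracy rather than error rate, for example), and the metric names, baseline values, and 15% tolerance are hypothetical.

```python
def drift_alerts(baseline: dict[str, float], current: dict[str, float],
                 max_relative_drop: float = 0.15) -> list[str]:
    """Flag metrics (higher = better) that fell more than `max_relative_drop`
    below their baseline, plus any metric missing from the current run."""
    alerts = []
    for name, base in baseline.items():
        value = current.get(name)
        if value is None:
            alerts.append(f"{name}: missing from current evaluation")
        elif base > 0 and (base - value) / base > max_relative_drop:
            alerts.append(f"{name}: {base:.2f} -> {value:.2f}")
    return alerts


baseline = {"lexical_diversity": 0.62, "answer_accuracy": 0.91}
this_week = {"lexical_diversity": 0.41, "answer_accuracy": 0.89}
for alert in drift_alerts(baseline, this_week):
    print("ALERT", alert)  # in practice, notify the responsible engineers here
```

Here only `lexical_diversity` trips the alert, since it fell by about a third while accuracy barely moved; a metric missing from the current evaluation is also reported.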

Use Hybrid AI Strategies: A very practical way to keep generative AI outputs accurate is to combine pure generative models with retrieval or external knowledge. For example, Retrieval-Augmented Generation (RAG) is a technique where the model searches a knowledge database for relevant info and uses that in its answer, instead of relying purely on its internal memory. If your chatbot can pull facts from a vetted database or the web at runtime, it’s less likely to spiral into made-up content even if its base model is somewhat skewed. Similarly, consider using ensemble models, where multiple models vote on or contribute to the final answer. If one model has learned a weird quirk, another might counterbalance it. Many modern AI systems use a combination of an LLM plus tools (calculators, search engines, etc.) to ensure outputs remain factual and diverse. By constantly referencing outside sources of truth, these hybrids help curb the feedback loop that leads to collapse.
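The retrieval half of RAG can be sketched without any external services. In this toy version, word overlap stands in for a real search or vector index, and the knowledge base, `retrieve`, and `grounded_prompt` are hypothetical; the resulting prompt would then be sent to whatever model the system uses.

```python
def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Rank vetted documents by word overlap with the query (a stand-in for a
    real keyword or vector search index) and return the top-k document ids."""
    q = set(query.lower().split())
    ranked = sorted(documents,
                    key=lambda doc_id: len(q & set(documents[doc_id].lower().split())),
                    reverse=True)
    return ranked[:k]


def grounded_prompt(query: str, documents: dict[str, str], k: int = 2) -> str:
    """Build a prompt that tells the model to answer only from retrieved sources."""
    context = "\n".join(f"[{d}] {documents[d]}" for d in retrieve(query, documents, k))
    return ("Answer using only the sources below; say so if they do not cover it.\n"
            f"{context}\n\nQuestion: {query}")


kb = {
    "refunds": "refunds are issued within 5 business days of approval",
    "shipping": "standard shipping takes 3 to 7 business days",
    "warranty": "the warranty covers manufacturing defects for 2 years",
}
prompt = grounded_prompt("how many days until refunds are issued", kb, k=1)
print(prompt)
```

Telling the model to admit when the sources do not cover a question is what keeps the answer tethered to vetted content instead of the model's own memory.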

Incorporate Adversarial Checks: In machine learning, an adversarial approach can help maintain output quality. One example from research is PacGAN, an approach in GAN training where the discriminator (the model that critiques generated data) is given multiple samples at once. This makes it easier to spot lack of variety in the generator’s output (i.e., mode collapse, a related failure in GAN training where the generator produces only a few kinds of output) and penalize it. In a broader sense, you can employ similar adversarial checks for your models. Have a secondary model or process that scrutinizes outputs for signs of collapse (like repetition or too-high confidence on likely incorrect answers). If it detects an issue, it can trigger adjustments to training. Some companies implement continuous evaluation pipelines where generated content is checked for originality and correctness by another system. Think of it as quality control for the AI: never let it just generate freely without some watchdog in place.
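The packing step at the heart of PacGAN is simple: groups of m samples from the same source are concatenated into a single discriminator input, so the discriminator can notice when a group looks suspiciously uniform. The sketch shows only that packing step, not a full GAN; `within_pack_spread` is an extra, hypothetical diagnostic added to make the idea visible.

```python
def pack(samples: list[list[float]], m: int) -> list[list[float]]:
    """PacGAN-style packing: concatenate m samples from the same source (all real
    or all generated) into a single discriminator input."""
    if m < 1 or len(samples) % m:
        raise ValueError("batch size must be a positive multiple of m")
    return [sum(samples[i:i + m], []) for i in range(0, len(samples), m)]


def within_pack_spread(packed: list[float], m: int) -> float:
    """Illustrative diagnostic, not part of PacGAN itself: mean absolute difference
    between the m samples inside one pack. 0.0 means the pack is all duplicates."""
    if m < 2:
        raise ValueError("need at least two samples per pack")
    d = len(packed) // m
    parts = [packed[i * d:(i + 1) * d] for i in range(m)]
    pairs = [(a, b) for i, a in enumerate(parts) for b in parts[i + 1:]]
    return sum(sum(abs(x - y) for x, y in zip(a, b)) / d for a, b in pairs) / len(pairs)


generated = [[0.5, 0.5], [0.5, 0.5], [0.1, 0.9], [0.8, 0.2]]
packs = pack(generated, m=2)
print(packs)  # [[0.5, 0.5, 0.5, 0.5], [0.1, 0.9, 0.8, 0.2]]
print([within_pack_spread(p, m=2) for p in packs])  # first value is 0.0
```

A single generated sample can look perfectly realistic on its own; only when several are seen side by side does the duplication become visible, which is the intuition behind packing.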

Governance and Policies for Data: Beyond the tech, having a governance policy can save you from accidentally causing model collapse. For instance, set a rule that “we do not train on our own AI’s outputs unless they’ve been reviewed or significantly augmented.” If you’re using AI to generate synthetic data to augment training (which is sometimes done to get more data), make sure that synthetic data passes a high bar (as Microsoft did in their research by using multi-agent generation to keep it varied). Encourage a culture of careful data curation: data scientists should know the provenance of their training data and be cautious if a large chunk seems to be AI-created. In regulated industries, this may even become part of compliance – proving that your model’s training data hasn’t become a self-referential soup. Essentially, treat training data quality as seriously as software quality.
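A policy like “no unreviewed training on our own outputs” can be enforced as an automated gate before each training run. The provenance labels, the `Sample` fields, and the 5% limit on unknown-origin data are illustrative assumptions; the sketch checks only the review rule and does not try to judge whether data was “significantly augmented.”

```python
from dataclasses import dataclass


@dataclass
class Sample:
    sample_id: str
    provenance: str  # assumed labels: "human", "our_model", "third_party_ai", "unknown"
    human_reviewed: bool = False


def policy_violations(dataset: list[Sample], max_unknown_share: float = 0.05) -> list[str]:
    """Check a dataset against two example rules: (1) our own model's outputs
    need human review, (2) data of unknown origin stays below a small share."""
    problems = [f"{s.sample_id}: unreviewed output from our own model"
                for s in dataset if s.provenance == "our_model" and not s.human_reviewed]
    unknown = sum(s.provenance == "unknown" for s in dataset)
    if dataset and unknown / len(dataset) > max_unknown_share:
        problems.append(f"{unknown}/{len(dataset)} samples have unknown provenance")
    return problems


data = [Sample("a1", "human"), Sample("a2", "our_model", human_reviewed=True),
        Sample("a3", "our_model"), Sample("a4", "unknown")]
for problem in policy_violations(data):
    print(problem)
```

A non-empty result would block the training job until someone reviews the flagged data.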

Implementing these practices can greatly reduce the risk of collapse. It’s similar to maintaining healthy eating habits for humans – if an AI model “eats” a balanced diet of data and gets regular check-ups, it’s likely to stay fit. Organizations often bring in AI learning and development programs or external AI consultancy experts to establish these best practices. The good news is that by being proactive, we can enjoy the benefits of generative AI while keeping it robust and trustworthy.

Q: What is the difference between “model autophagy disorder” and “AI cannibalism”?

A: These two terms refer to the same core phenomenon as model collapse. Model autophagy disorder (MAD) is a formal term used in research to describe a model training on its own outputs (autophagy meaning “self-consuming”) and degrading as a result. AI cannibalism is a more casual nickname that means the AI is “eating itself” by consuming AI-generated data. Both paint a picture of the model gradually destroying its own performance by training on synthetic content. In essence, there’s no functional difference – MAD is the scientific label, and AI cannibalism is a colorful way to describe it in plain English.

Q: What causes model collapse in generative AI?

A: Model collapse is caused by a combination of error accumulation, feedback loops, and data contamination during training. Specifically, when an AI model is repeatedly trained or fine-tuned on data that includes content produced by AI (rather than ground-truth human data), the model starts learning from an already distorted version of reality. Each training iteration amplifies common patterns and erases nuances, due to the lack of fresh real examples. Small errors or biases in the AI-generated training data get reinforced and grow over time. Also, if the training process doesn’t enforce diversity (for example, no mechanism to ensure the model’s output stays varied), the model will converge to more uniform outputs. Think of a rumor that keeps getting repeated and distorted – by the end, the story is very different from the truth. In technical terms: recursive self-learning without proper checks, bias toward high-frequency data patterns, and lack of novel information are what drive model collapse.

Q: Why is model collapse a growing concern today?

A: Two main reasons: the ubiquity of generative AI and the changing composition of available data. Generative AI models like GPT-4, DALL-E, Stable Diffusion, etc., are now being used to produce huge amounts of text, images, and other content. The internet and datasets at large are increasingly filled with AI-generated material – whether it’s AI-written articles, fake images, or AI-created code. As this trend accelerates, any new model trained naively on “what’s out there” could inadvertently be trained on a lot of AI-produced data from older models. This creates a fertile ground for model collapse to happen. Researchers started raising alarms in the last couple of years when they realized how much AI output is going online. It’s a bit of a ticking time bomb: if we don’t adjust our training methods, future AI could end up being an echo of an echo. Additionally, the hype and rush to build ever-bigger models quickly means some teams might cut corners on data curation, which heightens the risk. In summary, it’s a hot topic now because AI is everywhere, and we need to ensure it doesn’t end up learning mostly from itself in a vicious cycle.

Q: How can model collapse be prevented?

A: The key to prevention is maintaining a connection to reality and diversity in the model’s training and usage. Concretely: always include ample real, human-generated data in training and keep that data as up-to-date as possible. Avoid training loops that feed a model its own outputs without oversight – if you generate synthetic data to augment training, carefully filter and mix it with real data (and don’t do many generations of this). Use techniques like human-in-the-loop feedback, where humans regularly correct the model or rate its outputs, to steer it away from bad habits. Technically, one can implement safeguards like adversarial training or diversity-encouraging regularization (so the model is penalized if it becomes too repetitive). At the deployment level, monitor the model’s output quality; if you see signs of collapse, retrain with a fresh dataset. And, as discussed, utilize platforms or tools that support these practices – for example, Microsoft’s Copilot Studio provides monitoring and allows integration with external knowledge sources, which helps prevent collapse in deployed AI agents. By combining good data hygiene, algorithmic techniques, and oversight, we can largely avoid model collapse.
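One simple, named form of the diversity-encouraging regularization mentioned above is a confidence penalty (entropy regularization): subtract beta times the entropy of the model’s predicted distribution from the usual negative log-likelihood, giving a loss of `-log p(y) - beta * H(p)`. This is one technique chosen for illustration, not the only option, and on its own it will not undo collapse caused by contaminated data. The function names and `beta` value are assumptions.

```python
import math


def entropy(probs: list[float]) -> float:
    """Shannon entropy (in nats) of a predicted next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def penalized_loss(probs: list[float], target: int, beta: float = 0.1) -> float:
    """Negative log-likelihood minus beta * entropy: a 'confidence penalty' that
    makes sharply peaked, low-entropy predictions relatively more expensive."""
    return -math.log(probs[target]) - beta * entropy(probs)


peaked = [0.97, 0.01, 0.01, 0.01]
spread = [0.70, 0.10, 0.10, 0.10]
for name, dist in (("peaked", peaked), ("spread", spread)):
    print(name, "entropy:", round(entropy(dist), 3),
          "bonus subtracted:", round(0.1 * entropy(dist), 3))
```

The spread-out distribution earns a larger entropy bonus, so training is nudged away from collapsing onto a single high-probability output.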

Q: What are the risks of model collapse for real-world applications?

A: In real-world applications, model collapse can undermine the very purpose of using AI. If you have a customer support chatbot, a collapsed model might start giving irrelevant or duplicate answers, frustrating customers and damaging your company’s reputation. In high-stakes fields like healthcare or finance, an AI model that has quietly collapsed might miss important anomalies – perhaps failing to flag an unusual symptom in a medical diagnosis scenario, or overlooking a rare fraud pattern in banking – because it’s biased toward only the common cases. This could lead to harm or financial loss. There’s also a risk in creative applications: imagine a content generation AI that many writers use. If it collapses, suddenly everyone using it gets very bland content; it could stifle creativity on a broad scale. Furthermore, from a strategic business view, if a company’s AI systems degrade, it could lose its competitive edge or face compliance issues (especially if the AI starts producing incorrect outputs that lead to bad decisions). In summary, the risks range from operational inefficiency and user dissatisfaction all the way to safety issues and financial errors, depending on how critical the AI’s role is. That’s why organizations and data & AI consultancy experts are treating this as a significant reliability issue to address proactively.

Model collapse is a critical concept to understand as we integrate AI deeper into business and society. The idea that an AI could essentially “starve” itself of knowledge by consuming its own synthetic outputs is a cautionary tale of unrestrained AI development. Fortunately, awareness of this issue is growing, and so are the solutions. By ensuring variety and reality in training data, keeping humans in the loop, and leveraging advanced tools (like those Microsoft and others provide for monitoring and multi-model orchestration), we can prevent our AI models from falling into the collapse trap.

For IT leaders and AI practitioners, the takeaway is clear: treat your AI’s learning process with care and vigilance. Much like tending a garden, you have to pull out the weeds (bad data), rotate the crops (diverse inputs), and watch for blight (signs of collapse). With the right approach, AI models can continue to flourish, providing innovative and trustworthy solutions without fading into a shadow of their former selves. In the rapidly evolving landscape of AI, being proactive about issues like model collapse is part of a responsible, long-term strategy. After all, the goal is to build AI systems that get better with time – not ones that burn bright and then unexpectedly burn out. Let’s keep our AI healthy, robust, and always learning the right lessons.