Data Annotation for AI: A Complete Guide to Labeled Training Data

.avif)

.avif)

Imagine you are developing a self-driving car. To make the car "see" and understand the road, it must learn to recognize objects like pedestrians, traffic signs, and other vehicles. For this to happen, the car’s machine learning system requires thousands—if not millions—of examples from real driving scenarios. But an autonomous car doesn’t inherently know that a red octagon is a stop sign or that a figure in a crosswalk is a pedestrian. This is where data annotation comes in.

Data annotation is the process of labeling raw data (images, text, audio, etc.) so that machine learning models can understand and learn from it. In other words, data annotation provides the labeled examples that AI systems need to recognize patterns and make accurate predictions. High-quality annotated data is essential for AI/ML success, as accurately labeled data directly improves model performance. In fact, one of the most common reasons AI models underperform is poor-quality or insufficiently annotated data. At Digital Bricks, we’ve seen firsthand that robust data annotation is a foundational step in any successful AI project. As an AI development partner, Digital Bricks specializes in helping organizations on their AI journey by ensuring their data is meticulously labeled and ready for model training.

Here’s what we will explore in this article:

Let’s get into it!

The best way to understand data annotation is by analogy. Think about teaching a child to recognize different animals. You might show them a picture of a dog and say, "This is a dog," then show a cat and say, "This is a cat." Over time, by seeing labeled examples, the child learns to identify dogs versus cats on their own. Data annotation works in a similar way for machines. Just as a child needs examples with labels (e.g. being told "this is a dog"), machine learning models require annotated data to learn from.

In short, data annotation is the act of tagging or labeling data – such as images, text, audio, or video – with informative labels so that ML algorithms can recognize and understand the content. These labels serve as ground truth examples that teach the system what patterns to look for. For instance, if we provide a model thousands of images annotated with labels like "dog" or "cat," the model can learn the visual differences between dogs and cats. Later, it can take unlabeled images and predict whether they contain a dog or a cat, much like the child eventually recognizes animals without help.

It’s worth noting that the terms data annotation and data labeling are often used interchangeably. Technically, data labeling usually refers to the more specific act of assigning labels or categories to data points (e.g. tagging an image as "cat" or marking a tweet as "positive sentiment"), whereas data annotation is a broader term that can include labeling as well as adding detailed notes, bounding boxes, or metadata to enrich the data. In practice, though, you’ll hear both terms used to describe the overall process of preparing labeled datasets for AI.

Different data modalities (images, text, audio, video, etc.) require different annotation methods to prepare them for machine learning tasks. Depending on the nature of the data, there are various approaches to labeling and tagging. Understanding these types will help you choose the most effective way to train models for a given application. Below, we explore the most common categories of data annotation and the typical techniques used in each.

Image annotation involves labeling images so that ML models can identify and interpret objects or features within them. Think of it as teaching a vision model what’s in a picture. There are several techniques for image annotation, each varying in the level of detail:

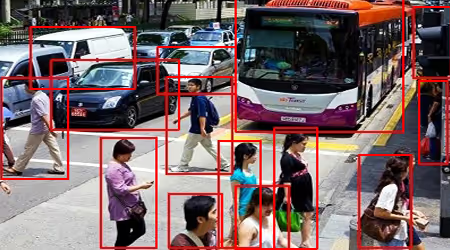

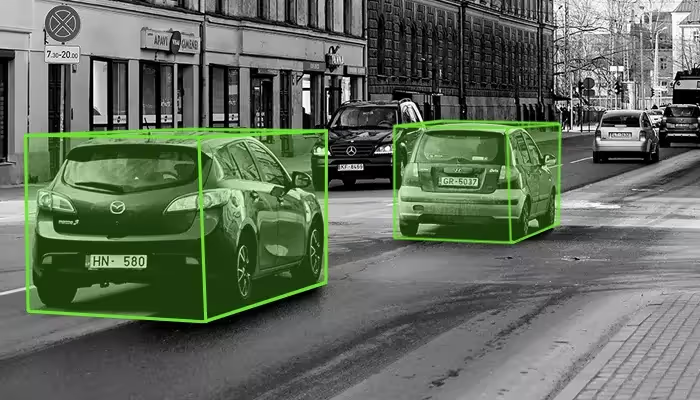

Bounding box annotation on an image: each object (cars, in this case) is enclosed in a rectangle.

Bounding boxes are one of the simplest and most widely used image annotation methods. This technique involves drawing a rectangle (box) around an object in an image to indicate its presence and location. For example, in self-driving car development, annotators might draw bounding boxes around pedestrians, cars, or road signs in thousands of street images. These boxed regions, labeled appropriately (e.g. "pedestrian" or "stop sign"), help the model learn to detect those objects in new images. Bounding boxes are especially common in object detection tasks where the goal is to locate and identify objects within an image.

Semantic segmentation example: each pixel is colored according to the object class (road, sidewalk, pedestrian, etc.).

Semantic segmentation takes image annotation to a granular level. Instead of just drawing a rough box around an object, semantic segmentation involves labeling each pixel in an image with a category. In a street scene, for instance, every pixel belonging to a pedestrian might be labeled one color, road pixels another color, buildings another, and so on. The result is that the model learns not only what objects are present but also the exact shapes and boundaries of each object or region. This gives the AI a much more detailed understanding of the scene. Semantic segmentation is crucial for applications like autonomous driving (distinguishing road vs. sidewalk vs. obstacles) or medical imaging (identifying the precise outline of a tumor in an MRI scan).

Landmark annotation: key points (dots) marked on a face image to identify facial features.

Landmark annotation, also known as key-point annotation, involves marking specific points of interest within an image. Rather than outlining entire objects or regions, annotators place points at critical locations. For example, on a human face, landmarks might be placed at the corners of the eyes, the tip of the nose, the edges of the lips, etc. In a pose estimation task, landmarks might mark key joints on the human body (elbows, knees, etc.). This technique is especially useful in applications like facial recognition (where the distances and relationships between facial landmarks help identify a person) and motion analysis (like determining the posture of a person for sports or physical therapy). By tracking these key points, a model can learn to recognize patterns such as facial expressions or body movements.

Text annotation is the process of labeling and tagging text data to make it understandable to natural language processing (NLP) models. Human language is rich and nuanced, so to teach machines to comprehend it, we must annotate various features in text. Text annotation enables AI to interpret context, meaning, intent, and other linguistic elements in documents or speech transcripts. Common text annotation techniques include:

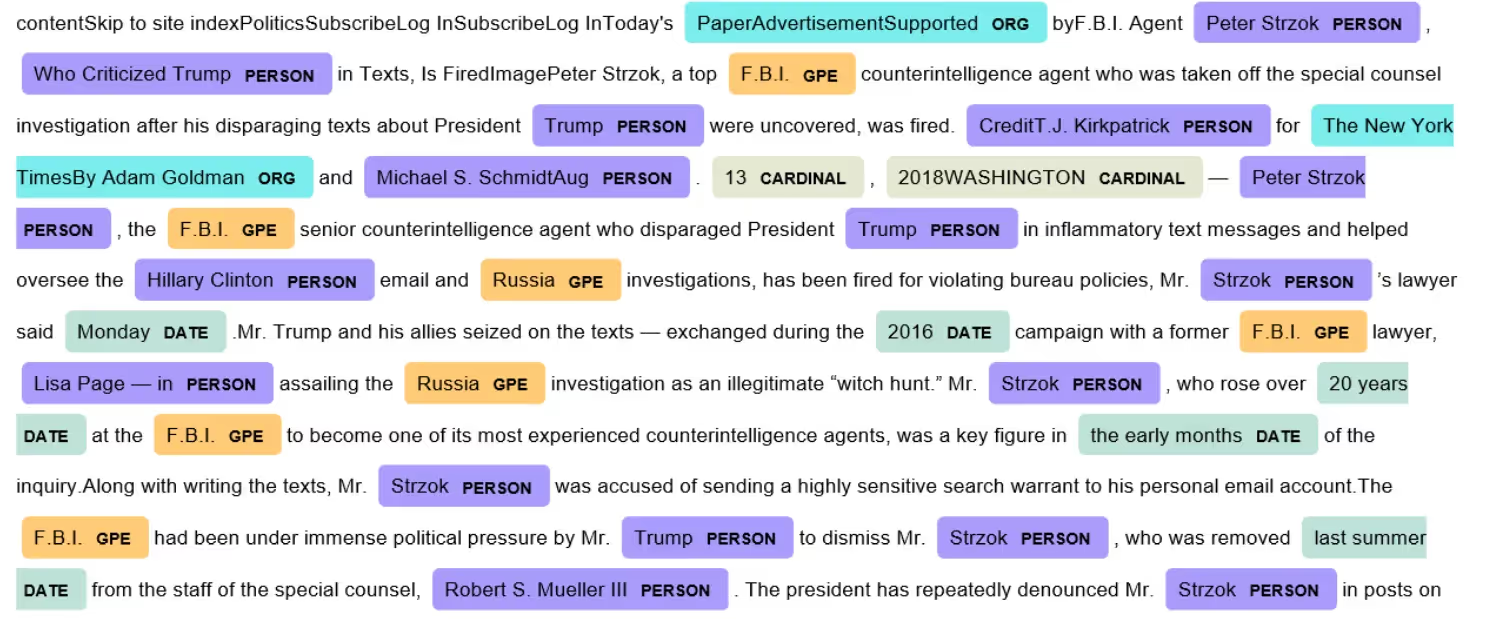

Named Entity Recognition (NER) example: entities like names, organizations, and locations are highlighted in text.

Entity recognition involves identifying and tagging specific entities (key items of information) in text. These entities could be names of people, places, organizations, dates, product names, and so on. For example, in the sentence "Apple opened a new store in New York," an annotator (or a trained model) would label "Apple" as an Organization and "New York" as a Location. The model trained on such data will learn to recognize those categories in new text. This technique, often called Named Entity Recognition (NER), is fundamental for tasks like information extraction, question answering, and building knowledge graphs, where understanding who did what and where is crucial.

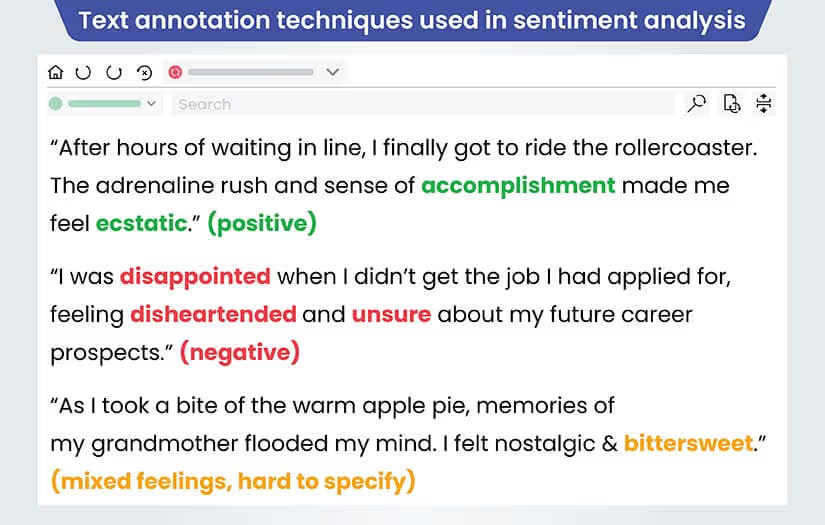

Sentiment tagging example: text from a customer review labeled as positive, negative, or neutral sentiment.

Sentiment tagging is the process of annotating text with the sentiment or emotion expressed. For instance, a product review might be labeled as positive ("I love this product!"), negative ("It broke after one use."), or neutral if the text is just factual with no clear sentiment. This is widely used in sentiment analysis for social media monitoring, customer feedback analysis, and reputation management. By training on sentiment-tagged data, AI models can learn to gauge public opinion or customer satisfaction automatically. This helps organizations understand how users feel about their brand or product in real time.

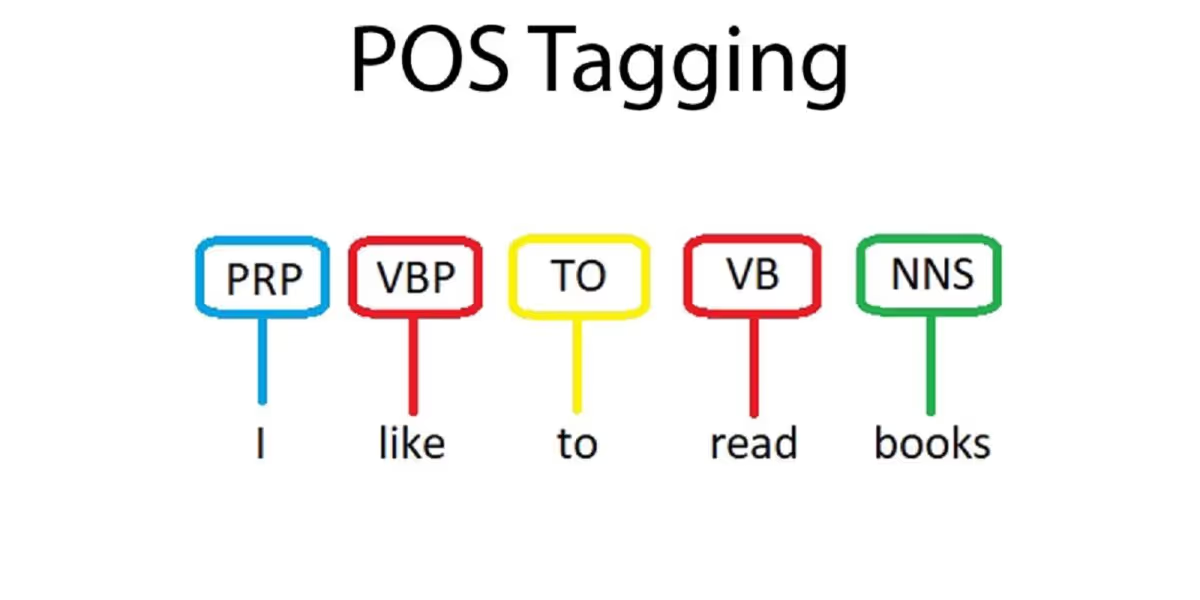

Part-of-speech (POS) tagging example: each word in a sentence is tagged with its grammatical role.

Part-of-speech (POS) tagging involves labeling each word in a sentence with its grammatical category: noun, verb, adjective, adverb, etc. For example, in the sentence "The quick brown fox jumps over the lazy dog," an annotator would tag "The" as an article, "quick" as an adjective, "brown" as an adjective, "fox" as a noun, "jumps" as a verb, and so on. POS tagging is fundamental for understanding sentence structure and is used in many NLP pipelines. It helps models with downstream tasks like parsing sentences, machine translation, and speech recognition by providing grammatical context. Essentially, it’s teaching the AI the role each word plays in a sentence, which is key to interpreting meaning.

Audio annotation involves labeling sound files to train models for speech recognition, music classification, event detection, and more. Audio data can be complex – it might contain speech, background noise, multiple speakers, music, etc. By annotating audio, we make these patterns legible to AI. Common audio annotation techniques include:

Transcription is one of the most straightforward types of audio annotation: converting spoken words in an audio file into written text. This is the basis of speech-to-text applications like voice assistants, dictation software, or automated customer service systems. In transcription tasks, annotators listen to audio recordings (e.g. phone conversations, interviews, voice commands) and write down exactly what is said. The transcribed text is then used to train speech recognition models. High-quality transcription is crucial because any errors in the text (misheard words, missing words, etc.) can teach the model incorrectly. Often, transcribers will also annotate additional details like speaker identity (who said what when there are multiple speakers) or even mark special sounds (laughs, coughs, background noise) if needed by the application.

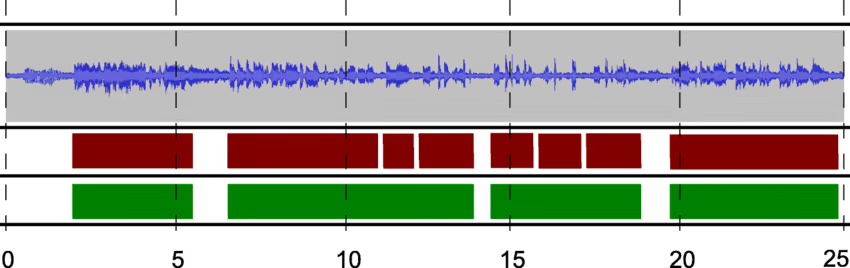

Speech segmentation: an audio waveform with segments marked for different speakers or sections.

Speech segmentation is the task of dividing an audio stream into distinct sections, often to separate different speakers or different audio events. For example, in a recorded conversation or interview, speech segmentation might involve annotating where Speaker A stops talking and Speaker B starts, throughout the audio. This is crucial for tasks like answering "who spoke when" and for cleaning up audio data (removing long silences, separating music from speech, etc.). In call center analytics, for instance, segmentation helps isolate the customer’s voice from the agent’s voice. By training models on segmented audio, we enable them to handle real-world audio where multiple sounds overlap.

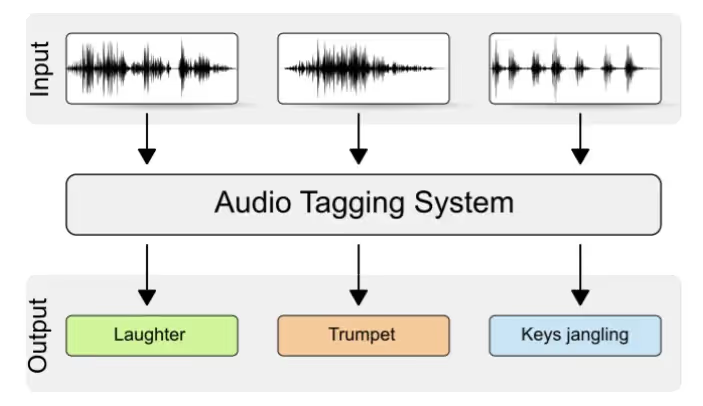

Sound tagging: labeling specific sounds or events (e.g. siren, dog bark) in an audio clip.

Sound tagging is when specific sounds or events within an audio file are labeled. Unlike transcribing speech, this is more about identifying non-speech audio events or categories of sounds. For instance, in an audio recording from a city street, annotators might tag occurrences of "car horn", "siren", "footsteps", "bird chirping", etc. Sound tagging is useful for training models in domains like urban noise analysis, wildlife monitoring (e.g. tagging animal calls in nature recordings), or multimedia search (e.g. find all videos that have an applause sound). By labeling these sounds, we teach AI models to recognize them – so a model could, say, listen to a piece of audio and detect that a siren is present, which could be useful in an emergency detection system.

Video annotation involves labeling objects or actions in video footage to help ML models interpret moving visuals. Video is essentially a sequence of images (frames) over time, so it includes the challenges of image annotation plus the temporal dimension (motion and changes over time). Key video annotation techniques include:

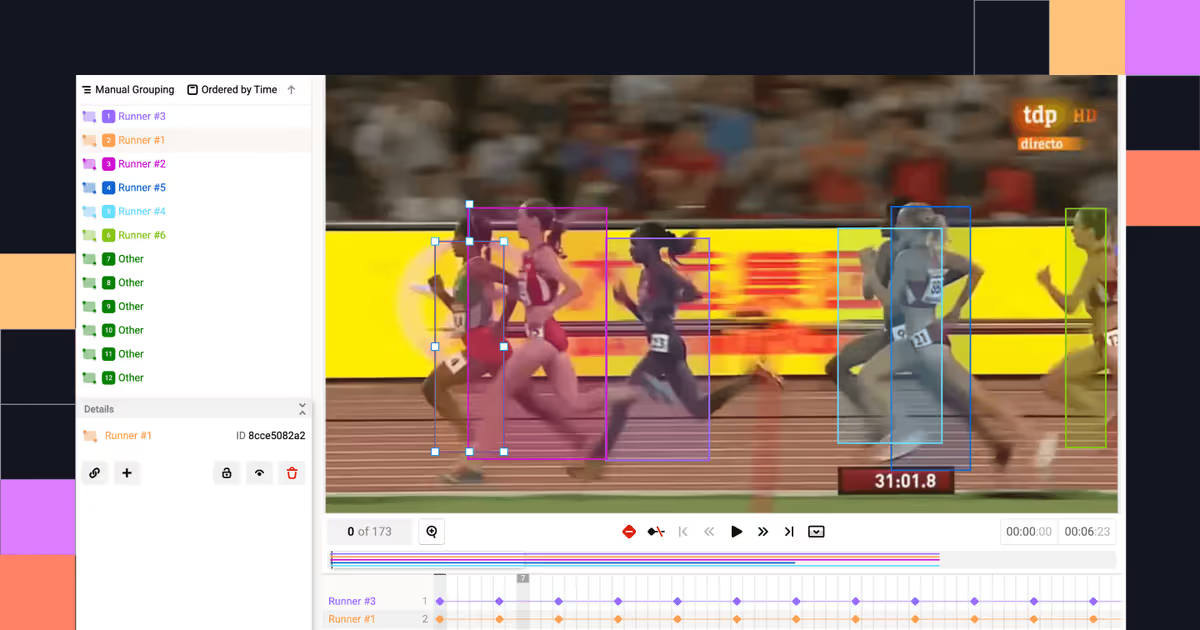

Object tracking in video: the same car is tracked and annotated across consecutive frames.

Object tracking is the technique of continuously labeling an object across multiple frames in a video so that its movement can be followed. Instead of just drawing a bounding box around a car in a single image, for example, an annotator would draw a box around the car in frame 1, then in frame 2, and so on, ensuring the same car is consistently labeled across the video. Often annotation tools assist with this by propagating the box forward and the annotator adjusts it as needed. This creates a track for the object. In autonomous driving scenarios, object tracking is crucial – e.g. following a pedestrian as they cross the street or a car as it changes lanes helps the AI predict trajectories and avoid collisions. Object tracking data teaches models to understand movement and persistently identify objects even as they shift position or appearance over time.

Activity labeling: tagging actions (like walking vs. running) in a video scene.

Activity labeling (or action recognition) means assigning labels to particular actions or behaviors observed in a video. For instance, in a security camera video you might label segments where "a person is walking" versus "a person is running" or "falling down". In sports analytics, you might label actions like "shooting," "dribbling," or "scoring" in a basketball game footage. By marking these activities, we enable models to learn how different actions look over time. This is key for applications like video surveillance (identifying suspicious behavior), sports video analysis (tagging highlights), or any context where recognizing what is happening in the video is the goal (rather than just identifying who or what is present). With activity-labeled data, a model can be trained to automatically detect when certain actions occur in new videos.

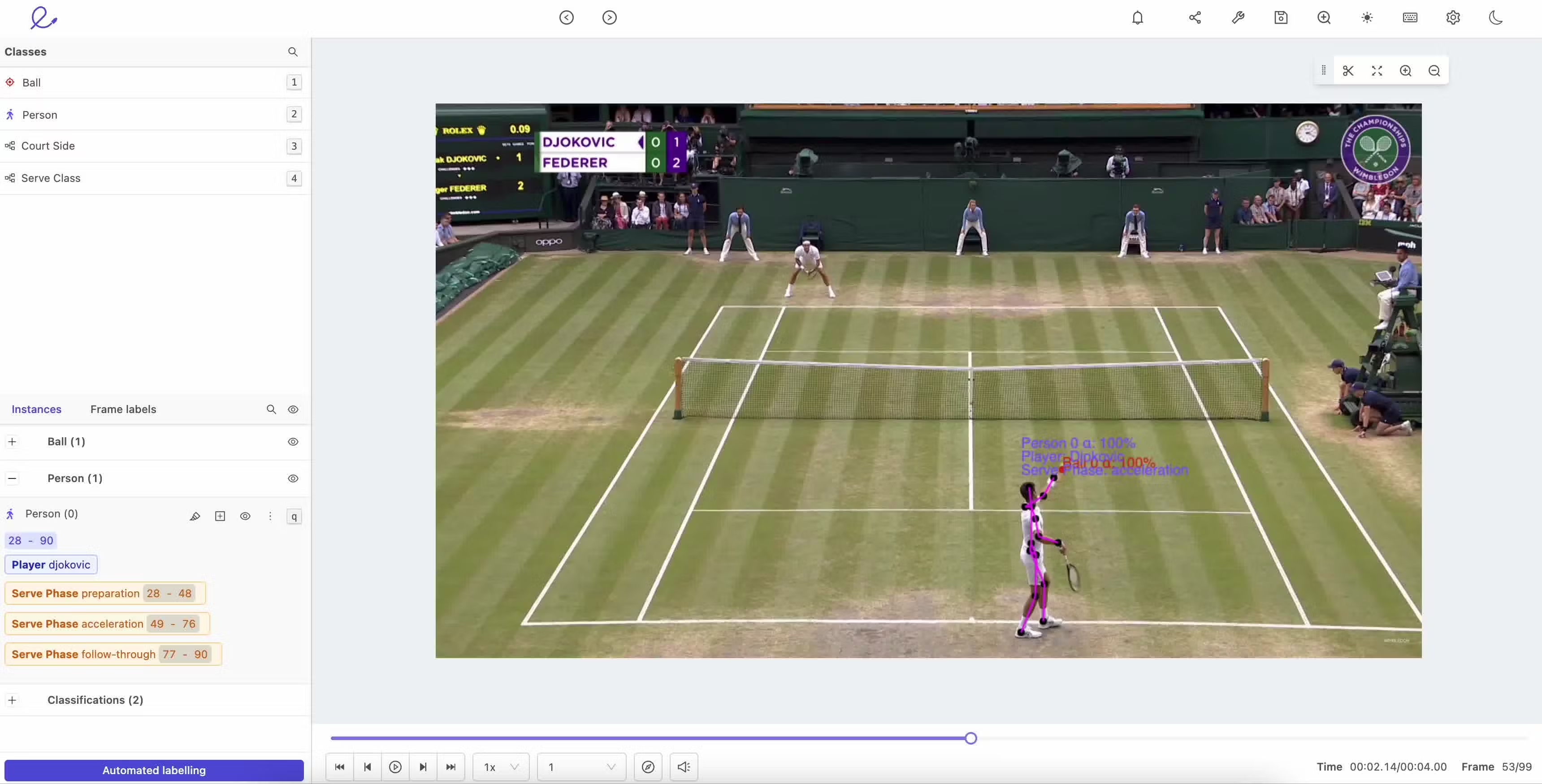

Frame-by-frame annotation: each video frame labeled in detail (e.g. for precise motion analysis).

Frame-by-frame annotation is essentially treating every frame of a video as an image and labeling it exhaustively, often with high detail. This can be thought of as a combination of image annotation and object tracking: every frame is annotated (with boxes, segmentation masks, etc.), without skipping, to capture fine-grained changes. This method is used when maximum precision is needed. For example, in medical or scientific videos (like analyzing the motion of a patient doing rehab exercises, or cells moving in a microscope video), you might need to label every single frame to track subtle changes. It’s a very time-consuming approach but yields a richly annotated dataset. Frame-by-frame labeled video can train models for tasks like motion analysis, where understanding every step of a movement is necessary. With modern tools, sometimes automation assists this (interpolating between keyframes), but human oversight ensures that each frame’s labels are correct.

Having covered the major types of data annotation by data modality, we see that each has specialized techniques – from drawing boxes and masks on images to tagging text and audio. Next, we’ll discuss how these annotations are actually done in practice (the techniques and workflows) and the tools that make the job easier.

The approach used to annotate data can vary depending on factors such as the complexity of the task, the size of the dataset, and the level of precision required. In some cases, you might rely entirely on human annotators; in others, you might use automation or a mix of both. Here we’ll explore the various approaches to annotation, ranging from fully manual to fully automated, including hybrid strategies like human-in-the-loop and crowdsourcing.

Manual annotation means human annotators label the data by hand, without any automated assistance. In this approach, trained individuals (or domain experts for specialized data) carefully review each piece of data and apply the appropriate labels or tags. This could mean drawing bounding boxes on images one by one, reading texts and marking entities or sentiments, listening to audio and writing transcripts, etc., all done by people.

The biggest advantage of manual annotation is accuracy. Humans can understand context, nuance, and complex patterns better than current machines, so they tend to produce very precise and high-quality labels. For projects where quality is paramount – such as medical image annotation (where a mistake could mean a disease goes undetected) or legal document annotation – having skilled humans in charge of labeling ensures a level of reliability that automated methods might not match. Manual annotation also allows handling of subtle cases (like sarcasm in text or faint objects in images) that automated tools might miss or mislabel.

However, manual annotation comes with significant challenges:

Despite these challenges, manual annotation remains essential for many projects where precision is critical. It’s often the gold standard against which other methods are measured. Many organizations try to balance manual annotation with efficiency, by leveraging tools or techniques (like the ones below) to improve speed without sacrificing too much accuracy.

(Side note: In practice, even when other techniques are used, a manual review step is often kept in the loop for quality control. It’s common to see workflows where an automated system suggests labels and humans verify or correct them – which leads us to the next approach.)

Semi-automated annotation combines human expertise with machine assistance. In this approach, a machine learning model or algorithm helps the human annotators by pre-labeling the data or suggesting likely labels, and then humans review and refine those labels. This is a form of human-in-the-loop annotation where automation and human judgment work together.

The benefit of semi-automated annotation is that it can significantly speed up the labeling process while still retaining a high level of accuracy. The idea is that the machine handles the easy or repetitive parts, and humans focus on the tricky or ambiguous parts. For example, imagine annotating thousands of images of cats and dogs. A semi-automated system might first run a simple model to guess which images have cats vs. dogs, labeling them accordingly, and then human annotators just double-check and correct mistakes instead of starting from scratch on each image. This can save a lot of time. In text annotation, a model might highlight likely entities or sensitive content in documents for a human to confirm or adjust, rather than the human reading blind.

Semi-automation is ideal for large projects where doing everything manually would be too slow or expensive, but you can’t fully trust an automated system either. It strikes a balance between speed and quality control. Many modern annotation tools (which we’ll discuss soon) include features for this, like active learning loops where a model continuously retrains on newly labeled data and suggests labels for the next batch of data.

However, semi-automated annotation faces certain challenges as well:

Despite these cons, many organizations find that semi-automation boosts efficiency substantially. By one estimate, a good human-in-the-loop setup can cut annotation time by 50% or more, which is huge for big datasets. It’s a popular approach when you have some confidence in automation but still need human judgment as a safety net.

Automated annotation means using software or algorithms to label data with minimal to no human intervention. In this approach, you might use pre-trained machine learning models or AI-driven tools that can automatically annotate large volumes of data. For example, an AI model might automatically classify images into categories, or transcribe audio to text, without a person doing the labeling.

The main advantage of automated annotation is efficiency. Machines can process vast amounts of data much faster than people can. An automated pipeline could potentially label millions of images or documents in a fraction of the time it would take a team of humans. This makes it an attractive solution for projects with huge datasets – for example, tagging every frame of a 100,000-hour video archive or labeling every post on a large social network for content moderation. If done well, automated annotation can also be cost-effective in the long run, since after the initial setup, you’re not paying for each label.

However, relying on fully automated annotation has some notable drawbacks:

In practice, fully automated annotation is best suited for situations where speed and volume are top priority, and a certain margin of error is acceptable. Often, teams will use automated annotation to do an initial labeling of a large dataset, and then have humans review and correct the labels on a subset of the data to clean it up – a bit of a hybrid approach. Alternatively, automated labeling might be used to pre-label data that will later be double-checked by crowdworkers or internal staff.

Crowdsourcing involves outsourcing data labeling tasks to a distributed, often large, group of people (the "crowd") via online platforms. Instead of having a fixed in-house team, you can tap into thousands of remote annotators on services like Amazon Mechanical Turk or Appen to get the job done. Each annotator might do a small portion of the task, but collectively they annotate a huge dataset quickly.

The primary advantage of crowdsourcing is scalability and speed. By distributing the work to many individuals in parallel, you can accomplish in days what might take an in-house team months. For example, if you have 100,000 images to label, you could post them as tasks on a platform and potentially have all of them labeled within a day if enough crowdworkers pick up the tasks concurrently. It’s also relatively cost-effective for straightforward tasks – you pay per annotation (often only a few cents each), and because many can be done at once, the total turnaround time is short.

However, crowdsourcing has some downsides:

Crowdsourcing is ideal for large-scale projects that require a burst of labeling in a short time, especially when the task can be broken into micro-tasks for many people to do independently. At Digital Bricks, when clients have massive datasets and tight timelines, we often help design crowdsourcing workflows that include strong quality assurance (like having our team audit samples of the crowd-labeled data). In many cases, a combined approach works: use the crowd for volume, and use an in-house or expert team for quality control on a subset of data.

After considering these techniques – manual, semi-automated, automated, and crowdsourced – you might wonder what tools support these processes. Fortunately, there are many data annotation tools available that provide interfaces and features to implement these approaches efficiently. Let’s look at some popular tools and platforms next.

There is a wide range of software tools designed to make data annotation faster, easier, and more organized. These tools range from free open-source applications to full-fledged commercial platforms. Some are specialized for certain data types (like images or text), while others support multiple types. Choosing the right tool can depend on your project’s needs – whether you prioritize cost (free vs. paid), collaboration features, automation capabilities, or integration with your machine learning pipeline.

In this section, we’ll explore some of the most popular data annotation tools and how they can help with different types of projects:

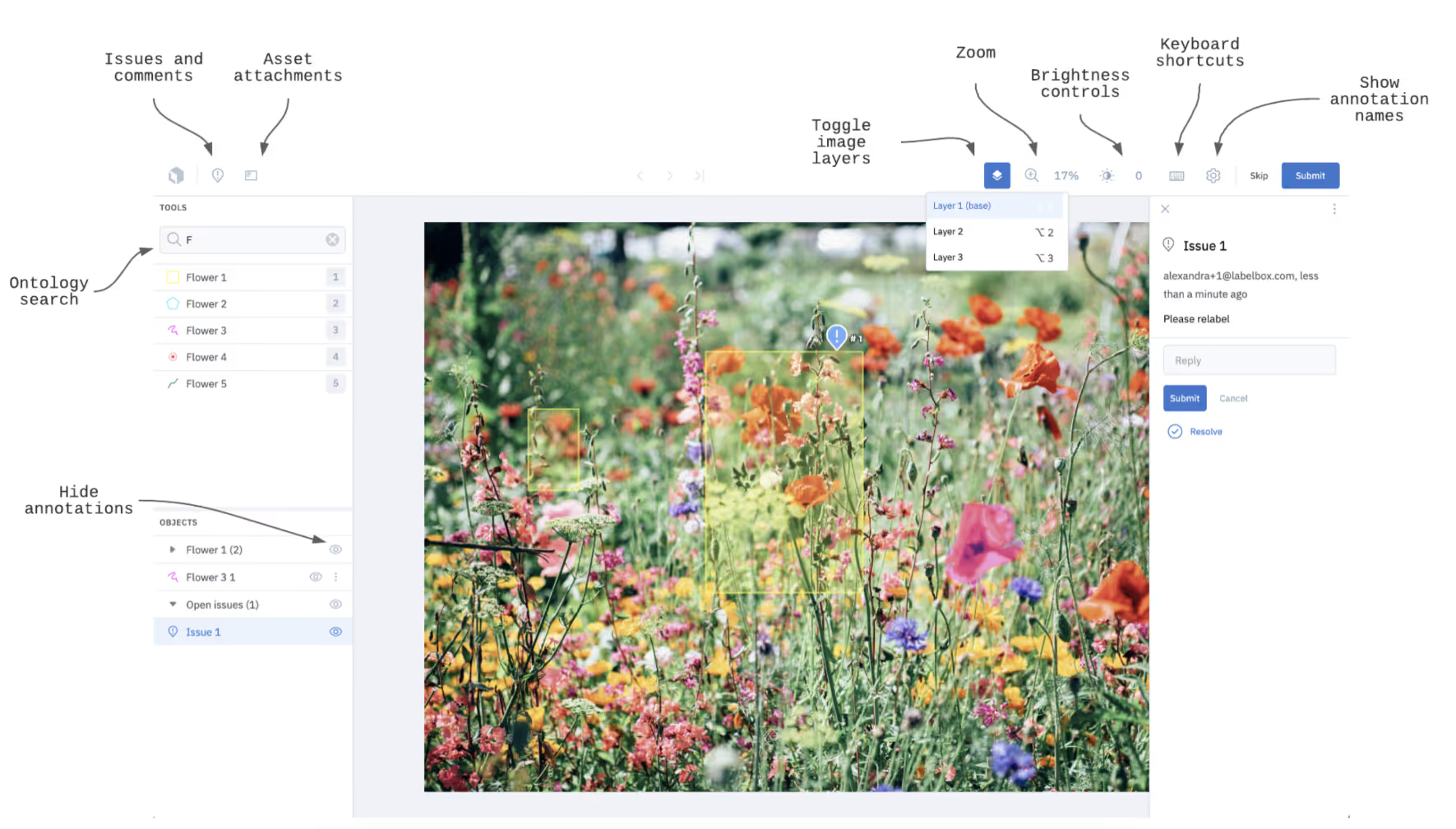

Labelbox is a widely-used commercial platform designed to streamline the entire data annotation workflow, with a strong emphasis on collaboration and quality control. Founded in 2018, Labelbox’s mission has been to build a best-in-class platform for managing training data for AI. The platform provides an intuitive web-based interface where teams can upload data and start labeling right in the browser. It supports images, video, text, and even geospatial data annotation, making it versatile for various machine learning projects.

One standout feature of Labelbox is its built-in project management and quality assurance tools. Team members can be assigned roles (annotators, reviewers, etc.), and the tool facilitates reviewing and approving annotations within the platform. For example, you can set up a second layer of review where one person’s labels must be verified by another – this ensures consistency and catches errors. Labelbox also provides analytics on the annotation progress and quality metrics, so project managers can track how well the annotation process is going.

Another strength is integration and automation. Labelbox can integrate with machine learning models to do things like pre-label images (semi-automated annotation) or suggest annotations, which the human annotator can then accept or adjust. This aligns with the active learning approach to speed things up. Labelbox’s API also allows organizations to plug the tool into their ML pipelines, so data flows in and out smoothly.

Overall, Labelbox is ideal for organizations looking to scale up annotation with a structured, team-centric approach. Many enterprises choose it because it offers a good balance of ease-of-use and advanced features like automation and quality control. (On the flip side, as a commercial product, it has licensing costs – so very small teams or hobby projects might lean towards open-source alternatives.)

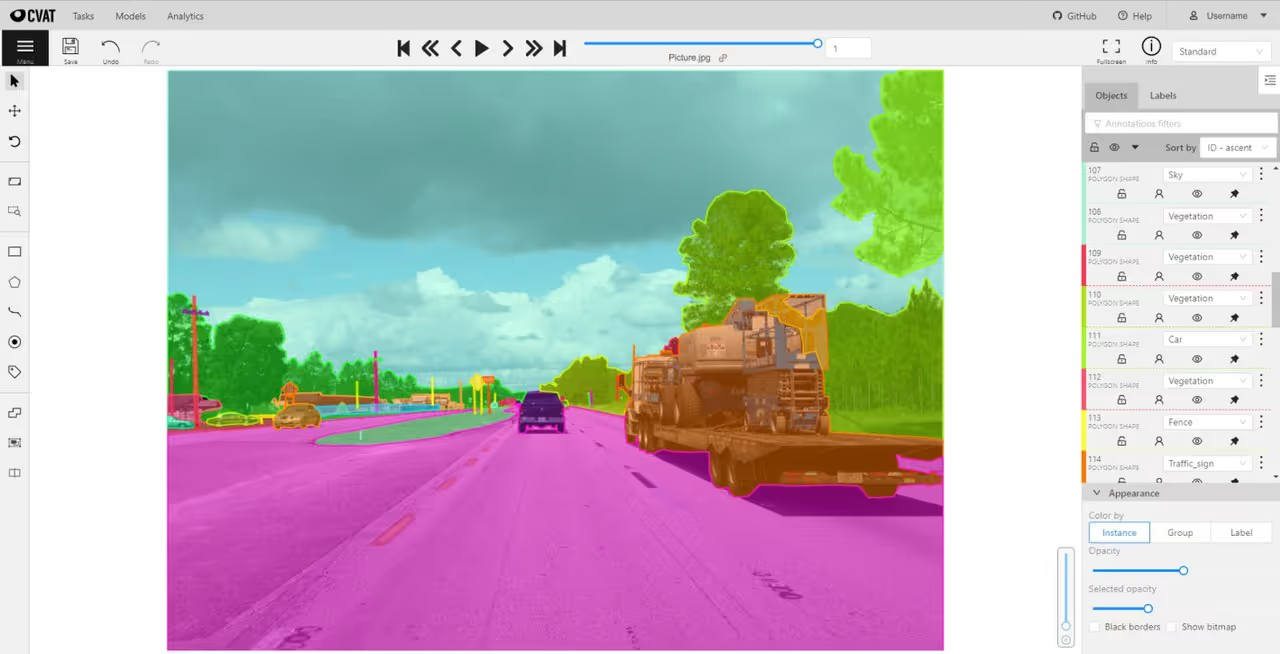

CVAT is an open-source tool specifically designed for annotating image and video data. Developed by Intel, CVAT is a web-based application that you can self-host. Because it’s open-source, it’s free to use and highly customizable – which is great for teams with technical expertise who want to tailor the tool to their needs or integrate it tightly with their systems.

CVAT offers a wide range of annotation techniques out-of-the-box. For images, you can create bounding boxes, polygons, polylines, and keypoints; for video, it has powerful support for object tracking across frames (interpolation between key frames, etc.). This flexibility makes it suitable for many computer vision tasks, from basic object detection to detailed image segmentation or pose estimation. For instance, if you need to annotate a set of images with polygons around every building (for a satellite imagery project), CVAT can do that. If you need to track a car in a video, CVAT can help interpolate the boxes between manually annotated frames, saving you effort.

One key advantage of CVAT is that since you host it yourself, your data stays on your servers – important for privacy/security or compliance reasons, especially in industries like healthcare or finance where using a cloud service might be problematic. Additionally, the open-source community around CVAT means there are plugins and extensions available, and users continue to contribute improvements.

CVAT does require a bit more setup (you need to deploy it on a server or locally via Docker), and its interface, while powerful, may have a steeper learning curve than some commercial tools. However, for researchers and small teams on a budget, CVAT is a popular choice because it delivers enterprise-grade annotation capabilities for free.

Prodigy is an advanced annotation tool focused primarily on text and NLP tasks, though it also supports image annotation. It’s a commercial tool developed by Explosion AI (the creators of the spaCy NLP library). What makes Prodigy stand out is its strong emphasis on active learning and scripting – it’s designed for machine learning developers who want to quickly create custom annotation workflows.

With Prodigy, you can set up an annotation session where a model is running in the background and continuously updating based on the labels you provide. For example, if you’re labeling text for sentiment, Prodigy can use your labels to train a model on the fly and start prioritizing examples where the model is uncertain, which tend to be the most informative examples to label next. This means you spend your labeling effort on the data points that matter most, potentially reducing the total amount of data you need to label to train a good model.

Prodigy is highly scriptable: users can write Python recipes to customize the interface, handle custom data formats, or implement unique labeling logic. This makes it very flexible – one project might use it for tagging named entities in text, the next for image classification, the next for audio transcription. It’s especially popular in NLP research and among AI startups that need to bootstrap training data quickly and want the process tightly integrated with model training.

Despite being a paid tool, Prodigy is lightweight and can be run on a personal machine (no need for a big server setup). Many small teams find that its efficiency gains justify the cost, because it lets one person do the work of what might otherwise require a small team of annotators, thanks to the model assistance. In summary, if you’re a developer who wants to teach a model interactively and create a dataset at the same time, Prodigy is a great choice.

One of the distinguishing features of Ground Truth is the option to use Amazon’s Mechanical Turk workforce, third-party providers, or your own in-house annotators in the loop. Essentially, Ground Truth can coordinate the labeling job for you: it will copy data to a labeling portal, allow workers to label it, and then collect the labels in an S3 bucket. It also offers annotation consolidation algorithms, which are useful if you employ multiple workers for quality control (e.g., it can automatically decide the final label based on several workers’ inputs).

Ground Truth supports a variety of data types – images, text, video, and even 3D point clouds (for tasks like LiDAR data annotation in autonomous vehicles). It also includes built-in auto-labeling: for instance, if you have a partially labeled dataset, Ground Truth can train a model on it and attempt to label the rest of the data automatically, with humans just reviewing a subset for quality. This can dramatically reduce the cost of labeling large datasets.

Because it’s part of SageMaker (AWS’s machine learning platform), the labeled data is immediately available for model training in the cloud. It’s built to scale, so enterprises that have very large datasets and need enterprise-grade security and auditing often gravitate to Ground Truth.

One thing to note: Ground Truth, being an AWS service, comes with AWS costs. While Mechanical Turk can be cheap per label, the costs add up with volume, and using AWS infrastructure means you pay for data storage and possibly the use of their workforce. Still, for organizations already on AWS, the convenience and integration can outweigh those costs.

Each tool has its strengths, and in practice, choosing the right one depends on factors like the size of your project, your budget, the types of data you have, and whether you need advanced features like automation or just a basic labeling interface. Digital Bricks often helps clients evaluate and set up these tools as part of our development services – ensuring that organizations use the right toolchain for their AI journey. The good news is that with the growing AI ecosystem, there’s likely a tool out there that fits your needs perfectly, or can be adapted to do so.

Effective data annotation is critical to the success of machine learning models because the quality of the training data directly impacts the model’s performance. Garbage in, garbage out, as the saying goes – if your annotations are inconsistent or incorrect, your AI system will learn the wrong patterns. By following best practices in data annotation, you can make the process more efficient and ensure you get reliable, high-quality datasets.

Here are some essential best practices to adopt:

One of the most important steps in any annotation project is to create clear, detailed guidelines before the labeling work begins. These guidelines are essentially the instructions for annotators, explaining exactly how each piece of data should be labeled and what rules to follow for consistency.

Clear guidelines help to:

Creating the guidelines often involves deciding on a labeling schema or ontology (the set of labels or classes you will use) and providing examples for each. It’s a great idea to include illustrative examples and counter-examples in the instructions. For instance, a guideline might say: "Label an email as Spam if it is unsolicited and contains marketing content. Example: 'You won a free prize, click here.' Counter-example: A genuine newsletter the user subscribed to should not be labeled as spam."

When data is complex or subjective (e.g., determining the sentiment of a tweet that could be interpreted in different ways), detailed guidelines become even more crucial. They act as the single source of truth that all annotators refer to, which is how you maintain uniformity across the dataset. At Digital Bricks, when we kick off annotation projects for clients, we often run a small pilot with initial guidelines, then refine those guidelines based on annotator feedback and any confusion encountered, before scaling up to label the full dataset.

Quality assurance (QA) in data annotation means having processes to review and verify the labeled data to ensure it meets the required standards of accuracy and consistency. It’s not enough to just label the data and assume it’s correct – you need to actively check and maintain quality.

There are several ways to implement QA in annotation:

The main point is that you shouldn’t treat annotation as a one-pass process. Plan for iteration: label, review, correct, and improve. By implementing a robust quality assurance process, you can catch errors or biases in the data early on and fix them. This is vital because if you only discover poor annotations after training a model (when it performs badly), you’ve wasted a lot of time. It’s more efficient to ensure the data is right from the get-go.

Active learning is a strategy where the machine learning model in training actively participates in the annotation process by identifying the most informative data points for humans to label. The idea is to label smarter, not harder – instead of annotating everything blindly, let the model tell you which examples would yield the most benefit if labeled.

In practical terms, an active learning setup often works like this: you train a model on a small initial set of labeled data. Then you run that model on a large pool of unlabeled data to get predictions. The model will be more confident on some and less on others. You then select a batch of examples where the model is least confident (or where it’s most likely wrong, e.g., it’s uncertain between two classes) and present those to a human annotator to label. Once those are labeled, you add them to the training set, update the model, and repeat the process. The model’s job is to point out “I’m not sure about these – please label them,” focusing the human effort on the tough cases that the model can’t figure out on its own.

Why do this? Because often in datasets, especially large ones, many items are easy and the model could predict them correctly even without seeing them labeled. The hardest, most confusing examples are the ones that really improve the model when clarified. By using active learning, teams have found they can achieve high model performance with significantly fewer labeled examples than if they just randomly selected data to label. This is a big win when annotation is expensive.

For example, imagine a text classification task to filter out abusive comments. Perhaps 90% of comments are obviously non-abusive and 5% are obviously abusive – the model can learn those from relatively few examples. The remaining 5% might be borderline cases or require context. Active learning would quickly skip over the straightforward ones and zero in on those borderline cases for humans to label. Thus, the resulting training set is enriched with tricky examples, making the model more robust.

From the annotator’s perspective, active learning can make the work more interesting too – they’re not labeling redundant easy items as much, and they get to focus on nuanced decisions. Many modern annotation tools (like Prodigy, as mentioned) have active learning components, and even frameworks like scikit-learn and PyTorch have libraries to help implement it. Leveraging active learning is a best practice when you have large unlabeled pools and want to maximize the impact of each label you spend time/money on.

Just as we train models, we should also train the annotators! Even skilled annotators need to be onboarded to the specifics of your project. Taking time to train the people who will do the labeling often pays huge dividends in quality.

Annotator training can involve:

Remember that data annotation can be tedious and cognitively demanding work. Well-trained annotators are not only more accurate but also typically faster because they don’t second-guess what they should do in a given situation – they have clear guidance. Moreover, investing in training helps annotators feel more engaged and valued, potentially reducing turnover and improving focus.

At Digital Bricks, when we manage annotation teams, we often create a “study guide” or cheat-sheet version of the guidelines and encourage annotators to reference it as they work. We also set up channels (like a chat group) where annotators can quickly ask questions if unsure about a particular item. It’s far better for them to ask and get it right than silently guess and possibly label a whole batch incorrectly.

By following these best practices – clear guidelines, quality checks, active learning, and thorough annotator training – you set up your data annotation project for success. These practices help ensure that you’re not just churning out labels, but creating high-quality training data that will genuinely teach your AI models the right lessons.

While data annotation is indispensable for building AI models, it’s not without difficulties. Anyone who has run a large annotation project can attest that it can be a painstaking and complex process, with several common challenges arising along the way. In this section, we’ll discuss some of the key challenges and briefly mention ways to address them.

One of the biggest challenges in data annotation is the sheer cost in terms of time and money. High-quality annotation is labor-intensive. If you have a large dataset – imagine millions of images or pages of text – annotating every instance can take a very long time if done manually, or it can cost a lot if you pay people to do it.

The more complex the data or the task, the more time each item takes to annotate. Labeling a simple image with one object might be quick, but segmenting every pixel in high-resolution images or transcribing messy audio can be slow work. It’s not unheard of for companies to spend weeks or months on annotation before they ever get to train a model. This time investment can delay projects significantly.

From a cost perspective, if you need expert annotators (say, radiologists for medical data or lawyers for legal document annotation), the cost per hour is high. Even with general annotators or crowdworkers, when you multiply a modest per-label cost by hundreds of thousands of labels, the total can be substantial. Startups and researchers often find the annotation phase to be a budget bottleneck.

What can be done?

To mitigate time and cost issues, organizations can leverage some of the techniques we discussed earlier:

A combination of these approaches can substantially reduce both the time and money spent on annotation. Many organizations also adopt an iterative approach – label some data, train a model, see how it does, then label more specifically in areas where the model is struggling, rather than labeling everything upfront.

Another major challenge is that annotation can be subjective, and human annotators may introduce biases or inconsistencies into the data. Not all data has a clear-cut ground truth. If you ask five people to annotate the sentiment of a given movie review, you might get some saying neutral, some saying positive, depending on their interpretation. Or if labeling images as “offensive content” vs “benign”, different annotators might have different personal thresholds for what counts as offensive.

This subjectivity can lead to inconsistent labels across the dataset. If one portion of your dataset was labeled by a person with one interpretation and another portion by someone with a different interpretation, the model will get mixed signals. It might then perform poorly or pick up one person’s bias as part of the “pattern”.

Bias can also come from cultural or gender perspectives. For example, an annotator’s own background might influence how they annotate text about certain topics (what’s considered sarcastic or not, or what emotions are inferred). In image annotation, there have been cases where annotators bring stereotypes (e.g., associating certain tools or activities with a particular gender in image captions).

How to reduce subjectivity and bias?

Ultimately, some level of subjectivity might remain (especially for tasks like sentiment, intent, or anything requiring interpretation). The goal is to minimize it so the model isn’t confused or, worse, learning a bias. If done well, consistent annotations lead to a model that makes consistent predictions.

Data annotation often involves handling sensitive data, which can raise privacy concerns. If your data includes personal information – say, images of people, medical records, user chat logs – sending that data to a pool of annotators (whether in-house, outsourced, or crowdsourced) can violate privacy regulations or company policies if not done carefully.

Consider industries like healthcare or finance. There are strict laws (like HIPAA in the US for health data, GDPR in Europe for personal data) that might restrict how data can be shared or mandate that certain identifiers be removed. If annotators are not employees but contractors or crowdworkers, ensuring they handle data confidentially is a challenge.

Also, annotation itself can create privacy issues. For example, if you’re annotating images for face recognition and drawing bounding boxes around faces, you’re essentially highlighting and extracting personal data (someone’s face is personal data). Or annotating surveillance footage for “suspicious behavior” could be sensitive from a surveillance ethics perspective.

How to address privacy concerns?

Before sending data out for annotation, remove or obfuscate personal identifiers when possible. For text, this might mean redacting names or sensitive details (tools can automatically mask things like phone numbers or social security numbers in documents). For images, perhaps blur faces or license plates if they are not the focus of what needs labeling (though if the face is what you need labeled, you then need a secure way to handle that).

Use annotation platforms that offer secure data handling – for example, platforms that don’t allow annotators to download data, that log all access, and that can be configured to meet compliance standards. Some enterprise tools allow you to restrict that only annotators who have signed specific NDAs and are in certain regions can access the data.

For very sensitive data, some organizations choose to do annotation in-house or with a trusted partner like Digital Bricks rather than a public crowd. The annotators might be employees or tightly managed contractors with background checks. The data might never leave the company’s own network. This can be slower or more costly, but sometimes it’s the only acceptable way due to regulations.

Ensure that any third-party service or crowd platform you use has proper agreements in place about data usage. The data should typically remain your property, and the service providers should commit to not storing or using it beyond the task. Also, all annotators can be required to sign confidentiality agreements (some platforms handle that with a click-wrap agreement for their workers).

Keep track of who labeled what and when. If there's ever a privacy incident or a need to demonstrate compliance, having logs helps. Some tools will automatically log which user account accessed which data.

Always evaluate the sensitivity of your data before starting an annotation project. The more sensitive, the more you should lean towards closed, secure annotation workflows. Digital Bricks often assists clients in setting up on-premise annotation solutions or vetted annotator teams when data privacy is a paramount concern – ensuring that organizations can still get the labels they need without exposing data improperly.

By anticipating and addressing these challenges – cost/time, subjectivity/bias, and privacy – organizations can plan their data annotation projects more effectively and avoid common pitfalls. It’s also a reminder that data annotation isn’t just a trivial task before the “real work” of modeling; it requires thoughtful management and resources of its own. The good news is, with the right strategy (and a good AI development partner, if needed), these challenges are surmountable.

To appreciate why data annotation is so vital, it helps to see how it’s used in real-world AI applications. Virtually every AI system that interacts with the real world relies on annotated data. Here are a few notable domains and examples:

In the realm of self-driving cars and advanced driver assistance systems, data annotation is the foundation for teaching AI to interpret the world on the road. An autonomous vehicle is equipped with cameras, LiDAR, radar, and other sensors, producing vast amounts of raw data about the car’s surroundings. To make sense of this data (especially camera images and videos), these systems are trained on annotated examples.

For example, fleets of vehicles or human data collectors gather video footage of driving. Annotators then label these video frames: drawing bounding boxes around pedestrians, other vehicles, lane markings, traffic signs, traffic lights, bicycles, animals, and all sorts of objects a car might encounter. They might also use segmentation to mark out drivable road area vs. sidewalk vs. buildings. By feeding millions of such labeled images into a deep learning model, the car’s AI “learns” how to recognize a stop sign in various lighting conditions, how to detect a pedestrian who’s about to step off the curb, or how to tell where the lane lines are even when they’re faded.

Bounding boxes that follow vehicles from frame to frame (object tracking) allow the system to gauge how objects move, which is crucial for predicting trajectories (will that cyclist veer into my lane?). Landmark annotations might be used for things like identifying key points on pedestrians to estimate their pose (are they looking toward the car or away? Are their legs moving as if to walk?).

Without accurate data annotation, a self-driving car simply wouldn’t know what it’s seeing. The phrase often used is “garbage in, garbage out” – if the training data was poorly labeled (maybe traffic lights were sometimes mislabeled as street lamps), the car could make dangerous mistakes. That’s why companies like Waymo, Tesla, Cruise, and others invest heavily in large teams or services for data labeling. In fact, the autonomous vehicle industry has pushed the envelope on annotation tools and techniques, given the massive scale of data involved.

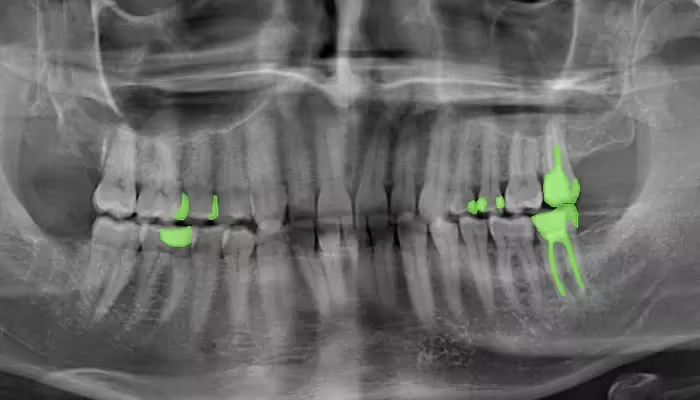

AI is making significant inroads in healthcare, and a prime example is in medical imaging. Here, data annotation typically means having medical experts label images such as X-rays, MRIs, CT scans, or pathology slides to identify abnormalities or regions of interest. These annotated images become the training data for algorithms that aim to detect diseases.

For instance, consider training an AI to detect early signs of cancer in radiology images. A radiologist might go through hundreds of lung CT scans and carefully outline (segmentation annotation) any tumors or suspicious lesions in each image. They might also add labels like “malignant” or “benign” if known, or classify the tumor by type. Similarly, in retinal scans for diabetic retinopathy detection, ophthalmologists label areas with microaneurysms or hemorrhages.

Another example is annotating cell images in pathology: coloring or circling cells that are cancerous vs. healthy, so a model can later detect cancer in new samples automatically. In some cases, annotation might be as simple as image-level labels (e.g., this whole slide has cancer vs. not), but often the nuanced approach (outlining exact regions) yields better training data.

The impact of this is huge – an AI model that learns from these annotations can then assist doctors by highlighting areas of concern on new scans, effectively acting as a second pair of eyes. This can lead to earlier detection of diseases like cancer, which in turn leads to better patient outcomes. It can also help reduce the workload on medical professionals by filtering out obvious normal cases and focusing their attention on the tricky ones.

However, it’s worth noting that due to the need for domain expertise, medical data annotation is challenging and expensive (radiologists’ time is costly!). This is again where methods like active learning can help – get the most value out of each expert-labeled instance. Also, privacy is a big concern here, so often this work has to be done under strict compliance conditions.

If you’ve interacted with a customer support chatbot (whether on a retail website, a bank, or any service), you’re seeing an NLP application that likely benefited from extensive text annotation. These chatbots need to understand what users are asking or saying in order to respond appropriately – this involves training them on annotated conversation data.

A key part of building a chatbot is intent recognition: determining what the user’s intention is. For example, if a user types "I need to reset my password," the bot should recognize the intent as something like “Password Reset.” If the user says "My internet is down again, I'm really frustrated," the bot might classify that as a “Service Outage Inquiry” plus a high frustration sentiment. How do they learn this? Annotators label hundreds or thousands of real or simulated chat transcripts, marking the intent of each customer utterance (and sometimes the entity slots too – e.g., if someone says "I'm calling about order 12345", the order number is an entity to capture).

Besides intent, text annotation for chatbots can include labeling utterances with the correct response or action for the bot, tagging parts of the text that correspond to important information (like dates, names, product types), and annotating sentiment or tone (so the bot can maybe route angry customers to a human agent faster).

For voice-based assistants, it’s similar but with voice-to-text transcription in the pipeline. If you say "Check my account balance," the system converts that to text, and then NLP models (trained on annotated data) interpret it as an intent to get account balance.

By training on these annotated dialogues, the chatbot’s language understanding model gets better at matching varying phrasings to the right intent. Users might say the same thing dozens of different ways (“I can’t log in,” “login isn’t working,” “having trouble signing in,” etc.), and the model needs to recognize them all as essentially the same ask. Only a richly annotated dataset covering those variations makes that possible.

The result of good annotation here is a chatbot that can handle a wide range of queries and still provide useful answers, improving customer experience and reducing load on human support. Digital Bricks, as an AI development partner, often helps organizations prepare these training datasets – for example, by taking conversation logs and annotating them – as well as building the chatbot models themselves.

We touched on sentiment annotation earlier, but to reiterate in an application context: many companies use AI to gauge public or customer sentiment at scale. This could be for brand monitoring, product feedback, or even market research.

Imagine a company launching a new product and wanting to know how customers feel about it. Rather than manually reading thousands of tweets, reviews, and blog comments, they can train an AI to do sentiment analysis. The training data for such a model comes from text that humans have annotated for sentiment.

For example, annotators might take a random sample of tweets about the product and label each as Positive, Negative, or Neutral (or even a finer scale like Very Positive, Slightly Positive, etc.). A tweet saying "Absolutely loving the new phone’s camera!" is positive, while "This update is terrible, my app keeps crashing" is negative. Neutral might be something like "The phone comes in blue and black colors" (just a factual statement).

Once you have a good amount of labeled data, you train a model that can then ingest the firehose of new tweets in real time and output sentiment scores. Companies use this to create dashboards – e.g., what’s the overall sentiment today vs last week? Did sentiment dip after that bug was reported? It’s like having a pulse on public opinion continuously.

Another scenario is analyzing customer reviews or support tickets. Sentiment analysis can automatically flag negative reviews or urgent-sounding support emails so the company can respond faster to unhappy customers. It can also summarize trends (e.g., "many people are complaining about battery life" if that phrase shows up frequently in negative contexts).

The key point is that without the initial annotation of sentiment, the model wouldn’t know what words or phrases correlate with positive or negative feelings. Humans need to give it those examples (sometimes with notes about sarcasm or context, since “Great, just great” might actually be negative in context). Some sentiment models also incorporate aspect-based sentiment – where you label not just overall sentiment but sentiment toward specific aspects (for instance, in a restaurant review, food might be good but service bad, and annotations reflect that).

Digital Bricks often helps organizations by setting up sentiment analysis pipelines that are tailored to their domain (like finance or hospitality), which usually involves custom annotation because sentiment expressions can be domain-specific (what’s positive in one context might not be in another).

These examples – self-driving cars, healthcare diagnostics, chatbots, and sentiment analysis – are just a few of the many areas where data annotation powers AI. Other notable mentions include: retail (product image tagging, inventory tracking), finance (fraud detection models trained on labeled transaction data), voice assistants (wake word detection data annotation), recommendation systems (labeling content for training ranking algorithms), and more. In all cases, the pattern is the same: we need to teach AI models using examples, and that teaching process is supervised learning through annotated data.

Data annotation is the process of labeling data to make it recognizable and useful for machine learning models. It might sound straightforward, but it’s truly the unsung hero of artificial intelligence development. Without annotated data, most of the AI applications we see today – from image recognition to language translation – simply wouldn’t work. High-quality labeled data is what allows AI to bridge the gap from raw information to meaningful insights.

In this article, we covered what data annotation entails and why it’s so critical. We explored various types (image, text, audio, video) and saw that each domain has its own specialized techniques for annotation. We discussed different approaches to performing annotation, whether by human experts, machines, or a combination of both, and touched on using crowdsourcing to scale up when needed. We also reviewed some leading tools that organizations use to manage annotation projects efficiently. Importantly, we went through best practices – from having clear guidelines to leveraging active learning – which ensure that the annotation process yields high-quality data. We didn’t shy away from the challenges either: it can be time-consuming, sometimes subjective, and raises privacy considerations. But with careful planning (and often with the help of an experienced AI partner), these challenges can be overcome.

Ultimately, data annotation serves as the foundation for building intelligent, reliable AI systems. The performance and accuracy of any machine learning model are directly linked to the quality of the data it was trained on. In other words, if you want a great AI model, you need great annotated data. It’s a step in the AI development pipeline that you cannot afford to rush or overlook. As the saying goes, “You get out what you put in” – investing in good annotation will pay off in a model that performs well in the real world.

For organizations embarking on AI projects, partnering with an AI development expert like Digital Bricks can make a huge difference in this stage. We help ensure that your training data is not only plentiful, but also precise and representative of the problem you’re trying to solve. From setting up the right annotation workflows and tools to providing skilled annotators or integrating active learning, Digital Bricks supports companies throughout their AI journey. By focusing on data annotation done right, we help you build AI solutions that are smarter, more accurate, and more aligned with your business goals.

In conclusion, while it may be labor-intensive, data annotation is an indispensable part of creating AI that truly learns from and reflects the nuances of the real world. It’s how AI learns from labeled data – and as AI continues to expand into new domains, the demand for quality annotated data (and the need for effective strategies to produce it) will only grow. Embracing the best practices and perhaps leaning on an AI partner for this phase will set you up for success in the exciting developments ahead.

The terms data annotation and data labeling are closely related and often used interchangeably, but there is a subtle difference in connotation. Data labeling usually refers to the act of assigning labels or tags to data samples – for example, saying an image is "cat" vs "dog," or a piece of text is "spam" vs "not spam." It’s often a more straightforward classification or tagging process. Data annotation is a broader term that can encompass labeling as well as more complex markings or notes on the data. For instance, drawing bounding boxes on an image, highlighting entities in a sentence, or adding metadata notes are all forms of annotation beyond just a simple label. In essence, all labeled data is annotated data, but annotation can include richer information than a single label. In practice, though, people might use either term to describe preparing data for AI. The key is that both involve adding informative annotations to raw data so that it can be used for training models. Whether it’s a category label or a detailed segmentation mask, it’s contributing supervised information that the model will learn from.

High-quality data annotation is critical because machine learning models learn exactly what you teach them. If your training data is incorrectly or inconsistently labeled, your model will learn the wrong patterns and produce bad predictions. Think of annotated data as the ground truth or the teacher’s answer key for the model – if the answer key has errors, the “student” (AI) will be confused or misled. For example, if some cats in your dataset are mistakenly labeled as dogs, the model might start to think pointy ears or certain fur patterns aren’t exclusively cat features and could misclassify dogs and cats in the real world. Conversely, when annotations are accurate and consistent, the model can reach its full performance potential because it’s learning from correct examples. High-quality labels also help in reducing bias – if done thoughtfully, they ensure the model isn’t picking up on human biases or noise. In short, the better your annotated data, the better (more robust, more accurate) your AI model will be. Many companies that faced underperforming models discovered the root cause was poor training data. So investing in quality annotation up front saves a lot of pain later and can be the difference between an AI project that succeeds and one that fails.

Ensuring consistency across annotators comes down to process and communication. Here are several key steps:

Develop thorough annotation guidelines: This document should define each label and annotation procedure clearly, with plenty of examples. It should anticipate common ambiguities and state how to handle them. All annotators should refer to this as their bible.

Train annotators and do calibration rounds: Before the main annotation begins, have all annotators label the same sample batch and then compare results. Discuss any differences and clarify the guidelines accordingly. This gets everyone on the same page.

Use overlap and consensus: Periodically, assign the same item to multiple annotators (even after training). If they disagree, that’s a flag that something might be unclear in guidelines or someone is diverging. Address it promptly – perhaps through additional training or refining rules.

Regular QA checks: As the project goes on, conduct quality audits of each annotator’s work. If one annotator is found to consistently deviate or make errors, you can correct them with feedback or have them redo certain parts.

Communication channel: Maintain an open channel (like a chat or weekly meeting) where annotators can ask questions and get clarifications when they encounter uncertain cases. It’s better they ask than guess. Share those Q&As with the whole team so everyone learns from one person’s question.

Software enforcement: Some annotation tools allow setting constraints (for example, you might enforce that every image must have at most one label of a certain kind, or forms that must be filled). While these can’t enforce conceptual consistency, they can ensure structural consistency (no missing labels, etc.).

By implementing these practices, companies ensure that one annotator’s work is consistent with another’s, making the overall dataset uniformly labeled. Consistency is crucial for the model to generalize well, because if each annotator had their own interpretation, the data will have contradictory signals.

Yes, data annotation can be outsourced safely, but it requires safeguards to protect data privacy and quality. When outsourcing, you’re typically engaging either a specialized data labeling service or a crowdsourcing platform. To do this safely:

Choose reputable partners: If using a data annotation service company (like those specializing in labeling), pick one with a track record, good security practices, and, ideally, relevant certifications (some might have ISO certifications for data security, for example). They should have no qualms about signing NDAs and contracts that specify data handling procedures.

Data privacy measures: Ensure that any personal or sensitive information is handled according to applicable laws. This might mean anonymizing data before sending out (removing names, blurring faces), or using a platform that allows you to restrict annotators by region (to keep data in certain jurisdictions). Some companies opt for on-site outsourcing – i.e., the annotators from the vendor work in the company’s own facilities or VPN for added security.

Platform security: If using a crowd platform like Mechanical Turk, understand that you’re giving data to unknown individuals. For non-sensitive data, this might be fine. For sensitive data, consider using platforms that offer a vetted pool of workers or a secure environment. Amazon SageMaker Ground Truth, for example, has options to use vendor workforces that operate under stricter agreements than the open Mechanical Turk crowd.

Contracts and compliance: Include clauses in your contract about data usage – e.g., the annotator or service is only allowed to use the data for the purposes of annotation, cannot retain it, cannot resell or share it, etc. If it’s crowd, the platform’s terms of service should cover that workers don’t have rights over the data. If your data falls under regulations like GDPR, make sure the vendor is compliant as a data processor under those rules.

Quality control: Outsourcing doesn’t mean you never look at the data until it’s done. Maintain a quality feedback loop. Usually, you start with a small batch, review it thoroughly, give feedback, then scale up once you’re confident the team understands the task. Continue spot-checking throughout.

As an AI partner, we often take on the heavy lifting of data annotation for clients. In those cases, we implement strict data security measures (secure servers, controlled access) and often work with a trusted network of annotators who have proven quality. This gives clients the benefit of outsourcing (scalability, not tying up their internal team’s time) while maintaining peace of mind about data safety and label quality.

In summary, outsourcing can be done safely if you treat data security seriously and work closely with the provider to enforce it. Many companies successfully outsource annotation, especially when they lack the internal resources for it. It’s just about finding the right balance of trust and verification.

Active learning improves the data annotation process by making it more efficient and targeted. Instead of randomly choosing data points to label, active learning algorithms help identify which data points, if labeled, would yield the most benefit to the model’s learning.

Imagine you have 100,000 unlabeled examples but only resources to label 5,000. With random selection, those 5,000 might include a lot of redundant examples (things the model would have figured out anyway) and miss some critical rare cases. Active learning, on the other hand, tries to pick out the most informative examples – typically those that the current model is uncertain about or that would help it best discriminate between classes.

By focusing on these challenging or informative cases:

From a process standpoint, active learning often runs in iterations: label some data, train model, use model to pick more data, label that, retrain, and so on. This tight feedback loop between model and annotator can accelerate the training cycle.

Overall, active learning can reduce the total volume of data that needs to be annotated to achieve a given model performance. This is particularly valuable when annotation is expensive. It’s like a teacher giving a student harder and harder problems to maximize their learning, rather than making them do a bunch of problems they already know how to solve.

There are several excellent free and open-source tools available for data annotation. Some popular ones include:

Many of these free tools are widely used in academic projects and small companies due to budget constraints. They might not have all the bells and whistles (like sophisticated project management or automatic labeling) that some commercial platforms have, but they often get the job done. Plus, being open-source means if you have programming capacity, you can customize them to your needs.

Almost any industry that is adopting AI benefits from data annotation, but some of the most data-hungry and benefited industries include:

Automotive (Autonomous Driving): As mentioned, self-driving car tech heavily relies on annotated images and sensor data (for detecting lanes, cars, pedestrians, traffic signs, etc.). Without annotation, they can’t train the perception systems.

Healthcare: Medical AI for diagnostics (imaging, pathology, patient data analysis) requires annotated medical records and images. For example, labeling MRI scans or annotating medical transcripts for symptom extraction.

Retail and E-commerce: Annotation is used for product recommendation systems (labeling products, user behaviors), visual search (annotating product images), and inventory management (object detection in store videos, etc.).

Finance: Fraud detection models are trained on labeled transactions (fraudulent vs legitimate). Document processing (like mortgage documents, invoices) uses annotated examples to learn how to extract key fields. Sentiment analysis on financial news or social media can inform trading — those rely on annotated text data.

Manufacturing and Robotics: In manufacturing, AI systems might use computer vision to inspect products (needs annotated images of defects vs good products for training). Robots in warehouses are trained with annotated data to recognize objects or navigate.

Marketing and Customer Experience: Sentiment analysis (as discussed) and intent analysis on customer feedback require annotation. Also, content moderation (labeling content as inappropriate or not) is huge for social media platforms.

Security and Defense: Surveillance systems use annotated video for identifying people or objects of interest. Cybersecurity AI might use labeled examples of malware vs benign code.

Agriculture: Drones and remote sensing images annotated for crop health, weed detection, etc., help train models for precision agriculture.

Education: EdTech uses annotated data for things like automated grading (labeled examples of correct vs incorrect answers), or for educational content tagging and recommendation.

General Tech (virtual assistants, search engines): Massive data annotation efforts go into virtual assistants (like Siri, Alexa understanding queries) and improving search algorithms (evaluating relevance of search results via annotated judgments).

In essence, any sector that is deploying AI models will have some need for annotated data. But the intensity is higher in those where AI is directly interacting with unstructured data (images, text, audio) and making critical decisions. In such sectors, high-quality training data is a competitive advantage, so they invest a lot in annotation. As AI adoption grows, even traditionally less tech-heavy industries (like legal, real estate, or logistics) are starting to use annotated data to train domain-specific models.

Crowdsourcing is generally best for simple or well-defined tasks, and it can be less reliable for very complex tasks. The crowd tends to be non-expert, so if your annotation requires specialized knowledge or careful reasoning, you might not get high-quality results from an open crowd.

For example, crowdsourcing works great for tasks like:

These are tasks that can be quickly understood and executed by a broad audience with minimal training.

However, for tasks like:

In such cases, relying on a random pool of crowdworkers could yield poor results. They might try, but without the background knowledge or the patience to do it correctly, the quality will suffer. Even if a few crowdworkers are capable, you might not have enough of them in the crowd to handle your entire dataset reliably.

If you must use crowdsourcing for a complex task, some mitigations:

Often for complex tasks, a better approach is targeted outsourcing – hire annotators with the needed expertise (e.g., a panel of medical students or certified radiologists for medical data) rather than an anonymous crowd. Or use a managed service where the provider ensures the annotators are trained for the complexity.

In summary, crowdsourcing shines for scale and speed, but you trade off some quality and expertise. Use it for what it’s good for: simpler tasks or as a first pass, and rely on experts or smaller in-house teams for the complex, high-stakes annotations.

Semantic segmentation is an image annotation technique where each pixel in an image is assigned a label that corresponds to an object or category. The goal is to essentially partition the image into regions of different classes. For example, in a photo taken on a street, you might label each pixel as belonging to one of several classes: road, sidewalk, building, pedestrian, vehicle, sky, etc. The result, if you were to visualize it, looks like an outlined or color-coded image where every pixel is covered by some label mask.

This is different from just drawing boxes or classifying the whole image:

Semantic segmentation gives a fine-grained understanding. It tells a model, “this pixel is part of a pedestrian, that neighboring pixel is part of the road.” This allows the model to learn precise boundaries and context. In the context of training AI:

Performing semantic segmentation manually is quite labor-intensive (imagine tracing out every object’s outline perfectly). That’s why sometimes tools help by auto-filling regions or using AI-assisted drawing. But once done, it provides one of the richest forms of annotation for images.

To connect to something we discussed: semantic segmentation is an example where “data annotation” involves more than a simple label – hence it’s a broader term than just “labeling.” It’s a detailed annotation.

Annotation errors in the training data can adversely affect machine learning models in a few ways:

Bias and Mislearning: If certain errors are systematic, the model might learn those mistakes as if they were correct. For example, say in a animal classifier dataset, 5% of the images of wolves were mistakenly labeled as “dog.” The model might then get confused about the features that distinguish dogs and wolves, potentially learning that some wolf-like traits are still “dog.” The result is lower accuracy, especially on cases similar to those mislabeled ones.

Noise and Reduced Performance: Random annotation errors add noise to the training data. The model then has to essentially fit a pattern that isn’t truly there, which can reduce its overall predictive performance. It’s learning from a less clear signal because some portion of the examples are wrong. This typically means you need more data to overcome the noise, or performance will plateau lower than it could have.

Impact on Evaluation: If errors are also present in the test/validation set (and they often are if they come from the same annotation process), you might get an inaccurate measure of the model’s performance. Sometimes a model predicts correctly but gets penalized because the “ground truth” was actually wrong. This could mislead you during development about which model is better.

Real-world consequences: In critical applications (like medical or automotive), annotation errors could lead to models making dangerous decisions. E.g., if some stop signs in the training set were not annotated and thus treated as background, a self-driving car model might not detect stop signs as reliably. Or a medical AI trained on some scans where a tumor was missed by the annotator might not flag similar tumors in new scans.

Bias Amplification: If certain types of inputs are more prone to annotation error (maybe images of a certain demographic are mis-labeled more often due to annotator bias), the model can inherit that bias and even amplify it by generalizing incorrectly.

The extent of impact depends on how frequent and severe the annotation errors are. Models are somewhat robust to a small amount of noise – having a few mislabeled examples usually won’t ruin a model, especially deep learning models that can often handle label noise up to a point. But if the error rate is high or if the errors have a pattern, it can significantly degrade model performance.

That’s why quality control in annotation is important. It’s also why sometimes techniques like training with label noise or using approaches that can detect outliers are employed. In fact, there’s a whole area of research on learning with noisy labels, which tries to make models more resilient to annotation errors (using algorithms that can down-weight or correct suspected mislabeled examples).

In practice, the best solution is to try to prevent annotation errors through good processes, and to do sanity checks on your data. For instance, if you’re training a cat/dog classifier and the model is weirdly classifying obvious things wrong, inspect those cases – you might find the annotation was wrong and that’s confusing the model. Correct it, and retrain. In summary, bad labels equal bad model predictions, so catching and fixing annotation errors is a crucial step in building reliable AI.

By following these guidelines and understanding the nuances of data annotation, organizations can better prepare their data and, in turn, develop more accurate and effective AI models. Data annotation may be a meticulous process, but it’s absolutely worth the effort when you see the results in a well-performing AI solution. Remember, if you need help along the way, Digital Bricks is here as your AI development partner – from data annotation to deployment – to ensure your AI projects are built on a solid foundation.