AI Progress in 2025: What’s Happened and What’s Next

.avif)

.avif)

The first half of 2025 has seen unprecedented strides in artificial intelligence, from record-breaking model sizes to new ways AI agents work together. The pace of change has been breakneck, major tech players and emerging innovators alike are pushing the boundaries of what AI can do. In just six months, we’ve witnessed AI models growing dramatically in capability, AI agents taking center stage in software, hardware leaps powering these systems, and even governments rolling out bold AI education initiatives. This article takes a deep dive into the key developments of early 2025, and then looks ahead to what the second half of the year might hold.

One clear trend in 2025 is that AI models have exploded in scale and capability. The industry’s mantra seems to be “bigger is better” – measured in the billions or trillions of parameters that make up these neural networks. OpenAI’s GPT-4, for instance, greatly surpasses its 2020-era predecessor (GPT-3 had 175 billion parameters) by an order of magnitude in complexity – though OpenAI hasn’t disclosed GPT-4’s size, experts believe it’s in the trillions of parameters. This massive scale allows GPT-4 to exhibit more sophisticated reasoning and even multimodal abilities (like understanding images), demonstrating how rapid the progress has been since GPT-3’s debut.

Other tech giants are keeping pace. Google launched its Gemini in late 2023 and has iterated quickly; by March 2025 Google announced Gemini 2.5, its “most intelligent model yet” with enhanced reasoning and coding skills. Gemini is a family of models spanning from lightweight versions for mobile devices to Gemini Ultra, a top-tier model that reportedly outperformed OpenAI’s GPT-4 and Anthropic’s Claude 2 on several benchmarks. Notably, Gemini was designed from the ground up to be multimodal – able to handle text, images, audio, and even video input jointly. This points to a future where AI is not limited to text chatbots, but can see and hear as humans do. Google DeepMind’s CEO Demis Hassabis even suggested Gemini’s strategic reasoning would combine techniques from DeepMind’s AlphaGo, striving to “trump ChatGPT” in capability.

.avif)

Anthropic, another major AI lab, has also been scaling up its Claude models. Their Claude 2, released in mid-2024, already impressed with a whopping 100,000 token (75,000 word) context window – meaning it can remember and process long conversations or documents. In 2025, Anthropic introduced Claude 4 (Claude “Opus” and “Sonnet” models), touting improved reasoning and coding abilities. While Anthropic keeps exact parameter counts secret, one research paper estimated Claude’s size to be on the order of GPT-3 (hundreds of billions of parameters), and rumors suggest the next-gen Claude 3 could approach 500 billion parameters, nearly 3× Claude 2’s size. In fact, Anthropic has revealed plans for a “frontier model” called Claude-Next that would be 10× more capable than today’s most powerful AI. Training such a model is estimated to require on the order of 10^25 FLOPs (floating-point operations) – an astronomical amount of compute that would likely involve tens of thousands of GPU chips running for months. This ambition gives a sense of how far the scaling race might go; Anthropic projects spending $1+ billion to reach that goal by 2025/26.

It’s not just the closed-source models getting bigger – open-source AI has made leaps as well. In 2023, Meta (Facebook) released LLaMA 2, an open model up to 70 billion parameters, free for research and commercial use. Not to be outdone, the UAE’s Technology Innovation Institute (TII) open-sourced Falcon 180B in late 2023, a 180-billion-parameter model that became the largest openly available LLM at that time. Falcon 180B’s release demonstrated that even governments and smaller players can contribute to cutting-edge AI development, not just the Silicon Valley titans. The open-model community in early 2025 remains vibrant, with various projects fine-tuning and distilling these large models for wider use. This open availability is important because it “democratizes” AI research – it allows universities and companies in regions like the Middle East, which may not have their own GPT-4, to experiment with advanced AI on locally hosted models.

.avif)

Model scales have reached the stratosphere in 2025. We now have numerous AI systems operating in the 50B–500B parameter range, and some (like OpenAI and Google’s flagships) likely beyond that. These ever-larger models generally exhibit better performance, handle more complex tasks, and even integrate modalities (text, vision, audio) in one AI. However, the pursuit of massive scale also raises practical challenges: these models demand enormous computing power to train (and significant $$$ investment), and their sheer size can make them costly to deploy. This has spurred intense focus on optimization techniques and new hardware – which we will discuss later. But first, let’s look at how AI agents evolved in 2025, because raw intelligence isn’t useful unless it’s applied effectively.

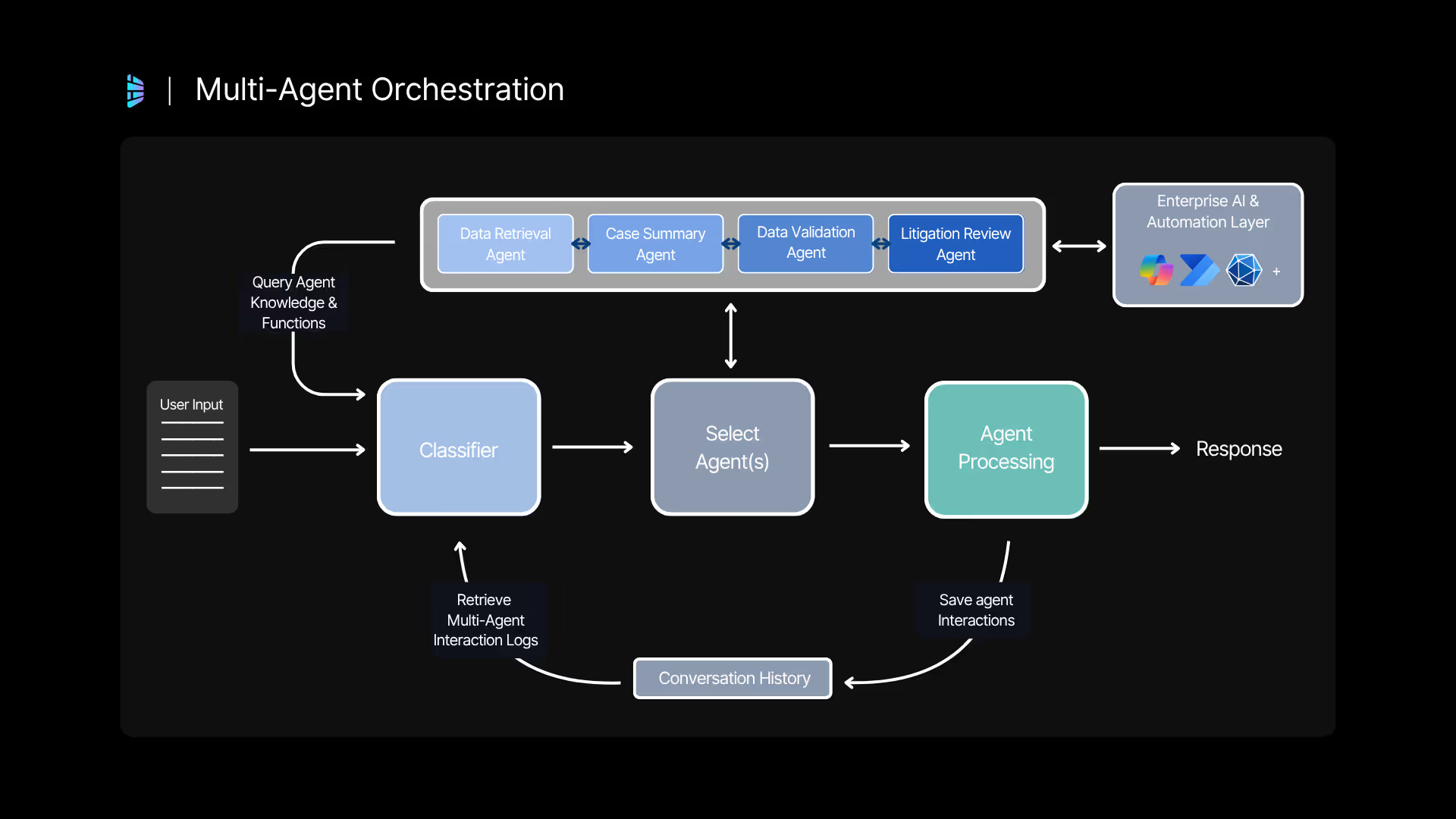

Beyond just raw model size, a major storyline of early 2025 is how AI systems are being used as autonomous agents. Rather than simply chatting with a single AI, developers are now orchestrating multiple AI agents that can communicate, cooperate, and perform complex tasks on our behalf. This is often referred to as “agentic AI,” and it’s becoming a central focus in the AI landscape.

Multi-agent orchestration allows one AI agent to delegate subtasks to other specialized agents, or for agents to negotiate and work together towards a goal. For example, imagine a customer service workflow: one agent could summarize a user’s query, another agent could look up account information, and a third could draft a response – all autonomously, in a fraction of the time a human team might take. Such scenarios are driving interest in frameworks for agents to talk to each other and to external tools.

To enable this, companies have introduced protocols for standardizing how AI agents interact. Anthropic pioneered the Model Context Protocol (MCP) in late 2024, which provides a universal way for AI models to connect with external tools, databases, and APIs. You can think of MCP as a kind of “USB-C for AI” – a single standard interface that lets any AI model access plugins and resources outside its own training data. This was a big breakthrough because it solved the “many models to many tools” integration problem. With MCP, an AI agent can fetch live information, call a computation, or save data simply through standardized protocol calls, rather than each AI needing custom integration code. In practical terms, MCP lets AI agents use tools like web browsers, databases, or code interpreters as extensions of their own capabilities. Anthropic’s Claude, for instance, can use MCP to query databases or execute code, which turns a chatbot into something closer to a software agent that acts in the world.

.avif)

If MCP equips an AI to use tools, another new standard equips AIs to talk to each other. In April 2025, Google announced Agent Communication Protocol “A2A” (Agent-to-Agent) as an open framework for AI-to-AI dialogue. The idea of A2A is that any agent following the protocol can send messages, share knowledge, and request help from any other A2A-compliant agent – even if they were built by different vendors or in different ecosystems. Over 50 organizations joined Google in supporting A2A right from launch (including major software firms and consultancies), underscoring the industry’s recognition that no single agent will do it all. A2A focuses on enabling secure, structured communication between agents, with features like authentication, real-time streaming, and negotiation of each agent’s capabilities. Crucially, Google designed A2A to be vendor-neutral and built on web standards (HTTP, JSON-RPC, etc.), so that it’s easy to implement across platforms. In other words, A2A is trying to be the lingua franca for a future “internet of agents.”

These protocols complement each other. As Google’s announcement noted, A2A is meant to complement Anthropic’s MCP. An agent might use MCP to access tools and data, then use A2A to coordinate with another agent that has different knowledge or authority. For example, a finance-report-generating agent might use MCP to pull data from a database, then via A2A ask a language-model agent to write a summary of the trends, then ask yet another agent to format that into a slide deck. Early experiments combining these – often using frameworks like LangChain – have shown promising results, essentially chaining GPT-style models together to solve tasks step-by-step.

Tech companies have quickly begun baking multi-agent orchestration into their platforms. At Microsoft Build 2025, the company unveiled a new “Connected Agents” feature in its Copilot Studio toolkit, bringing multi-agent workflows to mainstream enterprise software. This feature (in private preview by mid-2025) lets organizations design solutions where multiple AI agents collaborate to complete cross-functional tasks. Microsoft gave examples like an agent that pulls sales data from a CRM system, passes it to another agent that drafts a proposal in Word, then triggers a third agent to schedule meetings in Outlook – all automatically, agent-to-agent, with no human in the loop for the handoffs. The connected agents framework handles the orchestration, security (each agent in Microsoft’s system can have an Entra digital identity and policy constraints), and deployment channels. By June 2025, Microsoft indicated this multi-agent orchestration would enter public preview, showing how fast the concept moved from research into real enterprise products.

.avif)

Why the sudden focus on agent collaboration? It’s driven by the push for autonomy and specialization. A single large model (like ChatGPT) is great general intelligence, but in complex real-world workflows, you often need different systems with different skills. One agent might be great at text analysis, another at interacting with a database, another at user interaction. Or you might simply want them to double-check each other’s work for accuracy and safety. Multi-agent systems promise greater scalability (you can add more agents as needed) and robustness (agents can oversee or critique one another). 2025 has effectively kicked off a new era where AI agents are not lone chatbots but members of a team, capable of interfacing with software systems and with each other.

Another exciting development in early 2025 is how creating and deploying AI agents has become much more accessible to the average tech enthusiast or business user. You no longer need a PhD in machine learning (or a supercomputer) to leverage advanced AI for your own needs – thanks to a wave of “Copilot” tools and no-code or low-code AI development platforms.

.avif)

A prime example is Microsoft’s Copilot Studio, which evolved significantly by 2025. Copilot Studio is essentially a toolkit to let users build their own AI-powered assistants (copilots) and automate workflows with minimal coding. At Build 2025, Microsoft showcased how a product manager or an analyst – not just professional developers – could use Copilot Studio’s interface to compose prompts, connect data sources, and chain multiple AI functions together into a custom agent. This is AI development democratized. For instance, a user could create a custom service desk bot that uses one AI model to triage employee IT requests, then calls another AI to draft an email response and interfaces with a ticketing system API to log the issue – all via drag-and-drop blocks and natural language instructions. The barrier to creating useful AI agents has thus dropped dramatically.

One particularly powerful aspect is integration with the existing Power Platform (low-code apps) and business data. Microsoft’s vision is that every company can have an army of co-pilots tailored to their processes – and built by their own subject-matter experts. To support this, Copilot Studio allows integration of custom or industry-specific models (for example, a fine-tuned model with a company’s proprietary knowledge) through Azure AI services. And once built, these copilots can be deployed through the Microsoft 365 ecosystem (Teams, Office apps, even chat platforms like WhatsApp in the future) with one click. This means an employee could summon a custom AI agent in a Teams chat that is specifically trained on, say, the company’s sales playbook to help prepare a client presentation.

The democratization trend isn’t limited to Microsoft. OpenAI and others have also pushed to make AI more usable by non-experts – OpenAI’s ChatGPT plugins (introduced in 2023) let users augment ChatGPT with third-party tool skills without any coding; by 2025 there’s an expanding marketplace of plugins that effectively function as mini-agents. Likewise, open-source frameworks like LangChain and Flowise enable relatively simple chaining of AI models and tools, lowering the barrier for hobbyists to experiment with agentic AI. And numerous startups are offering “AI agent as a service” platforms where you can configure an agent’s goals and knowledge via a web dashboard.

The net effect is that creating an AI agent or assistant in 2025 feels a lot like building a simple app: much easier than it was a year or two ago. This democratization is important – it means AI isn’t confined to big tech companies. A lone developer, a small startup, or a company’s non-IT department can innovate and tailor AI to their niche problems. We’re seeing an explosion of specialized copilots: lawyers have AI legal research assistants, marketers have AI content copilots, doctors have AI summarizers for medical records, and so on. While there are still challenges (like ensuring these DIY agents are secure and accurate), the empowerment of more people to harness AI is accelerating the technology’s spread across industries.

AI’s rapid rise isn’t just a Silicon Valley story – it’s truly global. A standout development in early 2025 came from the Middle East: the United Arab Emirates (UAE) made a groundbreaking move by making AI education mandatory in schools. In May 2025, the UAE Cabinet approved a curriculum to introduce “Artificial Intelligence” as a core subject from kindergarten through 12th grade in all public schools. Starting in the 2025–26 academic year, every UAE student will learn age-appropriate AI concepts, from basic coding and algorithms in early grades up to machine learning and ethics for older students. This bold initiative – one of the first of its kind globally – is aimed at preparing the next generation for a future where AI will be ubiquitous in the economy and society.

Leaders in the UAE have framed this as a strategic, long-term investment. As His Highness Sheikh Mohammed bin Rashid Al Maktoum (Ruler of Dubai) said, the goal is to equip children “with new skills and capabilities” for a world very different from today. The curriculum will not only teach technical skills like coding or data science, but also focus on AI’s ethical and societal implications, ensuring students grow up with an awareness of issues like bias, privacy, and the responsible use of technology. Importantly, the government is supporting this with teacher training and resources so that educators are prepared to deliver AI lessons effectively. By integrating AI across subjects (for example, using AI tools in science projects or discussing AI in civics class), the UAE aims to produce a workforce and citizenry that is AI-literate and innovation-inclined.

This move is part of a broader pattern of AI leadership in the UAE and the MENA region. The UAE was notably the first country to appoint a Minister of AI (back in 2017), and it has a National AI Strategy that envisions AI contributing 20% of non-oil GDP by 2031. We’ve also seen heavy investments in AI research infrastructure: Abu Dhabi has established itself as a regional AI hub, home to the Advanced Technology Research Council (ATRC) and institutes like TII, which produced the Falcon series of open-source AI models. By embedding AI in education, the UAE is building the human capital to match its investments in technology. As one official put it, they are “determined to become the world’s most prepared nation for AI”, and that starts with youth empowerment.

Other countries in the Middle East are similarly ramping up AI efforts. Saudi Arabia and Qatar have launched national AI initiatives and are pouring resources into AI research (Saudi Arabia, for example, has been reported to acquire high-end NVIDIA GPUs to develop its own large language models). Egypt and Jordan have tech-education programs and incubators focusing on AI startups. The region clearly sees AI as both an economic opportunity and a strategic priority.

Perhaps most striking on the infrastructure front is how the Middle East is addressing the compute power aspect of AI. We’ve discussed how crucial hardware is – and typically, most of the world’s AI supercomputing has been in the US or Europe. But now the Gulf states are building their own. Abu Dhabi’s G42, a technology conglomerate, partnered with U.S. semiconductor firm Cerebras to build a series of AI supercomputers called Condor Galaxy. The first deployments in 2023–24 delivered on the order of 8 exaFLOPs of AI compute each (an exaFLOP is 10^18 operations per second), and more systems are underway. This is notable because they use Cerebras’s unique wafer-scale processors – a non-NVIDIA approach – showing that the region is willing to bet on novel architectures to gain independence in AI compute. By mid-2025, NVIDIA itself has teamed up with G42 to develop advanced data centers in the UAE as part of the region’s AI push. This partnership with the world’s leading AI chipmaker underscores the Middle East’s emergence as an important player in the AI race. In June 2025, NVIDIA and G42 announced plans to provide cloud infrastructure and services that will make cutting-edge NVIDIA AI hardware available in-region, enabling local industries and governments to run frontier AI models without relying on overseas data centers.

The combination of education initiatives, local research talent cultivation, and heavy infrastructure investment means the MENA region could significantly shape AI development in the coming years. At the very least, they are ensuring they won’t be left behind. It’s quite possible that the next big breakthrough or widely-used open-source model could come from a lab in Abu Dhabi or Riyadh. And on the societal side, the UAE’s schoolchildren might become the template that other countries study as they consider bringing AI into classrooms. (Indeed, after the UAE’s announcement, officials in countries like India and Singapore have talked about similar AI curricula to keep their youth competitive.)

None of these AI advances would be possible without the tremendous growth in computing power and specialized hardware that has occurred in parallel. The first half of 2025 has underscored that AI progress is as much about chips and servers as it is about algorithms. The demand for AI-capable hardware is sky-high – and it has driven the likes of NVIDIA to become the world’s hottest semiconductor company.

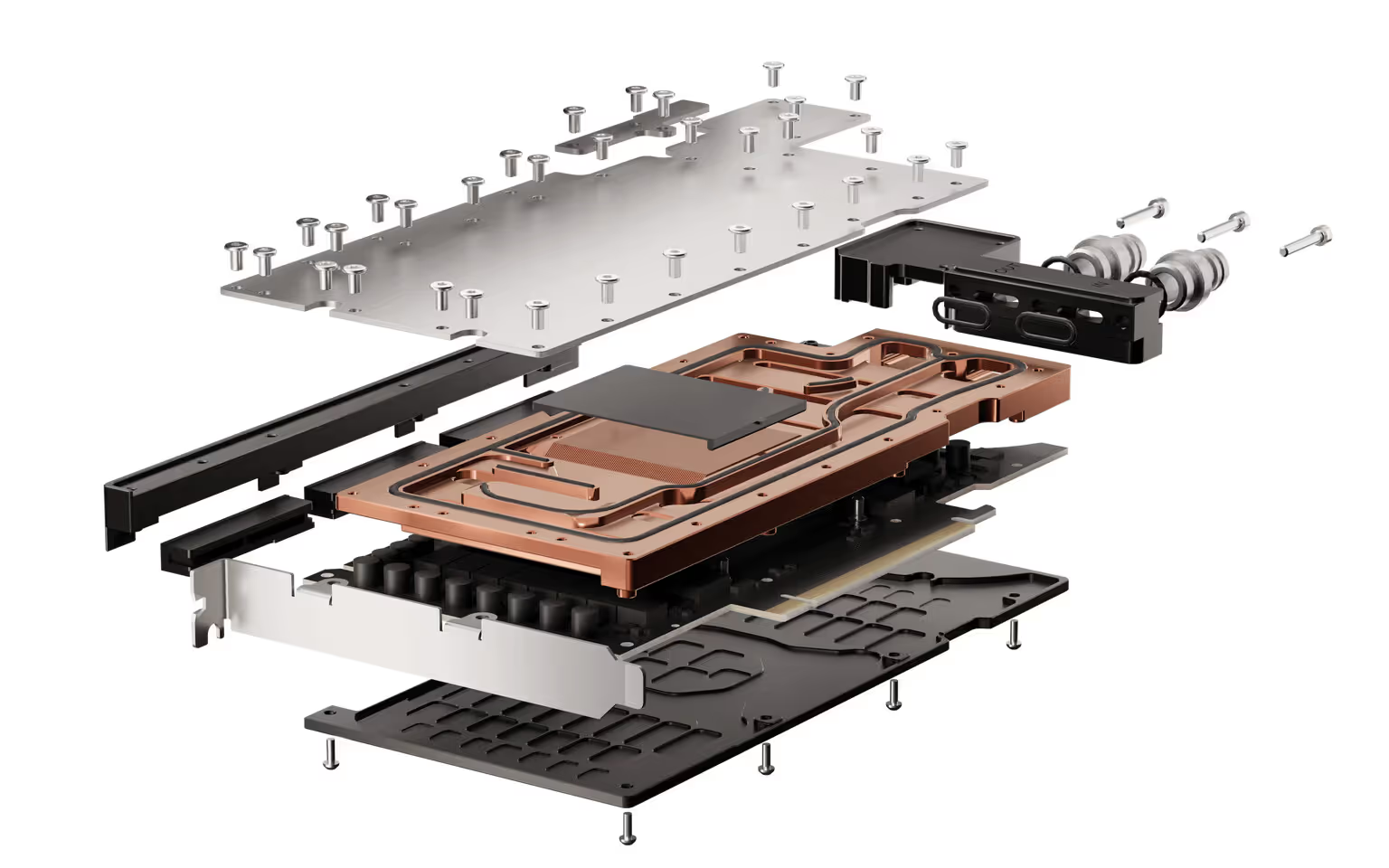

NVIDIA in particular has solidified a near-dominant position with its GPU (graphics processing unit) chips that excel at training large neural networks. Their flagship chip of the moment, the H100 “Hopper” Tensor Core GPU, is a beast: it contains nearly 80 billion transistors, delivers unprecedented speed on matrix calculations, and comes with 80 GB of ultra-fast HBM2e memory on each card. An H100 can be thought of as an “AI supercomputer on a card” – and companies buy them by the thousands. In fact, one industry trend has been tech giants racing to assemble bigger and bigger GPU clusters. OpenAI’s GPT-4 was reportedly trained on a Microsoft-built supercomputer containing tens of thousands of NVIDIA A100/H100 GPUs working in concert. Not to be outdone, Google uses its proprietary TPUs and GPUs in massive pods for Gemini. The result: by 2025, the leading AI firms operate machines that rival the fastest supercomputers ever built (measured in FLOPs), dedicated entirely to AI research.

NVIDIA’s Hopper architecture and the preceding Ampere architecture have empowered the current generation of large models. But NVIDIA isn’t standing still. In 2024 it introduced the Grace Hopper (GH200) Superchip, which actually combines a 72-core CPU with a Hopper GPU on the same module using a fast interconnect, allowing data to flow between CPU and GPU memory much faster. This kind of integration is crucial for giant models that need both general computation (CPU) and heavy ML math (GPU) working efficiently together. The GH200-based systems started rolling out in early 2025 for select customers, and NVIDIA has announced plans for the next GPU architecture (rumored “Blackwell” cores) likely to debut in 2025–26 with even greater performance per watt.

The AI chip race also features competition and newcomers. AMD – the other big name in GPUs – has been pushing its MI300 series accelerators, which combine GPU and CPU technology somewhat akin to NVIDIA’s approach, and aim to offer more memory, hoping to find a niche in big model training. Startups like Graphcore, SambaNova, and Tenstorrent are pitching alternative chip designs (some focused on sparsity, analog compute, or other tricks) that could potentially challenge GPUs. So far, none have unseated NVIDIA’s ecosystem, but the hunger for compute is so vast that many believe the market is not zero-sum – multiple winners could co-exist, especially as AI deployments diversify to the edge devices (phones, cars, IoT).

Another aspect of hardware in 2025 is the global expansion of AI data centers. Cloud providers (AWS, Azure, Google Cloud, etc.) have been frantically building new data centers filled with GPU/TPU racks to meet customer demand for AI services. It’s telling that enterprise GPU cloud rentals have at times been backordered – companies simply can’t get enough of the chips. This has even led to secondary markets and creative solutions like GPU timesharing and better software scheduling of workloads. On the national level, governments are recognizing that access to AI compute is a strategic resource – akin to oil or electricity in past eras. We already discussed how countries like the UAE are partnering to bring in top-tier compute. Meanwhile, geopolitical factors loom: U.S. export controls now restrict the sale of highest-end AI chips to certain countries, leading China to accelerate its own semiconductor programs to keep up in AI. In short, AI has elevated silicon to the forefront of policy and investment.

It’s worth noting that the cost of training cutting-edge models has ballooned due to this hardware intensity. Training GPT-3 in 2020 was estimated at ~$5 million; GPT-4 likely cost in the tens of millions. Future models like Anthropic’s Claude-Next are projected to cost $1 billion in training compute. These numbers were unheard of a few years ago in software R&D. It underscores that AI advancement is deeply intertwined with advances in hardware and the funds to wield them. The upside is that these investments push the envelope of computing: they are driving progress in chip architecture, network infrastructure (intra-datacenter networking capable of terabit speeds to connect all those GPUs), and even cooling and power solutions (AI datacenters draw immense power and often need liquid cooling). All of these innovations will likely have spillover benefits to the broader technology industry.

Given the torrid pace of the first half, what can we expect in the remainder of 2025? If current trends hold, we are in for an exciting (and possibly unpredictable) ride. Here are several developments on the horizon in the next six months:

The first half of 2025 has been a whirlwind for artificial intelligence, a period of dizzying advancements in model capabilities, agent architectures, real-world integration, and supportive infrastructure. AI is moving faster than ever, not just in one locale or through one company, but across the globe and touching every industry. The “half-time report” on 2025 shows AI at a tipping point: no longer an experimental technology, but a transformative force being woven into how we work, learn, and live. If these six months are any indicator, the second half of 2025 will bring even more remarkable AI stories. Whether it’s new record-breaking AIs, widespread autonomous agent deployments, or societies grappling with AI’s implications, one thing is certain – the world of AI will not stand still. Stay tuned, because the only constant in this field is rapid change, and the narrative of AI’s evolution is being written in real-time. Here at Digital Bricks, we’ll continue to keep you updated as we build towards an AI-enabled future.