What is the New EU AI Office?

On Sunday, June 16, 2024, the European Union (EU) officially opened its highly anticipated AI Office in Brussels. This office represents a significant milestone in the EU's efforts to regulate artificial intelligence and ensure its ethical and safe deployment across member states and beyond. The establishment of the EU AI Office aligns with the legislative framework provided by the AI Act, which aims to create a robust regulatory environment for AI technologies, balancing innovation with public safety and fundamental rights.

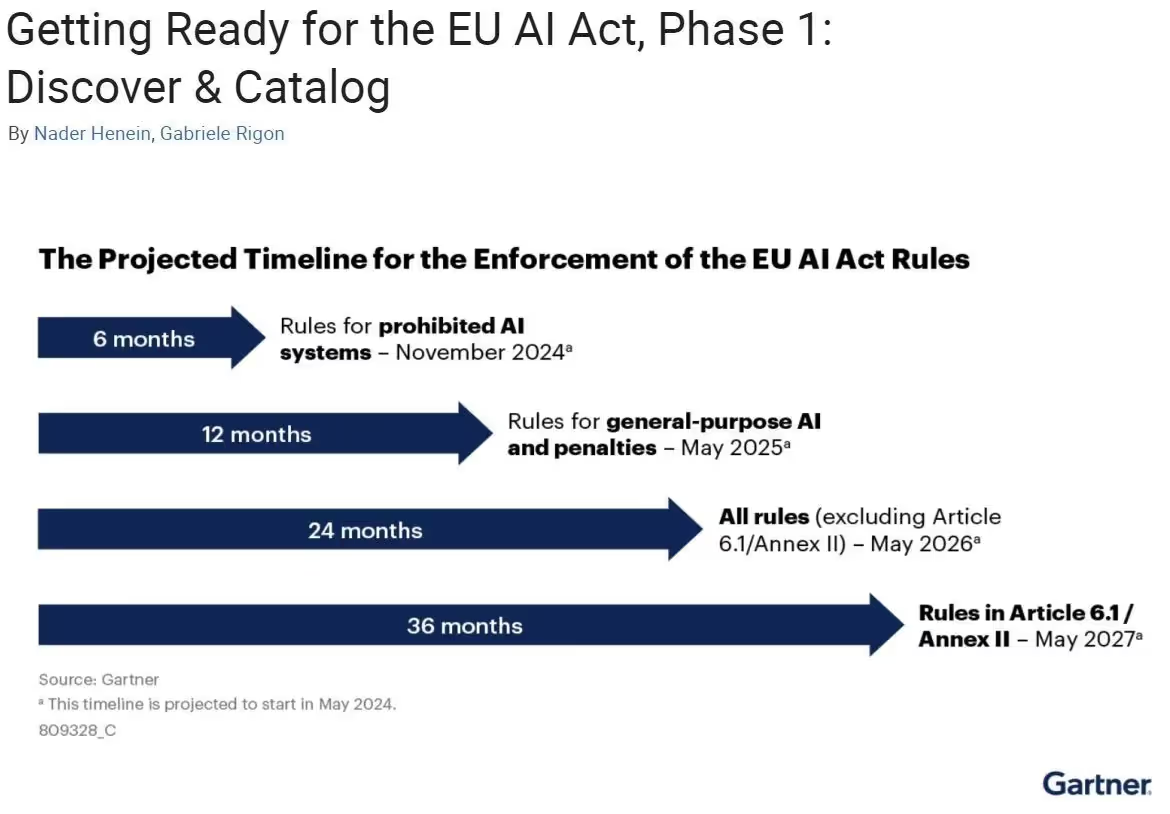

The primary function of the EU AI Office is to oversee the implementation and enforcement of the AI Act, which was adopted by the European Parliament in March 2024 and approved by the Council in May 2024. The office is tasked with several critical responsibilities, including monitoring compliance, issuing guidelines, and coordinating with national regulatory authorities. With a planned staff of about 140, the AI Office will scrutinize AI systems and check that they adhere to the stringent requirements set forth by the AI Act.

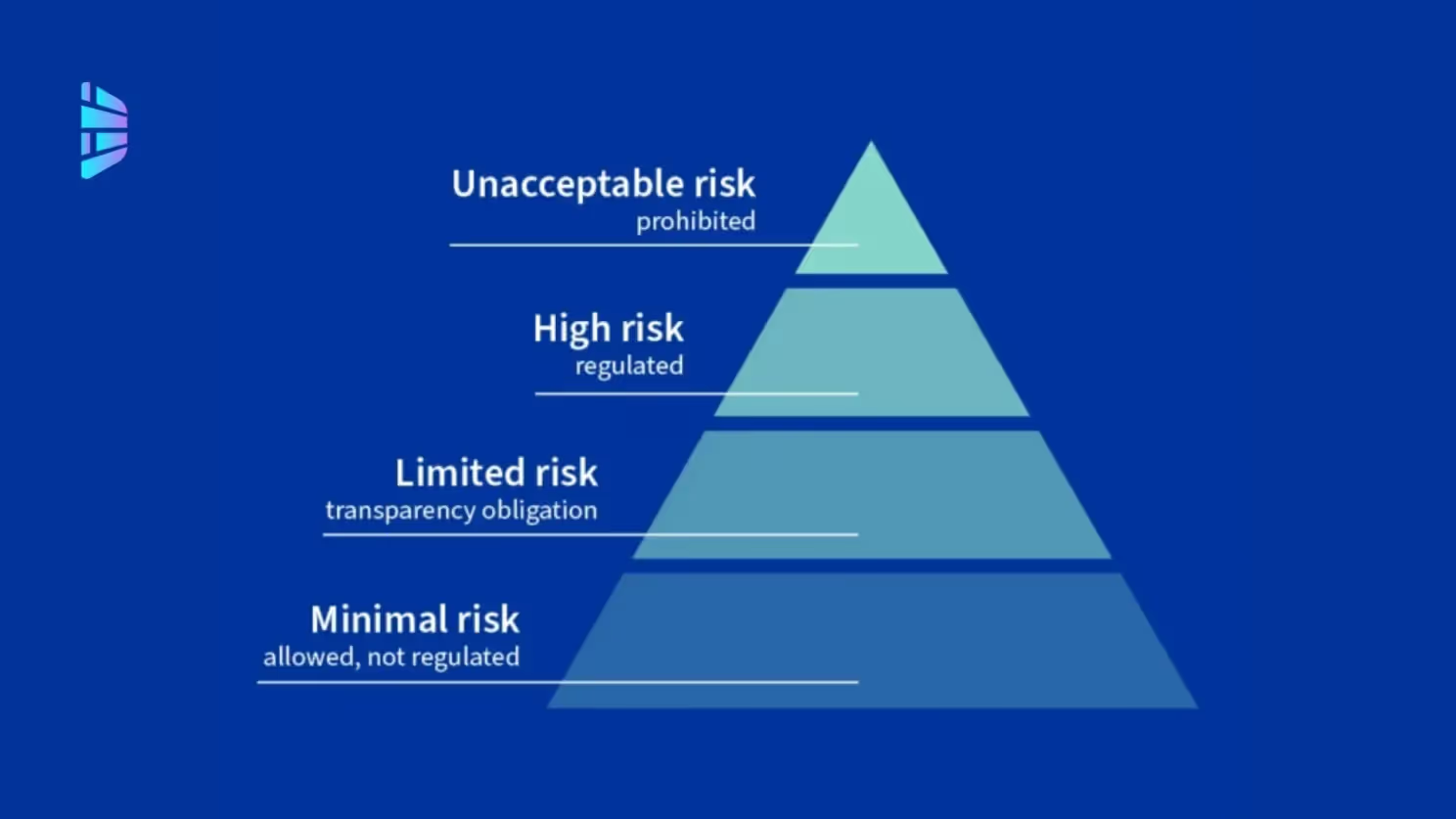

The AI Act adopts a risk-based approach to regulation, categorizing AI systems into four risk levels: minimal, limited, high, and unacceptable risk. This approach ensures that regulatory efforts are proportional to the potential impact of AI systems on individuals and society. High-risk AI systems, such as those used in critical infrastructure, medical devices, and employment decision-making, will face the most stringent requirements. These include rigorous testing, transparency obligations, and human oversight mechanisms.
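As a rough illustration of the tiering described above, the four levels and the high-risk examples can be written down as a small lookup. This is a minimal sketch, not a legal classification tool: the names `RiskLevel`, `HIGH_RISK_EXAMPLES`, `classify_example`, and `obligations_for` are hypothetical, the use-case labels are invented for this example, and only the high-risk obligations summarized in this article are encoded.

```python
from enum import Enum
from typing import List, Optional


class RiskLevel(Enum):
    """The four risk levels named in the AI Act's risk-based approach."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Hypothetical labels for the high-risk examples mentioned in the article.
# A real assessment depends on the Act's text, not on a keyword lookup.
HIGH_RISK_EXAMPLES = {
    "critical_infrastructure",
    "medical_device",
    "employment_decision",
}

# Obligations for high-risk systems as summarized in the article.
HIGH_RISK_OBLIGATIONS = ["rigorous testing", "transparency", "human oversight"]


def classify_example(use_case: str) -> Optional[RiskLevel]:
    """Return HIGH for the article's example use cases, otherwise None.

    None means "not covered by this sketch", not "minimal risk".
    """
    if use_case in HIGH_RISK_EXAMPLES:
        return RiskLevel.HIGH
    return None


def obligations_for(level: RiskLevel) -> List[str]:
    """Return the obligations this article lists for a given risk level."""
    if level is RiskLevel.HIGH:
        return list(HIGH_RISK_OBLIGATIONS)
    # The article does not detail obligations for the other tiers,
    # so the sketch returns an empty list rather than guessing.
    return []


if __name__ == "__main__":
    level = classify_example("medical_device")
    if level is not None:
        print(level.value, obligations_for(level))
```

The lookup deliberately returns `None` for anything outside the article's examples, since assigning a tier to an arbitrary system requires reading the Act itself.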

The AI Act’s regulatory scope extends beyond the EU's borders, applying to any entity that provides or deploys AI systems within the EU market, regardless of where it is established. This extraterritorial effect is intended to ensure that AI systems affecting people in the EU comply with the Act's standards, and it may encourage a global baseline for AI safety and ethics.

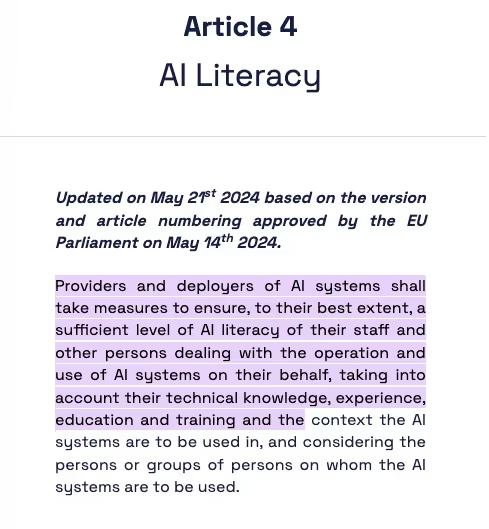

One of the often-overlooked aspects of the AI Act is Article 4, which requires providers and deployers of AI systems to take measures to ensure that their staff, and others operating AI systems on their behalf, have a sufficient level of AI literacy. This provision underscores the importance of proper training and education in the safe and effective operation of AI systems. Organizations must take into account the technical knowledge, experience, education, and training of these people, tailoring training programs to the specific context in which AI systems are used. This requirement highlights the EU’s commitment to not only regulating technology but also empowering individuals to manage and interact with AI responsibly.

The emphasis on training and compliance in the AI Act draws parallels with the General Data Protection Regulation (GDPR). Since it became applicable in May 2018, the GDPR has significantly changed how organizations handle personal data, with substantial fines for non-compliance. Notably, in 2019 the UK Information Commissioner's Office announced its intention to fine British Airways £183 million for failing to protect customer data. The final penalty, issued in 2020, was reduced to £20 million, but the case still highlights the serious repercussions of inadequate compliance measures.

Similarly, the AI Act requires organizations to establish robust training programs and compliance protocols. The EU AI Office will play a pivotal role in auditing and ensuring adherence to these requirements. The office's rigorous oversight is expected to lead to a high level of accountability, encouraging companies to prioritize AI literacy and ethical practices.

The inauguration of the EU AI Office marks a critical step in the EU's journey to regulate AI effectively. By enforcing the AI Act, the office aims to create a safe and innovative AI landscape that respects fundamental rights and promotes public trust. With its comprehensive regulatory framework and emphasis on training and compliance, the AI Office is set to become a cornerstone of AI governance, ensuring that AI technologies benefit society while minimizing potential risks. As the AI landscape evolves, the EU's proactive approach serves as a model for other regions seeking to balance technological advancement with ethical considerations.