What Are Deepfakes? Uses, Risks, and Ethics


Most of us have already encountered deepfakes, whether through viral internet clips or surprisingly realistic, altered videos circulating on social media. Like it or not, deepfakes are now a fixture in our digital world.

Deepfakes are AI-generated or AI-modified media, including video, audio, and images, designed to convincingly replicate real people or events. As artificial intelligence continues to evolve, the gap between reality and fabrication narrows, positioning deepfakes as both an innovative tool and a growing threat to truth, trust, and privacy.

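In this article, we’ll explore what deepfakes are, how they work, where they are used, the ethical and social challenges they raise, and how to detect them.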

Deepfakes are synthetic media created using deep learning models to simulate highly realistic representations of people, environments, or actions. Although best known for face swaps and voice cloning, the technology extends to altering objects, landscapes, animals, or entire scenes, blurring the boundaries between authentic and artificial content.

The roots of deepfakes lie in key academic breakthroughs. In 2014, Ian Goodfellow and his team introduced Generative Adversarial Networks (GANs), which formed the foundation for creating synthetic media—a concept we’ll explore further in the next section.

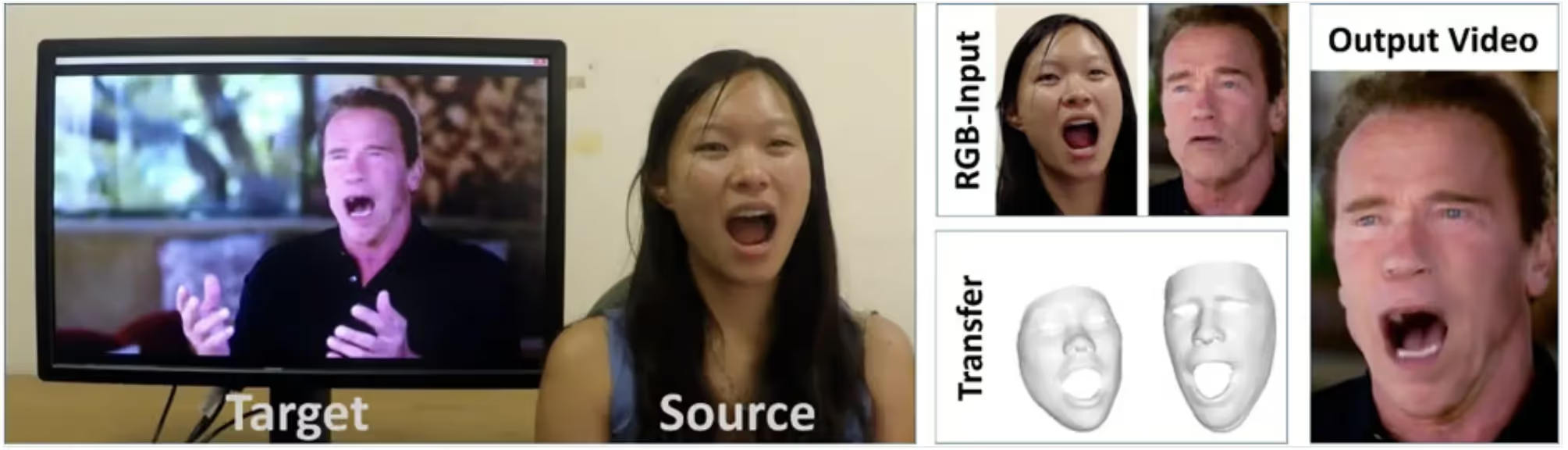

Another turning point came with the 2016 Face2Face project by Justus Thies and colleagues, which demonstrated real-time facial expression capture and transfer. One striking example shows a user's expressions projected onto actor and politician Arnold Schwarzenegger.

Public awareness surged in late 2017 when manipulated videos, often involving celebrity face swaps in explicit content, began spreading on Reddit. This triggered platform-wide bans as concerns around misuse escalated.

A well-known example surfaced in 2018, when BuzzFeed and Jordan Peele released a viral deepfake of President Barack Obama. The video was designed to illustrate how convincingly deepfakes could distort public messaging.

In 2020, South Park creators Trey Parker and Matt Stone took the concept further with Sassy Justice, a satirical series featuring deepfaked portrayals of Donald Trump, Mark Zuckerberg, and other public figures.

When we talk about deepfakes, we usually focus on the final output—but what’s actually going on behind the scenes? Let’s break it down in a way that’s accessible, even if you don’t have a technical background.

Discriminative vs. Generative Models

Discriminative models are designed to tell things apart: for example, deciding whether an image is authentic or manipulated. These models analyze data and assign labels such as “real” or “deepfake.” Examples include logistic regression, decision trees, support vector machines, and neural network classifiers. Because they specialize in classification, discriminative models are well-suited to detecting deepfakes and spotting subtle signs of manipulation.

Generative models, on the other hand, are designed to learn the underlying patterns of real data—like facial features or speech patterns—and use that understanding to create entirely new, realistic-looking media. This is the foundation of how deepfakes are made.

The key distinction is in their function: discriminative models detect, while generative models create. Both play essential roles in deepfake development and detection: one builds believable fakes, and the other works to expose them.
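To make the distinction concrete, here is a minimal sketch in plain Python. It assumes each image has already been reduced to a single hypothetical "artifact score" between 0 and 1; the scores, labels, and function names are illustrative assumptions, not part of any real detector. The discriminative model learns a boundary between the two classes, while the generative model learns what real data looks like and samples new values from it.

```python
import math
import random
import statistics


def train_discriminative(scores, labels, lr=1.0, steps=2000):
    """Fit a 1-D logistic regression: P(fake | score) = sigmoid(w * score + b)."""
    w, b = 0.0, 0.0
    n = len(scores)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in zip(scores, labels):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            grad_w += (p - y) * x
            grad_b += p - y
        # Full-batch gradient descent on the average log loss
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def predict_fake_probability(model, score):
    """Discriminative use: assign a probability of 'deepfake' to one input."""
    w, b = model
    return 1.0 / (1.0 + math.exp(-(w * score + b)))


def fit_generative(real_scores):
    """Generative use, step 1: model the real data as a Gaussian."""
    return statistics.mean(real_scores), statistics.stdev(real_scores)


def generate(model, n, seed=0):
    """Generative use, step 2: sample brand-new values from the learned model."""
    mean, std = model
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical training data: label 0 = real, 1 = deepfake
scores = [0.0, 0.1, 0.2, 0.8, 0.9, 1.0]
labels = [0, 0, 0, 1, 1, 1]

detector = train_discriminative(scores, labels)
generator = fit_generative(scores[:3])   # learn only from the real examples
new_samples = generate(generator, 1000)  # synthetic values that resemble real ones
```

Real systems work on millions of pixels rather than one number, but the division of labor is the same: `predict_fake_probability` labels an input, and `generate` produces new data.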


Generative Adversarial Networks (GANs)

GANs are a form of generative AI in which a generative model and a discriminative model are trained together in what’s often described as a competitive dynamic. The generator produces synthetic content, while the discriminator evaluates whether that content is real or fake. Over time, this “adversarial” relationship pushes the generator to produce outputs that are increasingly hard to distinguish from the real thing.

In the context of deepfakes, think of the generator as an art forger and the discriminator as an art critic. The forger gets better at creating realistic replicas, while the critic sharpens their eye to catch imperfections. With continued exposure to data, both improve, resulting in more convincing deepfakes and sharper detection models.
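The forger-and-critic dynamic can be written down as two loss functions. The sketch below uses the standard binary cross-entropy formulation and the common "non-saturating" generator loss; the function names and sample probabilities are illustrative assumptions, and a real GAN would compute these values from neural network outputs rather than hand-written lists.

```python
import math

EPS = 1e-12  # avoids log(0) when a probability is exactly 0 or 1


def discriminator_loss(d_real, d_fake):
    """Loss the critic minimizes.

    d_real: the critic's 'this is real' probabilities for genuine samples.
    d_fake: the critic's 'this is real' probabilities for generated samples.
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Loss the forger minimizes: low when the critic believes the fakes are real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A critic that can only guess (0.5 everywhere) versus one that separates well
undecided_critic = discriminator_loss([0.5, 0.5], [0.5, 0.5])
sharp_critic = discriminator_loss([0.9, 0.9], [0.1, 0.1])

# A forger whose fakes are caught versus one whose fakes pass as real
caught_forger = generator_loss([0.1, 0.1])
convincing_forger = generator_loss([0.9, 0.9])
```

Training alternates between the two: one step lowers `discriminator_loss`, and the next lowers `generator_loss`. When the critic can no longer do better than guessing, its loss settles at 2 ln 2, which is the value `undecided_critic` holds here.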

Creating a deepfake involves training models on large datasets that include a target’s specific traits (like tone, cadence, and facial expressions). The AI system uses this data to build a nuanced representation that allows it to mimic the subject in a convincing way.

Common Deepfake Techniques

Face-swapping is one of the most recognized techniques and often uses autoencoders and convolutional neural networks. These models are trained on large volumes of images or video frames to learn facial structure, movement, and distinguishing features. The encoder half of the model converts this information into a compressed numerical representation (often called a latent representation) that the rest of the model can interpret and manipulate.

Once the model understands the source and target faces, it maps expressions from one person onto another, producing realistic face-swaps.
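One widely used design shares a single encoder between both people and trains a separate decoder for each of them. The sketch below shows only that data flow: the encoder and decoders are hand-written stand-ins for learned neural networks, and the `Face` type, its fields, and the example values are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Face:
    identity: str       # who the face belongs to
    expression: tuple   # e.g. (mouth_open, brow_raise), each between 0 and 1


def shared_encoder(face):
    """Stand-in for the learned encoder: keep the expression, drop the identity."""
    return face.expression


def make_decoder(identity):
    """Stand-in for a per-person decoder: it always renders its own identity."""
    def decode(latent):
        return Face(identity=identity, expression=latent)
    return decode


decoder_a = make_decoder("person_a")
decoder_b = make_decoder("person_b")

source = Face("person_a", (0.8, 0.3))

# During training, each decoder learns to reconstruct its own person
reconstruction = decoder_a(shared_encoder(source))

# The swap: encode person A, but decode with person B's decoder
swapped = decoder_b(shared_encoder(source))
```

Because the encoder is shared, it is pushed toward features that both faces have in common, such as pose and expression, while each decoder fills in the appearance of one specific person.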


For lip-syncing, neural networks analyze both audio inputs and visual mouth movements. By aligning phonemes with video frames, the model learns to generate video where the spoken words and lip motions match naturally.

Training for this involves labeled datasets, where each frame of video is matched with the specific audio segment being spoken. Through this pairing, the AI learns the intricate relationship between sound and mouth movement, allowing it to synchronize them accurately.
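The frame-to-audio pairing described above is mostly index arithmetic. Here is a minimal sketch, assuming 25 frames per second and 16 kHz audio; both values and the function names are assumptions chosen for illustration. Real lip-sync pipelines typically feed the model a longer audio window around each frame, often as a spectrogram, rather than raw samples.

```python
def audio_window_for_frame(frame_index, fps=25, sample_rate=16000):
    """Return the [start, end) range of audio samples spoken during one video frame."""
    start = round(frame_index * sample_rate / fps)
    end = round((frame_index + 1) * sample_rate / fps)
    return start, end


def pair_frames_with_audio(num_frames, audio, fps=25, sample_rate=16000):
    """Build (frame_index, audio_segment) training pairs for a clip."""
    pairs = []
    for i in range(num_frames):
        start, end = audio_window_for_frame(i, fps, sample_rate)
        pairs.append((i, audio[start:end]))
    return pairs


# One second of placeholder audio (sample indices stand in for amplitudes)
one_second = list(range(16000))
pairs = pair_frames_with_audio(25, one_second)
```

With these settings each frame is paired with 640 audio samples, so frame 0 covers samples 0 to 639, frame 1 covers 640 to 1279, and so on.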

Voice cloning follows a similar pattern. Speech synthesis models are trained on extensive audio datasets with paired transcripts. These help the model capture the unique voice traits of an individual—pitch, rhythm, tone, and more. By learning this structure, the AI can generate speech that closely resembles the target’s real voice, often to a remarkably realistic degree.

Most of us have seen synthetic videos of celebrities in unexpected or humorous scenarios, but deepfake technology goes far beyond internet entertainment. It’s being explored across industries, from education and accessibility to gaming, film, and interactive media.

Entertainment

Deepfakes are opening new creative possibilities for filmmakers, allowing them to de-age actors, recreate historical figures, or seamlessly localize content through AI-driven dubbing that mirrors the original performances.

One high-profile example is Martin Scorsese’s The Irishman (2019), where Robert De Niro, Al Pacino, and Joe Pesci were digitally altered to appear decades younger for flashback scenes. This allowed the story to maintain continuity without casting younger actors. Watch the behind-the-scenes breakdown for insight into how it was done.

In gaming and virtual reality, deepfakes enable the creation of responsive digital characters that closely resemble real people. Tools like Epic Games’ MetaHuman Creator empower developers to build lifelike avatars for immersive environments, enhancing user engagement and realism.

Satirical and comedic content is also being reimagined. Where impersonations once relied on acting talent alone, deepfake technology now allows creators like Kyle Dunnigan and Snicklink to mimic public figures with remarkable precision, raising the bar for digital satire.

Education and Accessibility

Deepfakes are increasingly being used to reintroduce historical figures into modern learning experiences. BBC Maestro, for example, launched a writing course featuring a digitally recreated Agatha Christie—using AI-generated visuals and speech based on her writings and recordings. This approach allows learners to engage with legacy voices in dynamic, contemporary formats.

The technology also shows promise in enhancing accessibility. For individuals with speech impairments, deepfake-driven voice synthesis can preserve and recreate natural-sounding speech. Project Revoice is one example, where those affected by Motor Neurone Disease can regain their voice through AI, using previously recorded samples to synthesize speech with emotional accuracy.

Ethical and Social Challenges of Deepfakes

No exploration of deepfakes is complete without addressing their darker implications. As the technology matures, several key ethical and societal concerns are emerging:

Legal responses are still catching up. While some regions have introduced regulations to curb the misuse of deepfake technology, enforcement remains challenging. The absence of a comprehensive global framework leaves many questions unanswered—particularly around balancing freedom of expression with the need for accountability and protection.

As AI-generated media becomes more sophisticated, the ability to distinguish real from fake has never been more critical. Whether you're a casual social media user or part of an enterprise security team, deepfake detection is becoming an essential skill. Here’s a closer look at how both humans and machines are tackling this challenge.

Human Observation

While detection is becoming more difficult by the day, there are still visual clues that can give deepfakes away, especially to trained eyes. Many of us have seen AI-generated images where subjects have too many fingers or unnatural hand positions. But most giveaways are far subtler.

In static images, one common red flag is inconsistent pixelation or blurring along the edges of faces or objects. This often signals that the model failed to distinguish where the subject ends and the background begins, leaving behind seams or visual artifacts.

Lighting discrepancies are another giveaway. Shadows that fall in unnatural directions, or inconsistent lighting across elements in the scene, can disrupt the visual logic of an image and indicate manipulation.


In video content, these issues can become more pronounced. Shifts in lighting direction between frames, or mismatched reflections, often create an eerie visual inconsistency. You might also notice unnatural blinking patterns, rigid facial movements, or expressions that don’t quite match the surrounding context—signs that the subject has been artificially animated. Another common tell is flickering around hairlines or facial outlines, where the synthetic mask struggles to stay aligned with the moving subject.
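Some of these cues can be turned into simple automated checks. As a rough sketch of the blinking cue, the code below assumes a hypothetical upstream step has already produced one "eye openness" value per frame (1.0 fully open, 0.0 closed). The closed-eye threshold and the plausible blink-rate range are illustrative assumptions, not validated cut-offs, and a check like this is at best one weak signal among many.

```python
def count_blinks(eye_openness, closed_threshold=0.2):
    """Count open-to-closed transitions in a per-frame eye-openness series."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_threshold
        if is_closed and not was_closed:
            blinks += 1
        was_closed = is_closed
    return blinks


def blinks_per_minute(eye_openness, fps=30, closed_threshold=0.2):
    """Convert a blink count into a rate, given the clip's frame rate."""
    if not eye_openness:
        raise ValueError("eye_openness must contain at least one frame")
    duration_minutes = len(eye_openness) / fps / 60
    return count_blinks(eye_openness, closed_threshold) / duration_minutes


def looks_suspicious(eye_openness, fps=30, low=5.0, high=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    rate = blinks_per_minute(eye_openness, fps)
    return rate < low or rate > high


# A 10-second clip at 30 fps with two short blinks
clip = [1.0] * 100 + [0.05] * 3 + [1.0] * 97 + [0.05] * 3 + [1.0] * 97
# A 10-second clip in which the subject never blinks
staring_clip = [1.0] * 300
```

Here `clip` works out to roughly 12 blinks per minute and passes, while `staring_clip` has a rate of zero and is flagged.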

Technical Methods

While human analysis can catch surface-level inconsistencies, today’s most effective deepfake detection methods rely on AI and digital forensics. A growing number of tools specialize in analyzing patterns that are invisible to the naked eye, from biological signals to digital fingerprints.

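Widely used tools for deepfake detection include Google SynthID, Truepic, DuckDuckGoose, Intel’s FakeCatcher, Reality Defender, Sensity AI, and Deepware Scanner.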

Each has a distinct approach based on its intended use case. Google SynthID and Truepic are designed as preventive technologies (marking or verifying content at the point of creation). SynthID embeds imperceptible watermarks into AI-generated images, helping identify them after the fact. Truepic uses cryptographic hashing and blockchain verification to authenticate images at the moment they’re captured, ensuring their integrity from origin to distribution.
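The idea behind hashing at the point of capture can be illustrated with Python's standard library. This is a generic sketch of content fingerprinting, not Truepic's actual API or pipeline; the function names and placeholder bytes are assumptions, and a production system would also sign the fingerprint and store it somewhere tamper-resistant.

```python
import hashlib
import hmac


def fingerprint(image_bytes):
    """Compute a SHA-256 digest of the raw image bytes at capture time."""
    return hashlib.sha256(image_bytes).hexdigest()


def is_unmodified(image_bytes, recorded_fingerprint):
    """Check a later copy of the image against the fingerprint recorded at capture."""
    return hmac.compare_digest(fingerprint(image_bytes), recorded_fingerprint)


captured = b"raw-image-bytes-placeholder"
recorded = fingerprint(captured)      # stored when the photo is taken

tampered = captured + b"\x00"         # even a one-byte change alters the digest
original_ok = is_unmodified(captured, recorded)
tampered_ok = is_unmodified(tampered, recorded)
```

Any edit to the file, however small, produces a different digest, which is why a fingerprint recorded at capture can later show that an image has been altered.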

Other tools are designed for post-analysis. DuckDuckGoose specializes in analyzing facial inconsistencies such as asymmetry, blur patterns, or unnatural movements. Intel’s FakeCatcher takes a biometric approach, using subtle physiological signals (like blood flow in the face) to determine if a video is authentic.

Enterprise-grade tools like Reality Defender and Sensity AI offer scalable solutions for organizations looking to integrate deepfake detection into security operations, news verification, or content moderation workflows. In contrast, Deepware Scanner is a lightweight, user-friendly tool aimed at everyday consumers, providing quick scans for suspicious content.

A Digital Bricks Conclusion

Deepfakes are a double-edged sword, offering exciting possibilities in creative storytelling, education, and accessibility, while simultaneously posing real threats to privacy, trust, and public discourse. As the technology continues to evolve, so too will its implications.

Looking ahead, deepfakes will likely become more immersive and harder to distinguish from authentic media, especially with the rise of virtual and augmented reality. Detection tools must keep pace, growing more sophisticated to counter increasingly advanced manipulation techniques.

At Digital Bricks, we believe that understanding is the first step toward responsible AI use. That’s why we offer accessible, expert-led e-learning modules that explore the technical, ethical, and societal dimensions of deepfakes, empowering individuals and teams to engage with the topic critically and confidently.

Ready to learn more?

Explore our AI literacy programs and join the conversation about the future of AI on LinkedIn.