Understanding AI Bias and Fairness


Fairness is the idea that all human beings are of equal moral status and should be free from discriminatory harm. The goal of fairness in AI is to design, develop, deploy, and use AI Systems in ways that do not lead to discriminatory harm.

Discrimination risks are neither new nor unique to AI and, as with other technologies, it is not possible to achieve zero risk of bias in an AI System. However, at Digital Bricks, we strive to ensure AI Systems are deployed responsibly to minimize discriminatory outcomes wherever possible.

Bias is typically divided into two kinds: conscious (known) and unconscious (unknown). Either kind can be introduced at many points in the AI System lifecycle, including the data the system learns from, the people who build it, and the context in which it operates.

Data serves as the bedrock of AI models, and any biases present in the data can significantly influence the outcomes of these models. Historical bias is a prevalent issue where AI systems trained on historical data inherit existing societal biases. For example, if historical hiring data reflects gender or racial biases, an AI hiring tool could perpetuate these biases by favoring certain demographics over others.

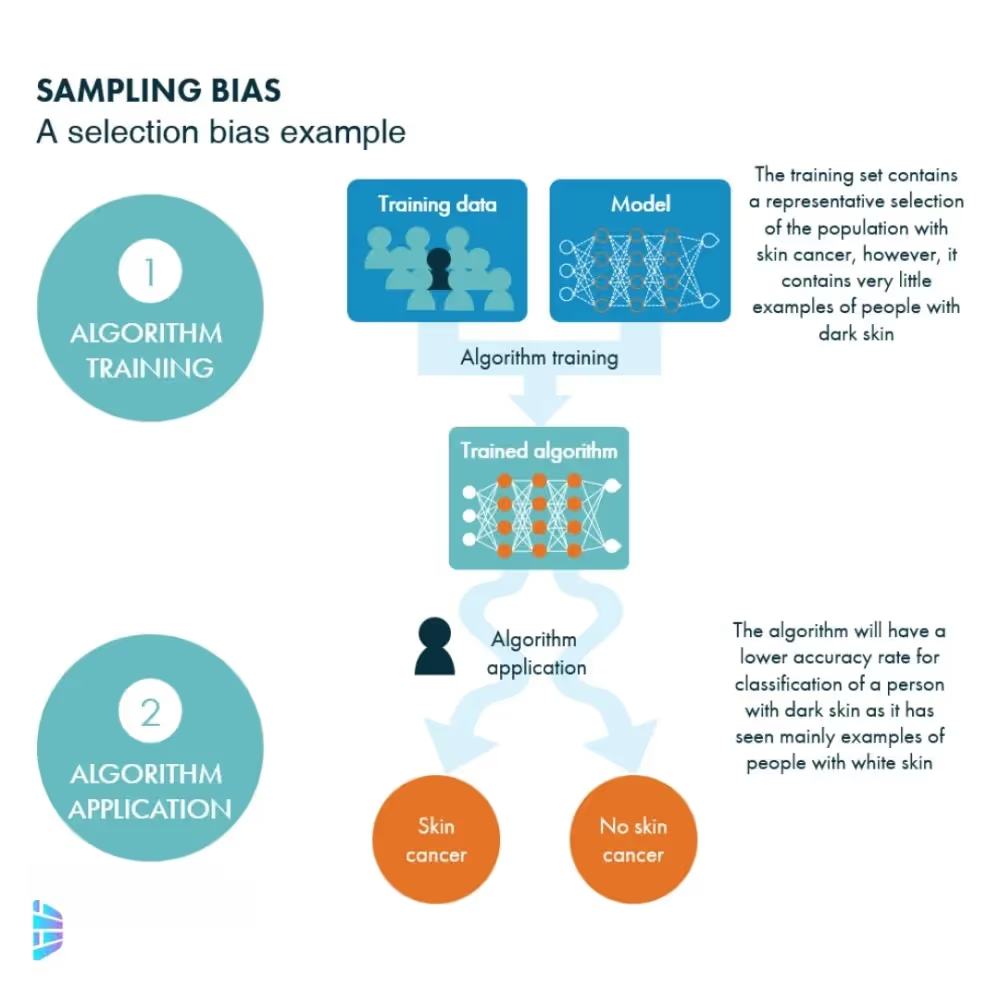

Sampling bias arises when the training data does not accurately represent the entire population. This lack of representation can cause AI systems to perform poorly for underrepresented groups. An example of this is facial recognition technology that has been predominantly trained on lighter-skinned faces, resulting in lower accuracy for darker-skinned individuals.
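One way to surface this kind of gap is to break evaluation results down by group rather than reporting a single overall score. The sketch below is illustrative only: the function name `accuracy_by_group`, the group labels, and the toy data are assumptions made for the example, not part of any particular toolkit.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: (sample_count, accuracy)} for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: (total[g], correct[g] / total[g]) for g in total}


# Hypothetical evaluation set: group "A" is heavily over-represented.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
groups = ["A"] * 8 + ["B"] * 2

report = accuracy_by_group(y_true, y_pred, groups)
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

print(f"overall accuracy: {overall:.2f}")
for group, (count, acc) in sorted(report.items()):
    print(f"group {group}: n={count}, accuracy={acc:.2f}")
```

In this toy data the overall accuracy looks acceptable even though every prediction for the smaller group is wrong, which is why a per-group breakdown, together with the sample count for each group, is worth reporting alongside the headline number.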

Measurement bias occurs when data collection methods lead to inaccuracies that disproportionately affect certain groups. For instance, medical data collected more frequently or more accurately for one demographic than for another can skew the AI's predictions and treatment recommendations. Labeling bias, another critical factor, happens when human annotators' subjective judgments influence the data. It is introduced during the data labeling process, where personal prejudices or a lack of diversity among annotators can affect the training data.

Human biases can infiltrate AI systems through various stages of development and deployment. During algorithm design, developers make choices that can inadvertently introduce bias. For example, prioritizing certain performance metrics over others can lead to unequal representation and outcomes. Implicit bias is another concern, where developers' unconscious biases influence the AI through decisions made during data selection, feature engineering, or model evaluation.

Operational bias emerges from the context in which an AI system is deployed. An AI tool might perform inconsistently across different geographic regions if not properly adapted to local contexts. Furthermore, feedback loops can perpetuate and amplify existing biases. For example, a biased predictive policing tool can lead to over-policing in certain areas, creating a self-reinforcing cycle where biased outcomes lead to more biased data, further skewing the AI's predictions.
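The feedback loop described above can be illustrated with a deliberately simplified simulation. Everything here is an assumption made for the sketch: two areas with the same underlying incident rate, a hypothetical "hot spot" policy that sends 70% of patrols to whichever area has more recorded incidents, and recorded incidents that grow in proportion to patrol presence. It is not a model of any real policing system.

```python
def simulate_feedback_loop(initial_records, true_rate=0.1, patrols=100,
                           hot_share=0.7, rounds=10):
    """Return, for each round, every area's share of all recorded incidents.

    All areas have the same true incident rate; only the patrol allocation
    differs, and it depends on the records accumulated so far.
    """
    records = dict(initial_records)
    history = []
    for _ in range(rounds):
        # The area with the most recorded incidents is treated as the hot spot.
        hot = max(records, key=records.get)
        for area in records:
            if area == hot:
                share = hot_share
            else:
                share = (1 - hot_share) / (len(records) - 1)
            # More patrols record more incidents, even at an identical true rate.
            records[area] += patrols * share * true_rate
        total = sum(records.values())
        history.append({area: count / total for area, count in records.items()})
    return history


# Hypothetical starting point: area "A" has one more recorded incident than "B".
history = simulate_feedback_loop({"A": 11, "B": 10})
for round_number, shares in enumerate(history, start=1):
    print(f"round {round_number}: A={shares['A']:.2f}, B={shares['B']:.2f}")
```

Although both areas are identical by construction, the small initial difference in records keeps attracting patrols to area A, and its share of recorded incidents rises round after round. The data appears to confirm the original allocation, which is the self-reinforcing cycle in miniature.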

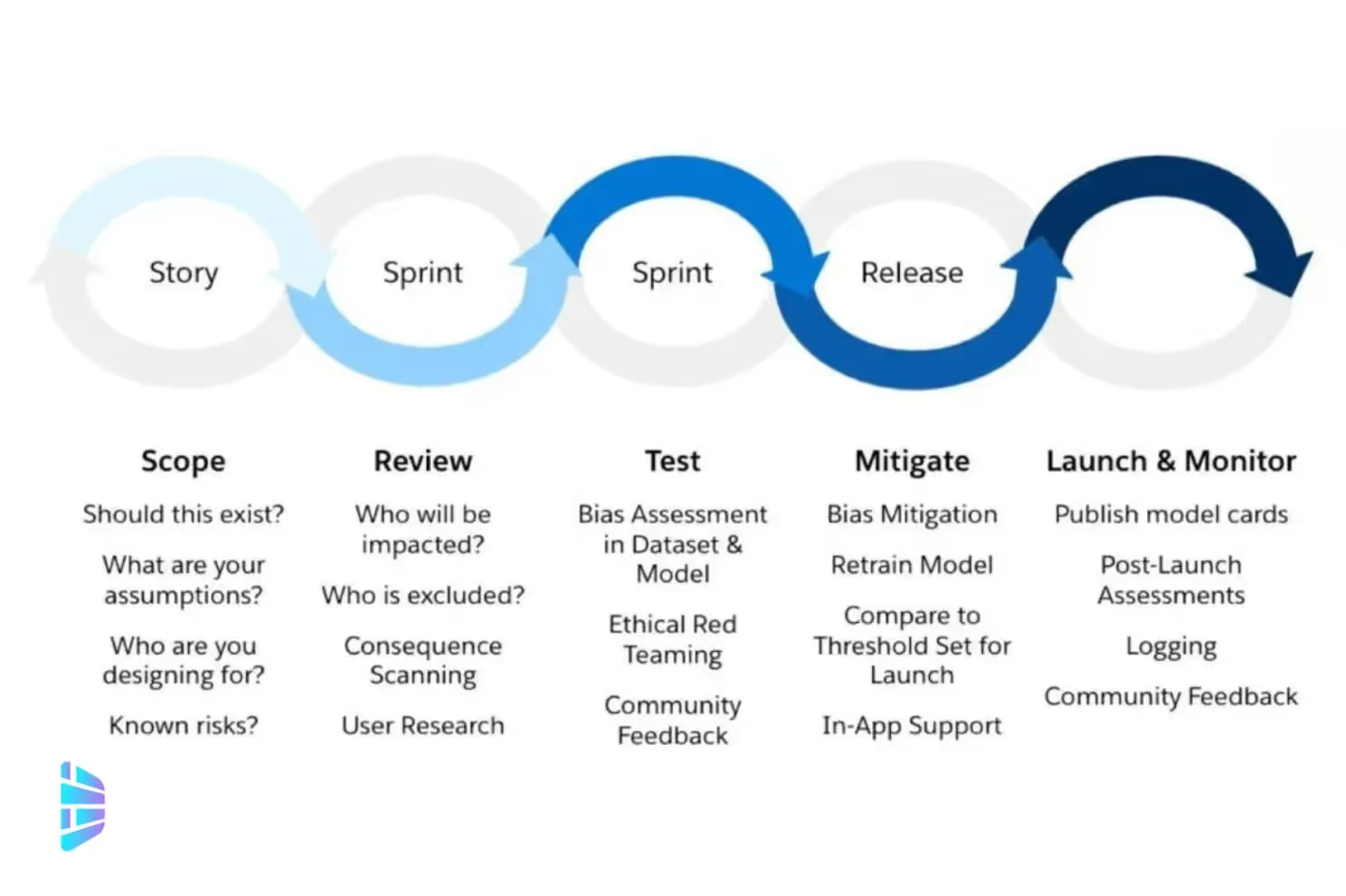

Addressing AI bias requires a multi-faceted approach. Ensuring that training data is diverse and representative is crucial. This includes continuously updating datasets to reflect current realities and avoiding the use of outdated or incomplete data. Techniques for detecting and reducing bias during model training and evaluation are essential. Fairness-aware machine learning algorithms can help identify and mitigate biases in AI models.
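As a concrete example of bias detection during evaluation, the sketch below computes two widely used group fairness measures from a model's binary decisions: the demographic parity difference (the gap in positive-decision rates between groups) and the disparate impact ratio (the lowest rate divided by the highest). The function names, group labels, and data are hypothetical and chosen for illustration; dedicated fairness libraries offer more complete implementations.

```python
def selection_rates(predictions, groups):
    """Share of positive (1) predictions for each group."""
    counts, positives = {}, {}
    for pred, group in zip(predictions, groups):
        counts[group] = counts.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    return {g: positives[g] / counts[g] for g in counts}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates (0 is parity)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 is parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening decisions (1 = shortlisted) for two groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A"] * 5 + ["B"] * 5

rates = selection_rates(predictions, groups)
print("selection rates:", rates)
print(f"parity difference: {demographic_parity_difference(rates):.2f}")
print(f"disparate impact ratio: {disparate_impact_ratio(rates):.2f}")
```

A frequently cited rule of thumb treats a disparate impact ratio below 0.8 as a signal that warrants closer review. Neither measure proves or rules out discrimination on its own; both are starting points for investigation.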

Adopting ethical AI frameworks that prioritize transparency, accountability, and fairness ensures that AI systems are designed and deployed responsibly. These frameworks guide developers in making ethical decisions throughout the AI lifecycle. Continuous monitoring and evaluation are also necessary to maintain fairness over time. Regular audits and updates of AI systems help ensure they adapt to new data and contexts, maintaining their reliability and equity.
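A recurring audit can be as simple as recomputing a fairness measure on each new batch of decisions and flagging the batches that drift past an agreed limit. The following is a minimal sketch under stated assumptions: the `audit_batches` helper, the `max_gap` threshold of 0.2, and the weekly batches are all hypothetical, and a real audit would choose its metrics and thresholds with domain and legal input.

```python
def parity_gap(predictions, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + pred
    rates = [positives[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def audit_batches(batches, max_gap=0.2):
    """Return (batch_name, gap) for every batch whose gap exceeds max_gap."""
    flagged = []
    for name, predictions, groups in batches:
        gap = parity_gap(predictions, groups)
        if gap > max_gap:
            flagged.append((name, gap))
    return flagged


# Hypothetical weekly batches of model decisions (1 = positive outcome).
batches = [
    ("week 1", [1, 0, 1, 0], ["A", "A", "B", "B"]),
    ("week 2", [1, 1, 1, 0], ["A", "A", "B", "B"]),
    ("week 3", [1, 1, 0, 0], ["A", "A", "B", "B"]),
]

flagged = audit_batches(batches)
for name, gap in flagged:
    print(f"{name}: parity gap {gap:.2f} exceeds the limit, review needed")
```

Running the check on a schedule turns fairness from a one-time launch criterion into something that is tracked over the life of the system, so that drift in the data or the deployment context is noticed early.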

We are dedicated to implementing AI systems that prioritize fairness and mitigate bias. Our approach includes using diverse and high-quality datasets and adhering to ethical AI frameworks to ensure transparency and accountability. We provide comprehensive upskilling programs focused on creating internal AI champions who drive responsible AI initiatives within their organizations. These programs cover bias detection, ethical AI development, and best practices for continuous monitoring.

Our commitment to fair AI extends to our continuous monitoring and evaluation practices. We regularly audit AI systems to detect and rectify any emerging biases, ensuring ongoing fairness and accuracy. This proactive approach helps our clients leverage AI responsibly and effectively, fostering trust and innovation.

By focusing on these principles, Digital Bricks helps organizations overcome the challenges of AI bias and fairness. Our expertise ensures that AI systems are not only powerful but also just and equitable, contributing to a more inclusive and fair technological future.