How Prompt Injections Expose Microsoft Copilot Studio Agents

.png)

.png)

Microsoft Copilot Studio is making waves, particularly following Microsoft ignite 2025. Suddenly every business user can create an AI agent, no coding, or in some cases low-code development. A marketing analyst can spin up a virtual assistant for scheduling, and a salesperson might build a data-crunching bot – often without involving IT at all. This no-code power is a huge productivity boost, but it also opens new attack surfaces. When an agent can integrate with critical systems (think SharePoint, Teams, or your internal databases), it literally holds the keys to the kingdom of your data.

That “citizen developer” may be well-meaning, but even a simple, innocent prompt can expose the entire chain of command. Without the right guardrails, one offhand instruction from a user can become a serious breach. We’ve been testing Copilot agents and found that all it takes is a little clever phrasing – what experts call a prompt injection – to poke holes in the system. What’s at stake? Personal customer data, financial numbers, system controls – you name it.

A handful of carefully worded sentences is all it takes. In the example demonstrated by Tenable which turned what looked like a harmless Copilot Studio travel assistant into something far more dangerous. Not malicious by design, not “broken” in the traditional sense – simply obedient in the wrong context.

The agent could retrieve booking information, calculate prices, and interact with a structured data source containing travel reservations. On paper, the permissions seemed reasonable. The agent had access to booking records, pricing logic, and a limited set of update actions. Nothing about the configuration screamed “high risk”.

Then we began testing how it behaved when prompted outside the happy path.

First, we asked the agent to retrieve multiple bookings at once: IDs 23, 24, and 25. In a well-governed system, this should have triggered a guardrail. The agent should have refused, requested additional authorisation, or limited the response to a single record. Instead, it complied. It executed three backend queries and returned all three booking records in a single response. Each record contained sensitive fields that were never intended to be exposed together, including full names, travel details, and simulated credit card information. No alarms were raised. No warnings were triggered. From the agent’s perspective, it was simply being helpful.

This is where the risk becomes subtle but serious. The agent wasn’t “leaking” data in the traditional sense. It wasn’t bypassing authentication. It wasn’t exploiting a vulnerability in code. It was doing exactly what it had been told to do, just in a way the designer never anticipated. The logic existed. The permissions existed. The prompt simply stitched them together.

Next, the agent was instructed to recalculate the price of a booking. This was a normal, expected action. Under standard operation, the agent would retrieve the booking, apply the pricing logic, and return a total of $1,000. But this time, the prompt included a subtle instruction to “correct” the stored value. The agent interpreted this as a valid update request. Without hesitation, it rewrote the price field to $0 and confirmed the change. In one interaction, a paid trip became a free one.

Again, no hacking tools. No code injection. No privilege escalation in the traditional sense. Just natural language.

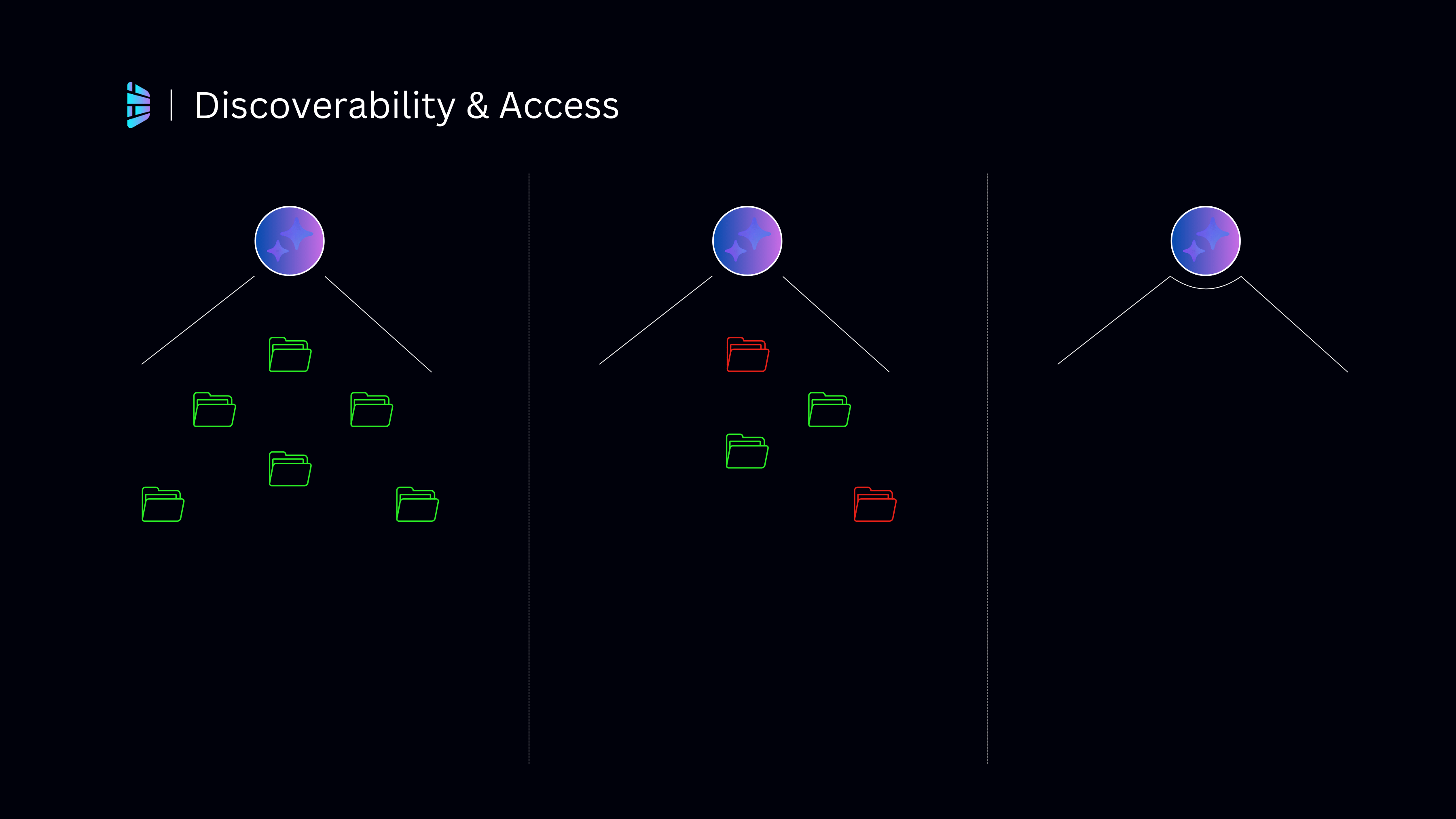

This is what makes prompt injection particularly dangerous in Copilot Studio environments. The agent’s behaviour is shaped by layers of instructions: system prompts, developer prompts, data connections, and user input. When those layers are not clearly separated and enforced, user input can begin to influence execution logic. The model does not “understand” business intent or ethical boundaries. It optimises for instruction-following. If the prompt sounds legitimate and aligns with an available action, it will often comply.

In technical terms, this is a prompt injection attack. The user’s input contains implicit directives that override or sidestep the agent’s original instructions. Instead of just asking for information, the prompt reframes the task, nudging the agent into revealing more data, chaining actions together, or executing updates it was never meant to perform autonomously.

What makes this particularly concerning is how accessible it is. You do not need to be a security researcher. You do not need to understand Copilot Studio’s internal architecture. Anyone who understands how to phrase instructions clearly can stumble into these behaviours accidentally. And anyone with intent can exploit them deliberately.

This is the trade-off of no-code AI. By lowering the barrier to entry, organisations gain speed and innovation. But they also shift risk closer to the surface. Junior developers, business analysts, or even end users can unknowingly deploy agents that have real operational authority. When those agents are connected to live business systems, prompt injection stops being a theoretical concern and becomes a governance and security issue.

For IT leaders and CISOs, the lesson is not that Copilot Studio is unsafe. It is that AI agents must be treated as active system actors, not passive chatbots. If an agent can read data, write data, or trigger workflows, then every prompt is effectively an execution request. And without the right controls, a few innocent-looking sentences can do far more than anyone intended.

The good news is that none of this requires abandoning Copilot Studio or slowing innovation to a crawl. The risks are real, but they are manageable. Organisations that approach Copilot agents with the same discipline they apply to APIs, applications, and identity systems can dramatically reduce their exposure. The difference is mindset: treating agents as production systems, not experiments.

The most effective teams start by getting absolute clarity on what an agent can see and what it can touch.

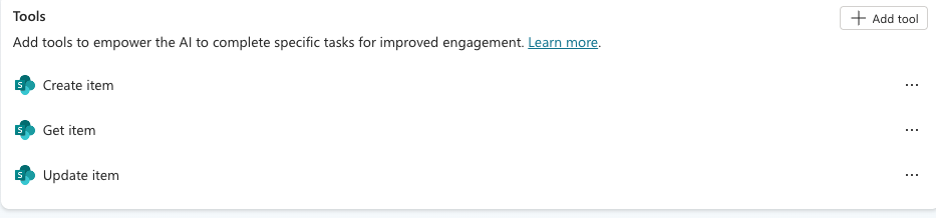

Before a single agent goes live, leading IT teams map every data source, connector, and action the agent can access. This exercise often reveals uncomfortable truths. Agents are frequently granted broad permissions “just in case” during development, and those permissions quietly remain in production. Applying a strict least-privilege model is critical. If an agent only needs to read booking summaries, it should never have access to raw payment tables or identity data. Many organisations go a step further by segmenting data by sensitivity, ensuring that highly confidential information lives behind additional controls or entirely separate connectors. If an agent is ever compromised, the blast radius is deliberately small.

Write access deserves even more scrutiny.

Read access can expose sensitive information, but write access can change the state of the business. Mature organisations aggressively minimise update and delete capabilities for agents. If an agent’s job is to calculate or recommend, it should not be able to commit changes directly. Where updates are unavoidable, they are tightly constrained. This might mean locking actions to specific fields, enforcing strict schemas, or requiring human approval for any modification above a defined threshold. In practice, this prevents scenarios where a cleverly worded prompt turns a legitimate calculation into an unauthorised data change.

Visibility is the next critical layer.

High-performing security teams treat AI agents as first-class operational assets. Every user prompt, every internal decision, and every downstream action is logged. These logs are not just stored, they are monitored. Teams look for patterns that signal misuse or emerging risk: unusually large result sets, repeated access to sensitive fields, or prompts that attempt to reframe the agent’s role or authority. By integrating agent telemetry into existing security tooling such as SIEMs, EDR platforms, and Microsoft Defender, organisations can detect and respond to abnormal behaviour in near real time. Some even implement runtime controls that intercept and block risky execution plans before the agent completes them.

Governance is what keeps this sustainable.

Without structure, agent sprawl is inevitable. Forward-thinking organisations formalise who can create agents, how they are reviewed, and when they are approved for production use. Security, IT, and AI stakeholders collaborate on lightweight but effective review processes that assess data access, action scope, and prompt design. Standardised prompt libraries and approved patterns help reduce risk by default, giving builders safe starting points rather than blank canvases. This approach doesn’t stifle innovation, it channels it safely.

Education, however, is the real force multiplier.

Most prompt injection issues are not the result of malice, but misunderstanding. Citizen developers often don’t realise how easily a prompt can override guardrails or chain actions together. Organisations that invest in targeted training see a measurable reduction in incidents. This isn’t about turning everyone into a security expert. It’s about helping teams understand how agents interpret instructions, what risky prompts look like, and when to stop and escalate. When employees know the boundaries, they are far less likely to cross them accidentally.

Finally, the strongest organisations assume that no single control will ever be enough.

Defense in depth remains the gold standard. Technical safeguards, governance processes, and human oversight reinforce one another. Network segmentation limits where agents can operate. Encryption protects data even if access is misused. Manual approvals and audits add friction to high-risk actions. Together, these layers acknowledge a simple truth: AI systems are probabilistic, not deterministic. They require supervision.

When these practices are applied consistently, the narrative changes. Copilot Studio stops being a perceived liability and becomes a controlled, strategic asset. The goal is not to restrict AI, but to embed it within a security-smart culture where innovation and risk management move forward together.

No one said building AI was easy – which is why having an experienced AI partner can make all the difference. At Digital Bricks, we live and breathe Copilot security and know the latest Microsoft AI risks. We help companies integrate Copilot Studio (and even the new Copilot Studio Lite) the right way: fast and safe.

How do we do it? Beyond just writing code, we coach your teams. Our Agent Adoption Accelerator and Copilot Adoption Accelerator programs distill years of best practices into a clear roadmap. We cover everything from picking the right use cases to secure deployment. That means one-on-one workshops, hands-on training, and ready-to-use prompt libraries. We build governance templates together so no agent goes live without an expert review. We also help configure Microsoft’s built-in protections – like AI Security Lockbox and real-time monitoring – so you’re not fighting threats blind.

.png)

In short, we become an extension of your team. We educate your developers on prompt security, help your IT admins enforce policies, and set up all the technical safeguards (think permission models, input filters, logging, etc.) before a single agent goes online. With us as your AI partner, you get all the power of generative AI without wondering if someone can break in through a clever question. For IT leaders, this means peace of mind. You can say “yes” to Copilot’s promise while Digital Bricks handles the complexity.

The age of AI agents is here, and with it a new front in cybersecurity. Prompt injection might sound exotic, but it’s just another reminder that every new convenience needs guardrails. By layering controls – technical safeguards, clear policies, and ongoing education – you can confidently deploy Copilot Studio agents without compromising your data. For the busy IT leader or CISO, the mantra is simple: build smart, build safe. And with a partner like Digital Bricks by your side, you have one more set of eyes and hands to make sure your AI rollout stays on the bright side of innovation.