What is Retrieval Augmented Generation (RAG)?

RAG, short for Retrieval Augmented Generation, is a method that merges the strengths of pre-trained large language models with external data sources. By integrating the generative capabilities of LLMs such as GPT-3 or GPT-4 with specialized data search mechanisms, RAG produces responses that are both nuanced and precise. This article delves into the intricacies of retrieval augmented generation, offering practical examples, applications, and resources for further exploration of LLMs.

Let's illustrate the concept of RAG and its functionality with a common business scenario.

Suppose you manage operations for a tech company specializing in electronic gadgets such as smartphones and laptops. Your goal is to develop a customer support chatbot to handle various user inquiries ranging from product specifications to troubleshooting and warranty details.

Your intention is to leverage the prowess of LLMs such as GPT-3 or GPT-4 to drive the chatbot's responses. However, the inherent limitations of large language models can impede the efficiency of the customer experience in the following ways:

Absence of precise data

Traditional language models are constrained to offering generic responses derived from their training data. When users pose inquiries tailored to the software your company offers or seek guidance on intricate troubleshooting procedures, conventional LLMs might struggle to furnish accurate solutions.

The root of this challenge lies in their lack of exposure to organization-specific data. Additionally, the training data of these models is bound by a cutoff date, restricting their capacity to deliver current and relevant responses.

Hallucinations

LLMs are prone to "hallucinate," wherein they confidently produce inaccurate responses based on fictitious information. Moreover, these algorithms may diverge into off-topic responses when unable to furnish precise answers to user queries, resulting in a subpar customer experience.

Standardized replies

Language models frequently furnish generic responses devoid of context specificity. In customer support contexts, the absence of personalized responses tailored to individual user preferences can pose a significant limitation.

RAG effectively addresses these limitations by facilitating the integration of LLMs' general knowledge base with the capability to access organization-specific information, such as data from product databases and user manuals. This approach ensures the delivery of highly precise and dependable responses customized to the unique requirements of your organization.

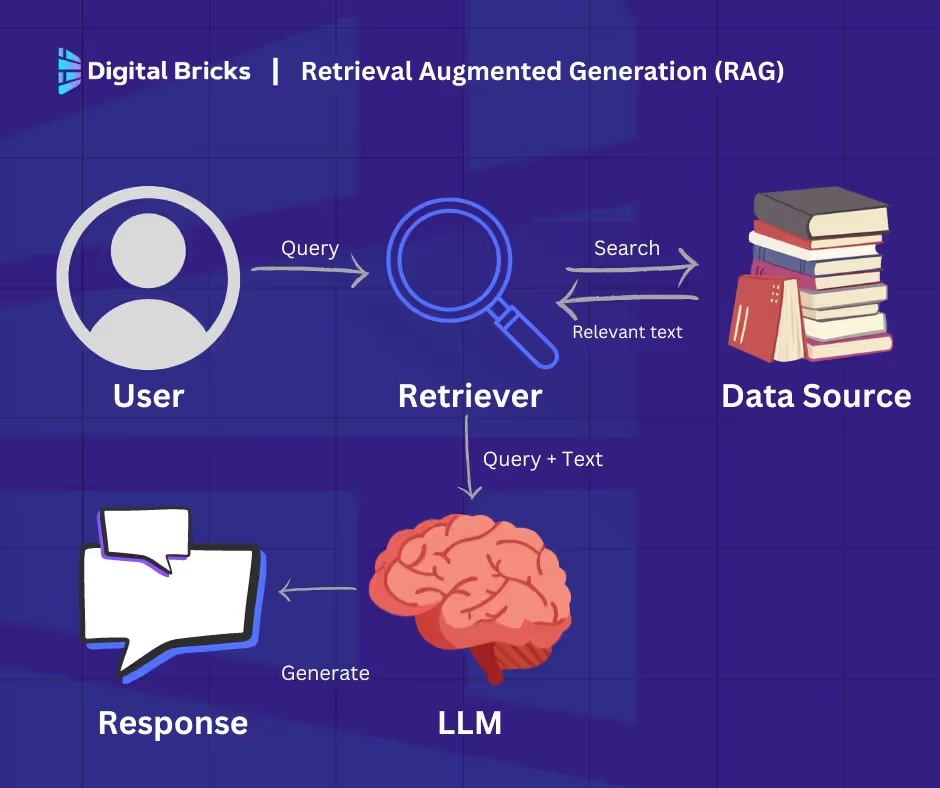

Now that you grasp the essence of RAG, let’s delve into the procedural steps involved in establishing this framework:

Step 1: Data Compilation Commence by gathering all pertinent data essential for your application. For instance, in developing a customer support chatbot for an electronics firm, collate user manuals, a product database, and a comprehensive list of FAQs.

Step 2: Data Segmentation Data segmentation entails dividing your dataset into more digestible portions. For instance, if dealing with a lengthy 100-page user manual, segment it into distinct sections, each potentially addressing different customer queries.

This strategy ensures that each data segment remains focused on a specific topic. By avoiding irrelevant information from entire documents, the system increases its likelihood of delivering directly applicable responses to user queries, thereby enhancing operational efficiency.

Step 3: Document Embedding Following the breakdown of source data into smaller segments, it undergoes conversion into a vector representation. This conversion process involves transforming textual data into embeddings, numeric representations capturing the semantic essence of the text.

In essence, document embeddings empower the system to comprehend user queries and correlate them with relevant information in the source dataset based on textual meaning, transcending simplistic word-to-word comparisons. This methodology guarantees that responses remain pertinent and aligned with user inquiries.

Step 4: Query Handling Upon entry of a user query into the system, it undergoes conversion into an embedding or vector representation. Consistency between document and query embeddings is pivotal, necessitating the utilization of the same model for both.

Following query embedding conversion, the system juxtaposes it with document embeddings. Utilizing metrics such as cosine similarity and Euclidean distance, the system identifies and retrieves segments whose embeddings bear the closest resemblance to the query embedding, deeming them most relevant to the user’s inquiry.

Step 5: Response Generation via LLM The system channels the retrieved text segments, alongside the initial user query, into a language model. Leveraging this amalgamation of data, the algorithm crafts coherent responses to the user’s queries via a chat interface.

Here is a simplified flowchart summarizing how RAG works:

Now that we understand how RAG enables LLMs to generate coherent responses using external information, its diverse business applications promise enhanced organizational efficiency and user experience. Beyond the customer chatbot scenario discussed earlier, here are some practical applications of RAG:

RAG harnesses content from external sources to generate precise summaries, offering significant time savings. Consider busy managers and high-level executives who lack the bandwidth to sift through extensive reports.

With an RAG-driven application, they can swiftly access key findings from textual data, streamlining decision-making processes without the need to delve into lengthy documents.

RAG systems offer the capability to analyze customer data, including past purchases and reviews, to formulate personalized product recommendations. This enhancement not only elevates the user experience but also drives increased revenue for the organization.

For instance, RAG applications can enhance movie recommendations on streaming platforms by considering a user’s viewing history and ratings. Additionally, they can analyze written reviews on e-commerce platforms to suggest relevant products.

Leveraging the semantic comprehension inherent in LLMs, RAG systems deliver personalized suggestions that surpass the capabilities of traditional recommendation systems, offering users more nuanced and tailored recommendations.

3. Business Intelligence

Organizations traditionally base business decisions on monitoring competitor behavior and analyzing market trends. This process involves meticulous examination of data contained in business reports, financial statements, and market research documents.

By leveraging an RAG application, organizations can streamline the process of identifying trends in these documents. Rather than manual analysis, an LLM can efficiently extract meaningful insights, enhancing the efficiency and effectiveness of the market research process.

While RAG applications offer a means to bridge information retrieval and natural language processing, their implementation presents unique challenges. Let's explore the complexities encountered in building RAG applications and discuss strategies to mitigate them.

Integration Complexity

Integrating a retrieval system with an LLM can be challenging, especially when dealing with multiple sources of external data in varied formats. Consistency in the data fed into an RAG system is essential, necessitating uniform embeddings across all data sources.

To overcome integration complexity, separate modules can be designed to handle different data sources independently. Data within each module can then undergo preprocessing for uniformity, and a standardized model can ensure consistent embeddings.

Scalability

The efficiency of RAG systems diminishes as the volume of data increases due to computationally intensive operations such as generating embeddings, comparing text meanings, and real-time data retrieval.

To address scalability challenges, distributing computational load across different servers and investing in robust hardware infrastructure is essential. Caching frequently asked queries can improve response times. Implementing vector databases can also enhance scalability by facilitating easy handling of embeddings and quick retrieval of closely aligned vectors.

Data Quality

The effectiveness of an RAG system hinges on the quality of the input data. Poor source content results in inaccurate responses, underscoring the importance of diligent content curation and fine-tuning processes.

Organizations should invest in refining data sources to enhance quality, potentially involving subject matter experts to review and fill in information gaps before employing the dataset in an RAG system.

RAG represents a cutting-edge technique to leverage LLM language capabilities alongside specialized databases, addressing pressing challenges in language model applications. However, like any technology, RAG systems have limitations, particularly their dependence on input data quality.

To maximize the utility of RAG systems, human oversight in data curation is crucial. Meticulous curation of data sources, coupled with expert knowledge, ensures the reliability and effectiveness of these solutions in real-world applications.